Dear friends,

I spoke last week at re:MARS, Amazon's conference focusing on machine learning, automation, robotics, and space. I heard great talks from Jeff Bezos, Kate Darling, Ken Goldberg, Marc Raibert, and others.

And . . . I got to sit in Blue Origin’s space capsule!

I spoke about taking AI to industries outside the software industry, like manufacturing, logistics, and agriculture. Even though we’ve had a lot of exciting breakthroughs in machine learning, shipping AI products is still hard. There’s a big gap between research results and practical deployments.

One of the biggest problems is generalizability — the real world keeps giving us test data that’s different from anything we saw when building the models. In order to take AI to every industry, we as a community still have important work to do to bridge this gap.

Looking forward to heading to ICML this Friday!

Keep learning,

Andrew

News

Vacation for Videographers

Another week, another way to make deepfakes. A team at Samsung recently proposed a model that generates talking-head videos by imposing facial landmarks over still images. Now a different team offers one that makes an onscreen speaker say anything you can type.

What’s new: Ohad Fried and fellow researchers at Stanford, Adobe, and Princeton unveiled a system that morphs talking-head videos to match any script. Simply type in new words, and the speaker delivers them flawlessly.

How it works: Given a video and a new script, the model identifies where to make edits and finds syllables in the recording that it can patch together to render the new words. Then it reconstructs the face. The trick is to match mouth and neck movements with desired verbal edits while maintaining consistent background and pose:

- Face tracking obtains facial properties — orientation, shape, and expression — as well as environmental characteristics like lighting.

- Given new text, the system searches over a transcript of the original recording for phonemes useful in constructing the new words. Then it extracts the corresponding frames and facial features and substitutes them for the originals.

- Extracted frames can introduce jumps in both the background and the speaker’s pose. The system mitigates such artifacts by further swapping in nearest-neighbor frames from the original video around the edit location.

- In parallel with these modifications, the system interpolates extracted facial features with original features at the edit location to produce a smoother sequence.

- A recurrent GAN replaces the mouth and neck movements in the nearest-neighbor images with movements that match the interpolated facial parameters.

- For now the system works on video only. The researchers re-recorded the speaker’s voice or synthesized a new one to fill in the sound track. But there may be better options.
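The phoneme search step can be illustrated with a toy example. The sketch below is a simplified stand-in, not the researchers’ algorithm: it greedily covers the new words’ phonemes with the longest contiguous runs it can find in the original transcript, which is the basic idea of reusing existing snippets. The function name `cover_with_snippets` and the ARPAbet-style phoneme strings are hypothetical, and the published system also weighs visual and contextual fit, which this sketch ignores.

```python
from typing import List, Tuple


def cover_with_snippets(target: List[str], source: List[str]) -> List[Tuple[int, int]]:
    """Greedily cover `target` phonemes with the longest runs found in `source`.

    Returns (start, end) index pairs into `source` (end exclusive) whose
    concatenated phonemes equal `target`. Raises ValueError if some target
    phoneme never occurs in the source recording.
    """
    spans: List[Tuple[int, int]] = []
    i = 0
    while i < len(target):
        best_start, best_len = -1, 0
        # Try every start position in the source and extend the match as far as possible.
        for s in range(len(source)):
            length = 0
            while (i + length < len(target) and s + length < len(source)
                   and source[s + length] == target[i + length]):
                length += 1
            if length > best_len:
                best_start, best_len = s, length
        if best_len == 0:
            raise ValueError(f"phoneme {target[i]!r} not found in source recording")
        spans.append((best_start, best_start + best_len))
        i += best_len  # continue with the first phoneme not yet covered
    return spans


# Hypothetical transcript phonemes for "hello world" and new words "low word".
source = ["HH", "AH", "L", "OW", "W", "ER", "L", "D"]
target = ["L", "OW", "W", "ER", "D"]
print(cover_with_snippets(target, source))  # → [(2, 6), (7, 8)]
```

Each returned span would then point to the video frames where those phonemes were spoken, which is where the frame extraction and blending steps above take over.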

Results: Fried’s new technique allows more flexible editing and creates more detailed reconstructions than earlier methods. Test subjects barely noticed the editing.

Limitations: The approach reshapes only the mouth and neck region (and the occasional hand entering that region). That leaves room for expressive inconsistencies, such as a deadpan face when the new script calls for surprise.

Why it matters: Video producers will love this technology. It lets them revise and fix mistakes without the drudgery of re-recording or manually blending frames. Imagine a video lecture tailored to your understanding and vocabulary. Producing a slightly different lecture for each student would be a monumental task for human beings, but it’s a snap for AI.

We’re thinking: It goes without saying that such technology is ripe for abuse. Fried recommends that anyone using the technology disclose that fact up-front. We concur but hold little optimism that fakers will comply.

AI’s Steep Energy Cost

Here’s a conundrum: Deep learning could help address a variety of intractable problems, climate change among them. Yet neural networks can consume gargantuan quantities of energy, potentially dumping large amounts of heat-trapping gas into the atmosphere.

What’s new: Researchers studying the energy implications of deep learning systems report that training the latest language models can generate as much atmospheric carbon as five cars over their lifetimes, including manufacturing.

How bad is it? Training a Transformer with 65 million parameters generates 26 pounds of carbon dioxide equivalent, a bit more than burning a gallon of fuel. OK, we’ll bike to work. But tuning a Transformer roughly four times that size using neural architecture search generated 626,155 pounds (a car produces 126,000 pounds from factory to scrapyard). To make matters worse, developing an effective model generally requires several training cycles. The researchers put the total for a typical development process at around 78,000 pounds.
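The headline comparisons are simple ratios of the figures quoted above, so they are easy to check. This snippet uses only those figures; the variable names are our own.

```python
# Figures quoted in the paragraph above, in pounds of CO2 equivalent.
BASE_TRANSFORMER_LBS = 26         # 65M-parameter Transformer, one training run
NAS_TRANSFORMER_LBS = 626_155     # larger Transformer tuned with architecture search
CAR_LIFETIME_LBS = 126_000        # one car, factory to scrapyard
TYPICAL_DEVELOPMENT_LBS = 78_000  # typical development process, per the researchers

cars_for_nas = NAS_TRANSFORMER_LBS / CAR_LIFETIME_LBS
nas_vs_base = NAS_TRANSFORMER_LBS / BASE_TRANSFORMER_LBS
cars_for_typical = TYPICAL_DEVELOPMENT_LBS / CAR_LIFETIME_LBS

print(f"NAS-tuned Transformer: {cars_for_nas:.1f} car lifetimes")      # → 5.0
print(f"NAS run vs. base run: {nas_vs_base:,.0f}x")                     # → 24,083x
print(f"Typical development: {cars_for_typical:.2f} car lifetimes")    # → 0.62
```

The "five cars" headline comes from the architecture-search scenario; a typical development process lands at roughly six-tenths of one car’s lifetime emissions.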

How they measured: Emma Strubell, Ananya Ganesh, and Andrew McCallum at the University of Massachusetts Amherst considered Transformer, BERT, ELMo, and GPT-2. For each model, they:

- Trained for one day.

- Sampled energy consumption throughout.

- Multiplied the average power draw by the total training time reported by each model’s developers.

- Converted total energy consumption into pounds of CO2 equivalent based on the average carbon intensity of U.S. electricity generation.
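The measurement recipe above boils down to a short calculation. Here is a minimal sketch, assuming power is sampled in watts during the short run and that a data-center overhead multiplier and a grid carbon-intensity figure are applied at the end. The function name and both default constants are assumptions for illustration, not necessarily the values the authors used.

```python
from typing import Sequence


def estimate_co2_lbs(
    power_samples_watts: Sequence[float],
    reported_training_hours: float,
    overhead_factor: float = 1.58,   # ASSUMED data-center overhead multiplier
    lbs_co2_per_kwh: float = 0.954,  # ASSUMED average grid carbon intensity
) -> float:
    """Estimate pounds of CO2e for a full training run from a short power trace.

    1. Average the power samples taken during a short (e.g., one-day) run.
    2. Scale by the total training time the model's developers reported.
    3. Convert energy (kWh) to CO2e using a grid carbon-intensity figure.
    """
    if not power_samples_watts:
        raise ValueError("need at least one power sample")
    avg_watts = sum(power_samples_watts) / len(power_samples_watts)
    kwh = avg_watts * reported_training_hours / 1000 * overhead_factor
    return kwh * lbs_co2_per_kwh


# Hypothetical trace: three samples, model reportedly trained for 24 hours.
samples = [250.0, 300.0, 350.0]
print(round(estimate_co2_lbs(samples, 24), 2))                        # → 10.85
# The same energy on a cleaner (hypothetical 0.2 lbs/kWh) grid.
print(round(estimate_co2_lbs(samples, 24, lbs_co2_per_kwh=0.2), 2))   # → 2.28
```

The last parameter is where the debate below comes in: the same training run yields a very different estimate depending on the carbon intensity assumed for the electricity.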

The debate: The conclusions sparked much discussion on Twitter and Reddit. The researchers based their estimates on the average U.S. energy mix. However, some of the biggest AI platforms are far less carbon-intensive. Google claims its AI platform runs on 100 percent renewable energy. Amazon claims to be 50 percent renewable. The researchers trained on a GPU, ignoring the energy efficiency of more specialized chips like Google’s TPU. Moreover, the most carbon-intensive scenario cost between $1 million and $3 million — not an everyday expense. Yes, AI is energy-intensive, but further research is needed to find the best ways to minimize the impact.

Everything is political: Ferenc Huszár, a Bayes booster and candidate for the International Conference on Machine Learning’s board of directors, tried to take advantage of the buzz. He proposed “phasing out deep learning within the next five years” and advised his Twitter followers to “vote Bayesian” in ICML’s upcoming election. ¯\_(ツ)_/¯

We’re thinking: This work is an important first step toward raising awareness and quantifying deep learning's potential CO2 impact. Ever larger models are bound to gobble up energy saved by more efficient architectures and specialized chips. But the real issue is how we generate electricity. The AI community has a special responsibility to support low-carbon computing and sensible clean energy initiatives.

A MESSAGE FROM DEEPLEARNING.AI

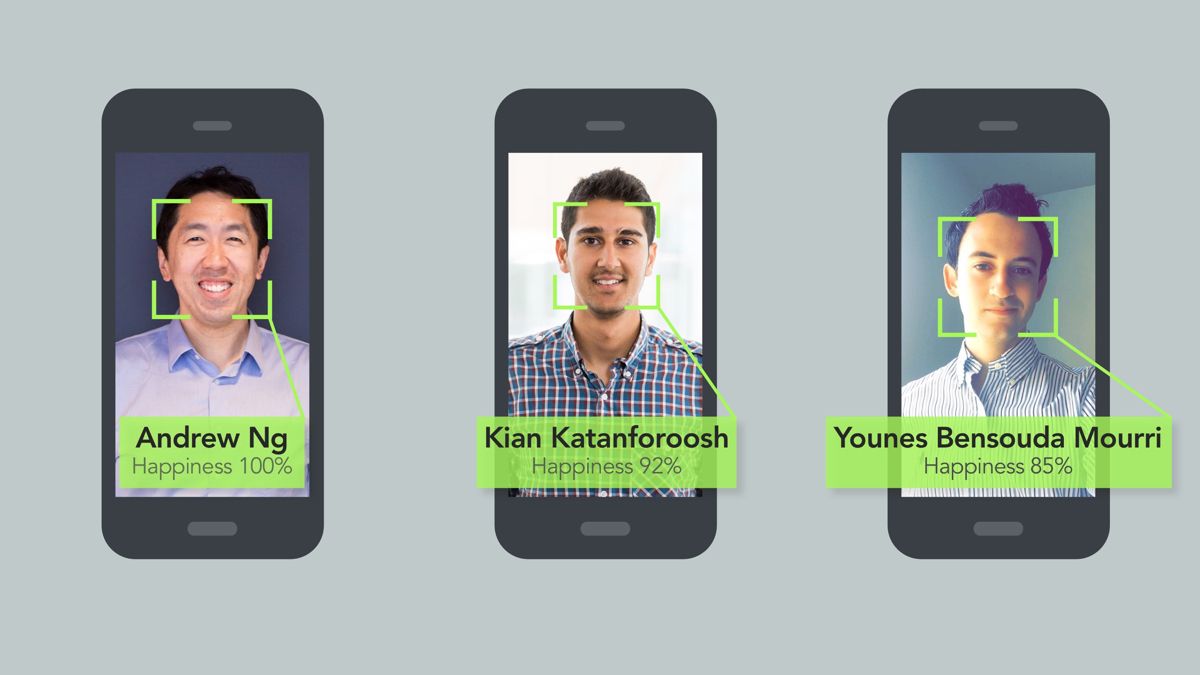

Detect how happy someone is based on their facial expression in the Deep Learning Specialization.

Better Than GAN?

Generative adversarial networks can synthesize images to help train computer vision systems. But GANs are compute-hungry and don’t always produce realistic output. Now there’s a more efficient and true-to-life alternative.

What’s new: DeepMind introduced VQ-VAE-2, an upgrade to the Vector Quantized-Variational AutoEncoder (VQ-VAE) it unveiled in 2017. VQ-VAE-2 generates class-conditional images faster than GANs and with finer detail. An image recognizer trained exclusively on pictures from VQ-VAE-2 classified ImageNet data 15 percent more accurately than the same model trained on GAN-generated images.

How it works: VQ-VAE-2 is a variational autoencoder with modifications:

- A typical variational autoencoder represents input images as condensed continuous vectors and uses these representations to reconstruct the images. These compact representations can be randomly sampled during inference to create new images that look realistic.

- The first-generation VQ-VAE works the same way, but rather than drawing from a continuous set of possibilities, it maps each encoder output to the closest entry in a “codebook” of discrete vectors, a process known as quantization. The codebook is trained jointly with the rest of the model.

- Quantization limits the encoder's power, but overall it creates a more stable task for the system to train on.

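- VQ-VAE-2 splits the representation into two co-dependent levels: a top level that captures global image features such as overall shape, and a bottom level, conditioned on the top, that captures local features such as texture.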

- The decoder CNN uses both top and bottom representations to construct output images that combine both local and global features.
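The quantization step at the heart of VQ-VAE is a nearest-neighbor lookup. The sketch below shows only that lookup, using plain Python and toy two-dimensional vectors; how the codebook is learned, the two-level hierarchy, and the model used to sample new codes are all omitted. The function names are our own.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(encodings: Sequence[Vector],
             codebook: Sequence[Vector]) -> Tuple[List[int], List[List[float]]]:
    """Replace each encoder output with its nearest codebook entry.

    Returns the chosen codebook indices (the discrete codes) and the
    quantized vectors that the decoder would receive.
    """
    indices: List[int] = []
    quantized: List[List[float]] = []
    for z in encodings:
        k = min(range(len(codebook)), key=lambda j: squared_distance(z, codebook[j]))
        indices.append(k)
        quantized.append(list(codebook[k]))
    return indices, quantized


# Toy codebook with three entries and three encoder outputs.
codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
encodings = [[0.2, -0.1], [4.0, 4.5], [0.9, 1.2]]
print(quantize(encodings, codebook)[0])  # → [0, 2, 1]
```

Because every encoder output snaps to one of a fixed set of vectors, each image becomes a grid of small integers, which is what makes the representation discrete.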

Why it matters: Although GANs have been improving at a rapid clip, VQ-VAE-2 generates better images using substantially less computation. It also produces more diverse output, making it better suited for data augmentation.

We’re thinking: Better generative models could drive progress in fields beyond computer vision. Generative models aren't yet widely used for data augmentation, but if such algorithms can help in small-data settings, they would be a boon to machine learning. Meanwhile, we’ll enjoy the pretty pictures.

Things Look Different Over There

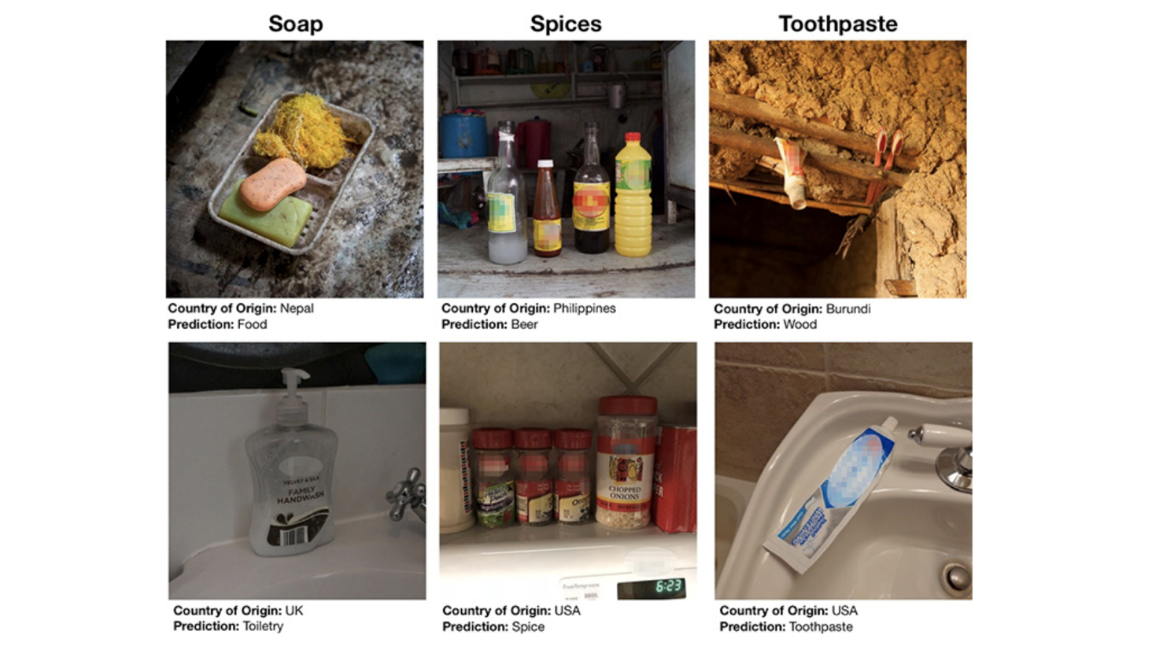

The most widely used image-recognition systems are better at identifying items from wealthy households than from poor ones.

What’s new: Facebook researchers tested object-recognition systems on Dollar Street, a corpus of household scenes from 50 countries ranked by income level. Services from Amazon, Clarifai, Google, IBM, and Microsoft performed poorly on photos from several African and Asian countries relative to their performance on images from Europe and North America. Facebook’s own system was roughly 20 percent more accurate on photos from the wealthiest households than on those from the poorest, where even common items like soap may look very different.
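Gaps like these only show up when accuracy is broken out by group rather than reported as a single number. Here is a minimal sketch of that kind of disaggregated evaluation; the function name, group labels, and records are made up for illustration and are not data from the study.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute accuracy separately for each group.

    `records` holds (group, prediction_was_correct) pairs, e.g. one pair per
    test image, with the group being an income bracket or region.
    """
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        if ok:
            correct[group] += 1
    return {g: correct[g] / totals[g] for g in totals}


# Hypothetical toy records: four test images per income bracket.
records = [
    ("top", True), ("top", True), ("top", True), ("top", False),
    ("bottom", True), ("bottom", False), ("bottom", True), ("bottom", False),
]
print(accuracy_by_group(records))  # → {'top': 0.75, 'bottom': 0.5}
```

An overall accuracy of 62.5 percent on these toy records would hide the difference between the two brackets.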

Behind the news: Nearly all photos in ImageNet, COCO, and Open Images come from Europe and North America, the researchers point out. So systems trained on those data sets are better at recognizing, say, a Western-style wedding than an Indian-style wedding. Moreover, photos labeled “wedding,” with their veiled brides and tuxedoed grooms, look very different from those labeled with the equivalent word in Hindi (शादी) with their bright-red accents. Systems designed around English labels may ignore relevant photos from elsewhere, and vice versa.

We're thinking: Bias in machine learning runs deep and manifests in unexpected ways, and the stakes can be especially high in applications like healthcare. There is no simple solution. Ideally, data sets would represent social values. Yet different parts of society hold different values, making it hard to define a single representative data distribution. We urge data set creators to examine ways in which their data may be skewed and work to reduce any biases.