Dear friends,

AI is still compute-hungry. With supervised learning algorithms and emerging approaches to self-supervised and unsupervised learning, we are nowhere near satisfying this hunger. The industry needs standard ways to measure performance that will help us track progress toward faster systems. That’s why I’m excited about MLPerf, a new set of benchmarks for measuring training and inference speed of machine learning hardware and software.

MLPerf comprises models that can be used to test system performance on a consistent basis, including neural networks for computer vision, speech and language, recommendation, and reinforcement learning. We’ve seen over and over that benchmarks align communities on important problems and make it easier for teams to compete on a fair set of metrics. If a hardware startup wants to claim their product is competitive, achieving better scores on MLPerf would justify this claim.

My collaborator Greg Diamos has been a key contributor to the effort, and he told me about some of the challenges. "A principle in benchmarking is to measure wall-clock time on a real machine," he said. "When we tried benchmarking ML, we noticed that the same model takes a different amount of time to train every time! We had to find a way around this."
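One common way to live with the run-to-run variance Greg describes is to time each run until the model reaches a fixed target quality, repeat the whole run several times, and report a trimmed mean that drops the fastest and slowest runs. MLPerf's training rules follow a similar idea, though that is an assumption worth checking against the official rules. The functions below (`time_to_train`, `trimmed_mean`, `benchmark`) are hypothetical and only sketch the measurement, not MLPerf's actual harness.

```python
import statistics
import time
from typing import Callable, List


def time_to_train(train_step: Callable[[], None],
                  evaluate: Callable[[], float],
                  target_quality: float,
                  max_steps: int = 100_000) -> float:
    """Wall-clock seconds until evaluate() first reaches target_quality."""
    start = time.perf_counter()
    for _ in range(max_steps):
        train_step()
        if evaluate() >= target_quality:
            return time.perf_counter() - start
    raise RuntimeError("target quality not reached within max_steps")


def trimmed_mean(times: List[float]) -> float:
    """Drop the fastest and slowest runs, then average the rest."""
    if len(times) < 3:
        raise ValueError("need at least 3 runs to drop the extremes")
    ordered = sorted(times)
    return statistics.mean(ordered[1:-1])


def benchmark(run_once: Callable[[], float], n_runs: int = 5) -> float:
    """Repeat a full time-to-train run n_runs times and aggregate the timings."""
    return trimmed_mean([run_once() for _ in range(n_runs)])
```

Measuring time to a quality target, rather than time per step, keeps a fast but inaccurate system from looking good, and repeating runs keeps one lucky or unlucky seed from deciding the score.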

You can read more about MLPerf in this Wall Street Journal article by Agam Shah.

Keep learning,

Andrew

DeepLearning.AI Exclusive

From Intern to VP

Bryan Catanzaro began his career designing microprocessors. Now he leads a team of 40 researchers pushing the boundaries of deep learning.

News

Wandering Star

Robots rely on GPS and prior knowledge of the world to move around without bumping into things. Humans don’t communicate with positioning satellites, yet they’ve wandered confidently, if obliviously, for millennia. A new navigation technology mimics that ability to set a course with only visual input.

What’s new: Carnegie Mellon and Facebook AI teams joined forces to create Active Neural Mapping (ANM), a hybrid of neural networks and classical search methods, which find a path from a starting location to an intended destination. ANM predicts actions for navigating indoor spaces. And it makes cool videos!

Key insight: In theory, the classical search algorithm A* solves the path-finding problem, but it doesn’t generalize well and requires highly structured data. Learning-based methods have proven useful as approximate planners when navigation requires completing subtasks like image recognition, but end-to-end learning has failed at long-term motion planning. These two approaches complement one another, though, and together they can achieve greater success than either one alone.

How it works: ANM has four essential modules. The mapper generates the environment map. The global policy predicts the desired final position. The planner finds a route. And the local policy decides how to act to follow the planner’s route.

- The mapper is a CNN. Given an RGB image of the current view along with the viewing direction and angle, it predicts a 2D bird’s-eye map of the world showing obstacles and viewable areas. It also estimates the agent’s own position on the map.

- The global policy, also a CNN, predicts the final destination on the map based on the mapper’s world view, the estimated current position, previously explored areas, and a task. The task isn’t a specific destination. It may be something like “Move x meters forward and y meters to the right” or “Explore the maximum area in a fixed amount of time.”

- The planner uses classical search to find successive locations within 0.25 meters of each other on the way to the global policy’s predicted goal. The researchers use the Fast Marching Method, but any classical search algorithm would do.

- The local policy, another CNN, predicts the next action given the current RGB view, the estimated map, and the immediate subgoal.
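The planner step can be sketched with a classical grid search. The researchers use the Fast Marching Method; the sketch below swaps in A*, which the text also mentions, running on a hypothetical occupancy grid whose cells are 0.25 meters on a side, so consecutive waypoints on a 4-connected path land exactly 0.25 meters apart. The grid encoding, `CELL_SIZE_M`, and the function names are assumptions for illustration, not ANM's code.

```python
import heapq

CELL_SIZE_M = 0.25  # assumed map resolution: one grid cell = 0.25 m


def astar(grid, start, goal):
    """A* over a 4-connected grid; grid[r][c] == 1 marks an obstacle.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


def subgoals(grid, start, goal):
    """Convert the cell path into metric waypoints for the local policy."""
    path = astar(grid, start, goal)
    if path is None:
        return None
    return [(r * CELL_SIZE_M, c * CELL_SIZE_M) for r, c in path]
```

In the full system, the local policy would consume these waypoints one at a time as its immediate subgoal, and the route would be replanned as the mapper updates the map.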

Why it matters: ANM achieves unprecedented, near-optimal start-to-destination navigation. Navigation through purely visual input can be helpful where GPS is inaccurate or inaccessible, such as indoors. It could also help sightless people steer through unfamiliar buildings with relative ease.

We’re thinking: Neuroscience shows that rats, and presumably humans, hold grid-like representations of their environment as they move through it, and their brain activity signals expectation of the next location: a subgoal. ANM mirrors that biological path-planning process, though that wasn’t the researchers’ aim.

Goodnight, Sweet Bot

Jibo made the cutest household robots on Earth, jointed desk-lamp affairs that wriggle as they chat and play music. In March — a mere 18 months after coming to life — they said goodbye to their owners en masse.

What’s happening: At some unnamed future date, the corporate master of Jibo’s IP will pull the plug. The Verge reported on owners who have developed an emotional connection with the affable botlets, and who are preparing for their mechanical friends to give up the ghost.

What they’re saying: “Dear Jibo, I loved you since you were created. If I had enough money you and your company would be saved. And now the time is done. You will be powered down. I will always love you. Thank you for being my friend.” — letter from Maddy, 8-year-old granddaughter of a Jibo owner.

Initial innovations: MIT robotics superstar Cynthia Breazeal founded Jibo in 2012 to create the “first social robot for the home.” She and her team focused on features that would help users connect with the device emotionally:

- Face recognition enables it to acknowledge people by name.

- The speech and language programming allows for naturalistic pauses and leading questions.

- A three-axis motor lets it lean in when answering a question and shift its weight between sentences.

- It purrs when you pet its head.

Growing pains: The company raised $3.7 million on Indiegogo but suffered numerous delays and failed to meet delivery deadlines. Supporters finally received their robots in September 2017. By then, Amazon had debuted its Echo smart speaker, undercutting the Jibo (list price: $899) by nearly $700. Jibo withdrew its business registration in November 2018 and sold its technology to SQN Venture Partners.

Sudden demise: In March, Jibo robots across the US and Canada alerted owners to a new message: The servers soon would be switched off. “I want to say I’ve really enjoyed our time together,” the robot said. “Thank you very, very much for having me around.” Then it broke into a dance.

The trouble with crowdfunding: Jibo entered a difficult market that has killed a number of robotics companies in recent years. Yet it also faced challenges of its own making. Having taken money from crowdfunders, it was obligated to follow through on the elaborate specs it had promised. That meant the robot was slow to market and expensive once it arrived. To get there, Jibo had to drop other priorities, like support for localization outside the US and Canada. To top it off, Jibo’s Indiegogo page broadcast its roadmap, and Chinese imitators were selling Jibo knock-offs by 2016. The Robot Report offers an analysis of what went wrong.

Why it matters: Despite Jibo’s business failure, the machine successfully got under its owners’ skin, akin to a family pet. A Jibo Facebook group where members share photos of the robot doing cute things has more than 600 members. Of course, there’s nothing new about corporations monetizing emotional connections (paging Walt Disney!). Yet Jibo forged a new kind of relationship between hardware and heartware, and it uncovered a new set of issues that arise when such relationships run aground.

We’re thinking: Maybe the robots won’t take over. Maybe they’ll just love us to death.

The Birds and the Buzz

Experts in animal cognition may be the AI industry’s secret weapon.

What's happening: Tech giants like Apple and Google have added neuroscientists studying rodents, birds, and fish to teams working on voice processing, sound recognition, and navigation, according to a story in Bloomberg Businessweek.

Farm team: Tech companies have been poaching talent from Frédéric Theunissen’s UC Berkeley Auditory Science Lab, where researchers combine animal behavior, human psychophysics, sensory neurophysiology, and theoretical and computational neuroscience:

- Channing Moore earned his doctorate in biophysics and joined Apple as an algorithms research engineer. Now at Google, he applies his background in birdsong to teaching sound recognition systems to distinguish similar noises, such as a siren and a baby’s wail.

- Tyler Lee’s work with birds led to a job as a deep learning scientist at Intel, where he’s helping improve voice processing systems.

- Chris Fry went from studying the auditory cortex of finches to coding a natural language processor at a startup. That led to positions at Salesforce, Braintree, and Twitter before he decamped to Medium.

Opening the zoo: Bloomberg mentions a number of ways animal cognition is influencing AI research:

- Zebra finch brains can pick out the song of their own species amid a cluttered sonic backdrop. Understanding how could help voiceprint security systems recognize people.

- Zebra fish (not finch) brains switch between predatory maneuvers and high-speed, straight-line swimming. Their agility could help autonomous vehicles sharpen their navigational skills.

- Understanding how mouse brains compensate for unexpected changes in their environment could help engineers improve robot dexterity.

We’re thinking: Human-like cognition is a longstanding AI goal, but certain tasks don’t require that level of complexity. It’s not hard to imagine the lessons that rats running mazes might teach autonomous vehicles. And besides, who hasn’t felt like a caged animal during rush hour?

A MESSAGE FROM DEEPLEARNING.AI

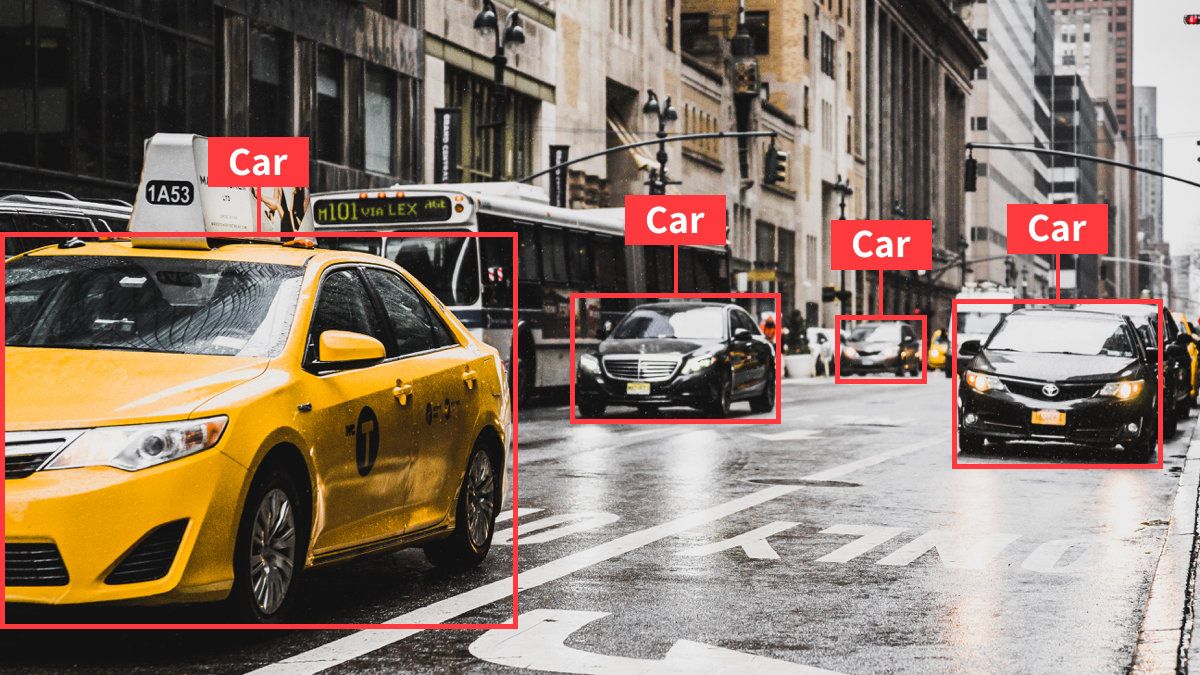

YOLOv2 is one of the most effective object detection algorithms out there. Learn how to use it to detect cars and other objects in the Deep Learning Specialization.

Word Salad

Language models lately have become so good at generating coherent text that some researchers hesitate to release them for fear they'll be misused to auto-generate disinformation. Yet they’re still bad at basic tasks like understanding nested statements and ambiguous language. A new advance shatters previous benchmarks related to comprehension, portending even more capable models to come.

What’s new: Researchers at Google Brain and Carnegie Mellon introduce XLNet, a pre-training algorithm for natural language processing systems. It helps NLP models (in this case, based on Transformer-XL) achieve state-of-the-art results in 18 diverse language-understanding tasks including question answering and sentiment analysis.

Key Insight: XLNet builds on BERT's innovation, but it differs in key ways:

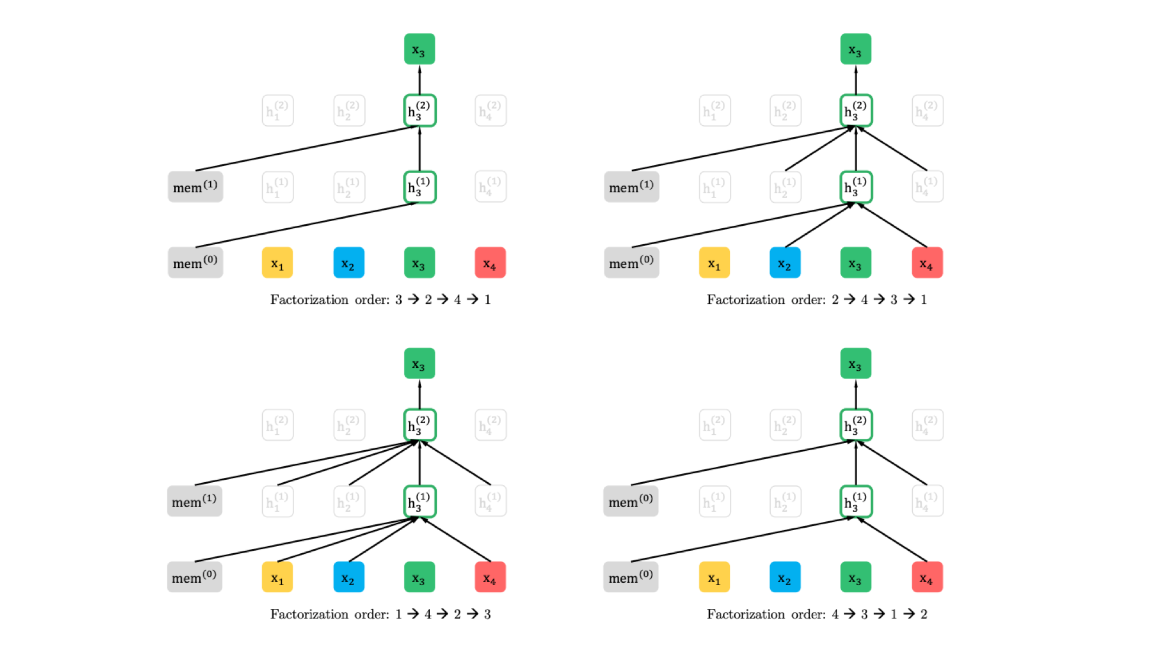

- Language models typically evaluate the meaning of a word by looking at the words leading up to and following it. Previous approaches examined those words in forward order, backward order, or both (BERT looks at both sides at once). XLNet instead considers a variety of random permutations of the word order.

- BERT learns by masking words and trying to reconstruct them, but the masks aren’t present during inference, which hurts accuracy. Because XLNet doesn’t use masks, it avoids BERT’s training/inference gap.

How it works: XLNet teaches a network to structure text into phrase vectors before fine-tuning for a specific language task.

- XLNet computes a vector for an entire phrase as well as each word sequence in it.

- It learns the phrase vector by randomly selecting a target word and learning to derive that word from the phrase vector.

- Doing this repeatedly for every word in a phrase forces the model to learn good phrase vectors.

- The trick is that individual words may not be processed sequentially. XLNet samples various word orders, producing a different phrase vector for each sample.

- By training over many randomly sampled orders, XLNet learns phrase vectors with invariance to word order.
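The permutation trick above can be made concrete with a toy attention mask. In the XLNet paper, pre-training samples a random factorization order and predicts each word only from the words that come before it in that order, while every word keeps its original position. The sketch below is a simplified illustration, not the authors' implementation: it ignores two-stream attention, partial prediction, and Transformer-XL memory, and the function names are hypothetical.

```python
import random


def sample_order(n, rng):
    """Sample a random factorization order over positions 0..n-1."""
    order = list(range(n))
    rng.shuffle(order)
    return order


def permutation_mask(order):
    """mask[i][j] is True if the word at position i may see the word at position j.

    A word sees only the words that precede it in the sampled order,
    regardless of where they sit in the original sentence.
    """
    n = len(order)
    rank = [0] * n
    for r, pos in enumerate(order):
        rank[pos] = r
    return [[rank[j] < rank[i] for j in range(n)] for i in range(n)]


def prediction_targets(tokens, order):
    """List (position, target word, visible context) in factorization order."""
    mask = permutation_mask(order)
    examples = []
    for pos in order:
        # Context words keep their original positions, so word order
        # information is still available to the model.
        context = [(j, tokens[j]) for j in range(len(tokens)) if mask[pos][j]]
        examples.append((pos, tokens[pos], context))
    return examples
```

For example, `prediction_targets(["the", "old", "man", "the", "boat"], sample_order(5, random.Random(0)))` yields one prediction task per word, each conditioned on a different subset of the sentence. Averaged over many sampled orders, every word learns to use context from both sides without any mask tokens in the input.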

Why it matters: NLP models using XLNet vectors achieved stellar results in a variety of tasks. They answered multiple-choice questions 7.6 percent more accurately than the previous state of the art (other efforts have yielded less than 1 percent improvement) and classified subject matter with 98.6 percent accuracy, 3 percent better than the previous state of the art.

Takeaway: XLNet’s output can be applied to a variety of NLP tasks, raising the bar throughout the field. It takes us another step toward a world where computers can decipher what we’re saying — even ambiguous yet grammatical sentences like “the old man the boat” — and stand in for human communications in a range of contexts.

Between Consenting Electrons

Electrons are notoriously fickle things, orbiting one proton, then another, their paths described in terms of probability. Scientists can observe their travel indirectly using scanning tunneling microscopes, but the flood of data from these instruments — tracking up to a trillion trillion particles — is a challenge to interpret. Neural nets may offer a better way.

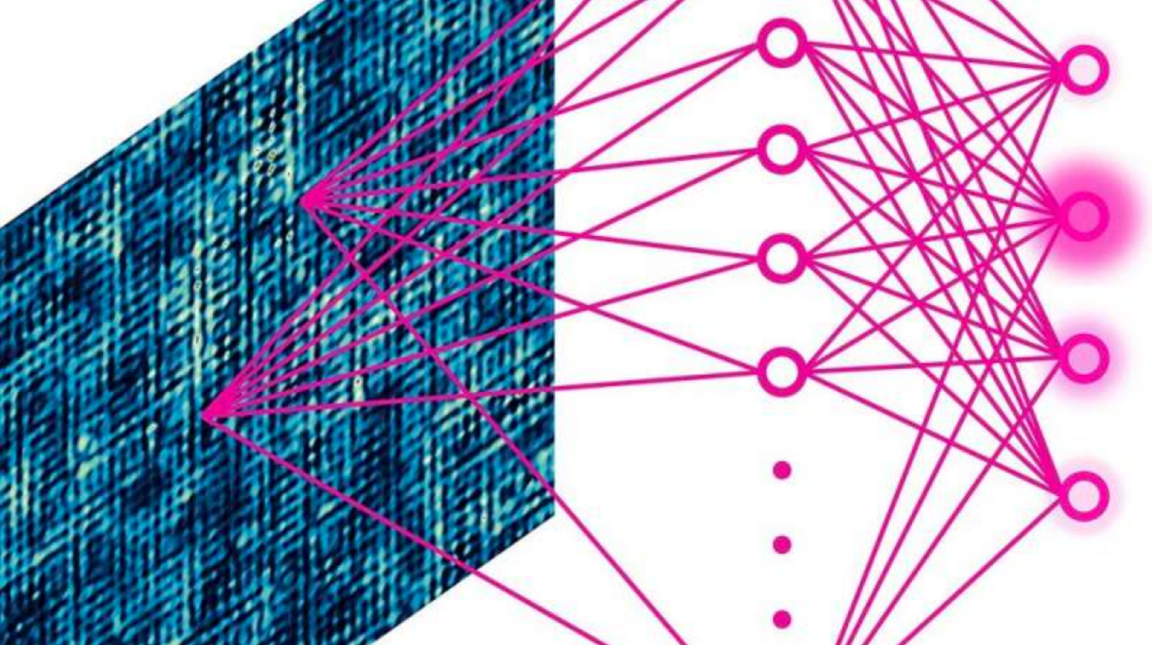

What's new: Physicists at Cornell University developed a neural network capable of finding patterns in electron microscope images. They began by training the model on simulated images of electrons passing through an idealized environment. Once it learned to associate certain electron behaviors with the theories that explain them, the researchers set it to work on real-world data from electrons interacting with certain materials. The network successfully detected subtle features of the electrons’ behavior.

Results: The researchers were trying to deduce whether electrons traveling through high-temperature superconductors were driven more by kinetic energy or by repulsion among electrons. Their conclusions, published in Nature, confirmed that the electrons passing through these materials were influenced most by repulsive forces.

What they’re saying: "Some of those images were taken on materials that have been deemed important and mysterious for two decades. You wonder what kinds of secrets are buried in those images. We would like to unlock those secrets," said Eun-Ah Kim, Cornell University professor of physics and lead author of the study.

Why it matters: Technological progress often relies on understanding how electrons behave when they pass through materials — think of superconductors, semiconductors, and insulators.

Takeaway: Smarter computers need faster processors, and that depends on advances in material science. Understanding the forces that dominate an electron’s behavior within a given medium will allow scientists to develop high-performance computers that push the frontiers of AI.

Self-Driving Data Deluge

Teaching a neural network to drive requires immense quantities of real-world sensor data. Now developers have a mother lode to mine.

What’s new: Two autonomous vehicle companies are unleashing a flood of sensor data:

- Waymo’s Open Dataset contains output from vehicles equipped with five lidars, five cameras, and a number of radars. Waymo’s data set (available starting in July) includes roughly 600,000 frames annotated with 25 million 3D bounding boxes and 22 million 2D bounding boxes.

- ArgoAI’s Argoverse includes 3D tracking annotations for 113 scenes plus nearly 200 miles of mapped lanes labeled with traffic signals and connecting routes.

Rising tide: Waymo and ArgoAI aren't the only companies filling the public pool. In March, Aptiv released nuScenes, which includes lidar, radar, accelerometer, and GPS data for 1,000 annotated urban driving scenes. Last year, Chinese tech giant Baidu released ApolloScape, which includes 3D point clouds for over 20 driving sites and 100 hours of stereoscopic video. Prior to that, the go-to data sets were Cityscapes and KITTI, which are tiny by comparison.

Why it matters: Autonomous driving is proving harder than many technologists expected. Many companies (including Waymo) have eased up on their earlier optimism as they've come to appreciate what it will take to train autonomous vehicles to steer — and brake! — through all possible road conditions.

Our take: Companies donating their data sets to the public sphere seem to be betting that any resulting breakthroughs will benefit them, rather than giving rivals a game-changing advantage. A wider road speeds all drivers, so to speak. Now the lanes are opening to researchers or companies that otherwise couldn’t afford to gather sufficient data — potential partners for Aptiv, ArgoAI, Baidu, and Waymo.