Dear friends,

I chatted recently with MIT researcher Lex Fridman on his Artificial Intelligence podcast, where we discussed our experiences teaching deep learning. It was the most fun I’ve had in an interview lately, and you can watch the video here.

Lex asked me what machine learning concepts students struggle with most. While I don’t think that any particular concept is especially difficult, studying deep learning is a lot like studying math. No particular math concept — addition, subtraction, and so on — is harder than others, but it’s hard to understand division if you don’t already understand multiplication. Similarly, deep learning involves many concepts, such as LSTMs with Attention, that build on other concepts, like LSTMs, which in turn build on RNNs.

If you’re taking a course on deep learning and struggling with an advanced concept like how ResNets work, you might want to review earlier concepts like how a basic ConvNet works.

As deep learning matures, our community builds new ideas on top of old ones. This is great for progress, but unfortunately it also creates longer “prerequisite chains” for learning the material. Putting in extra effort to master the basics will help you when you get to more advanced topics.

Keep learning!

Andrew

News

OpenAI Under Fire

An icon of idealism in AI stands accused of letting its ambition eclipse its principles.

What’s new: Founded in 2015 to develop artificial general intelligence for the good of humankind, OpenAI swapped its ideals for cash, according to MIT Technology Review.

The critique: OpenAI began as a nonprofit committed to sharing its research, code, and patents. Despite a $1 billion initial commitment from Elon Musk, Peter Thiel, and others, the organization soon found it needed a lot more money to keep pace with corporate rivals like Google’s DeepMind. As it sought to attract funding and talent, it drifted ever farther from its founding principles, writes reporter Karen Hao.

- In early 2019, OpenAI set up a for-profit arm. The organization limited investors to a 100-fold return, which critics called a ploy to promote expectations of exaggerated returns.

- Soon after, the organization accepted a $1 billion investment from Microsoft. In return, OpenAI said Microsoft was its “preferred partner” for commercializing its research.

- Critics accuse the company of overstating its accomplishments and, in the case of the GPT-2 language model, overdramatizing them by withholding code so it wouldn’t be misused.

- Even as it seeks publicity, OpenAI has grown secretive. The company provided full access to early research such as 2016’s Gym reinforcement learning environment (pictured above). Recently, though, it has kept some projects quiet, making employees sign non-disclosure agreements and forbidding them from talking to the press.

Behind the news: Musk seconded the critique, adding that he doesn’t trust the company’s leadership to develop safe AI. The Tesla chief resigned from OpenAI’s board last year, saying that Tesla’s autonomous driving research posed a conflict of interest.

Why it matters: OpenAI aimed to counterbalance corporate AI, promising a public-spirited approach to developing the technology. As the cost of basic research rises, that mission becomes increasingly important — and difficult to maintain.

We’re thinking: OpenAI’s team has been responsible for several important breakthroughs. We would be happy to see its employees and investors enjoy a great financial return. At the same time, sharing knowledge is crucial for developing beneficial applications, and exaggerated claims contribute to unrealistic expectations that can lead to public backlash. We hope that all AI organizations will support openness in research and keep hype to a minimum.

Surgical Speed-Up

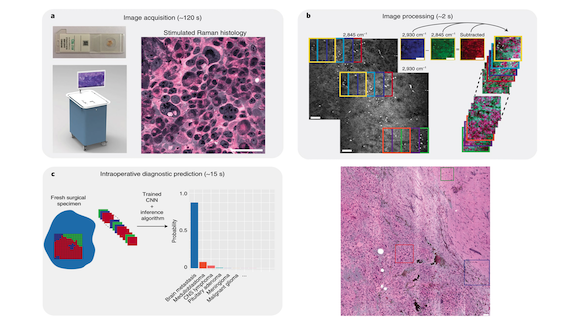

Every second counts when a patient’s skull is open in the operating room. A new technique based on deep learning can shorten some brain surgeries.

What’s new: During brain cancer operations, surgeons must stop in mid-operation for up to a half hour while a pathologist analyzes the tumor tissue. Led by neurosurgeon Todd Hollon, researchers at the University of Michigan and elsewhere developed a test powered by deep learning that diagnoses tumor samples in only a few minutes. (The paper is behind a paywall.)

Key insight: The authors trained a convolutional neural network (CNN) to diagnose tumor samples based on a rapid digital imaging technique known as stimulated Raman histology (SRH).

How it works: Previous approaches require transporting tumor tissue to a lab, running assays, and analyzing the results. The new test takes place within the operating room: A Raman spectroscope produces two SRH images that measure different properties of the sample, and a CNN classifies the images.

- The researchers fine-tuned the pretrained inception-resnet-v2 architecture on images from 415 patients. They trained the network to recognize 13 cancer types that account for around 90 percent of observed brain tumors.

- A preprocessing algorithm derives from each image a set of overlapping, high-resolution patches. This procedure creates a uniform, CNN-friendly image size; boosts the number of training samples; and eases parallel processing.

- The CNN predicts the tumor type of each patch, and the model chooses the diagnosis predicted most frequently in the patches.
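The patch-and-vote steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the researchers' pipeline: the function names, the tiny 4x4 "image," and `toy_classifier` (a stand-in for the trained CNN) are all assumptions made for the example.

```python
from collections import Counter


def extract_patches(image, patch_size, stride):
    """Slide a square window over a 2D image (a list of rows).

    A stride smaller than patch_size makes neighboring patches overlap.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            rows = image[top:top + patch_size]
            patches.append([row[left:left + patch_size] for row in rows])
    return patches


def majority_vote(labels):
    """Return the label that was predicted for the most patches."""
    return Counter(labels).most_common(1)[0][0]


def diagnose(image, classify_patch, patch_size=2, stride=1):
    """Classify every patch, then report the most frequent prediction."""
    patches = extract_patches(image, patch_size, stride)
    return majority_vote([classify_patch(patch) for patch in patches])


def toy_classifier(patch):
    """Hypothetical stand-in for the CNN: label a patch by its brightness."""
    return "bright" if any(pixel > 0 for row in patch for pixel in row) else "dark"


image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
print(len(extract_patches(image, 2, 1)))  # 9 overlapping 2x2 patches
print(diagnose(image, toy_classifier))    # dark (5 of 9 patches)
```

Overlapping windows turn one image into many fixed-size inputs, and voting across patches keeps a single misclassified patch from deciding the diagnosis.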

Results: The researchers measured the CNN’s performance in a clinical trial (the first trial of a deep learning application in the operating room, they said). They evaluated tumor samples using the CNN as well as chemical tests and compared the results with clinical diagnoses. The CNN was 94.6 percent accurate, 0.7 percentage points better than the next-best method.

Why it matters: Chemical tests not only incur the risk of interrupting surgery, they also need to be interpreted by a pathologist who may not be readily available. The CNN renders a diagnosis directly, potentially increasing the number of facilities where such operations can be performed.

We’re thinking: Deep learning isn’t brain surgery. But brain surgery eventually might be deep learning.

. . . And I Approve of This Deepfake

Deepfake tech reared its digitally altered head in Indian politics, but with a twist: Altered images of a politician were produced by his own campaign.

What happened: On the eve of a legislative assembly election, the local branch of India’s national ruling party, the Bharatiya Janata Party, released a video of its local leader criticizing the opposition in a language he does not speak, according to Vice.

How it works: The BJP hired a local company, Ideaz Factory, to feature Delhi BJP president Manoj Tiwari in a video aimed at voters who speak Haryanvi, a Hindi dialect spoken by many of the city’s migrant workers.

- Tiwari recorded a clip in which he accuses his opponents, in Hindi, of breaking campaign promises. A voice actor translated his words into Haryanvi, and Ideaz Factory used a GAN to transfer the actor’s mouth and jaw motions onto Tiwari’s face. Experts at the Rochester Institute of Technology quoted by Vice believe the company based its model on Nvidia’s vid2vid.

- The party released the altered video to thousands of WhatsApp groups, reaching as many as 15 million people. Then it commissioned a second deepfake of Tiwari speaking English.

- The BJP later called the effort a “test,” not an official part of its social media campaign. Encouraged by what it characterized as positive reactions from viewers, Ideaz Factory hopes to apply its approach to upcoming elections in India and the U.S.

Why it matters: Deepfakery’s political debut in India was relatively benign: It helped the ruling party reach speakers of a minority language, possibly fooling them into thinking Tiwari spoke their tongue. Nonetheless, experts worry that politicians could use the technology to supercharge propaganda, and malefactors could use it to spur acts of violence.

We’re thinking: We urge teams that create deepfakes to clearly disclose their work and avoid misleading viewers. As it happened, the fakery didn’t help much: The BJP won only eight of 70 seats in the Delhi assembly election.

Imitation Learning in the Wild

Faster than a speeding skateboard! Able to dodge tall trees while chasing a dirt bike! It’s … an upgrade in the making from an innovative drone maker.

What’s new: Skydio makes drones that follow and film extreme sports enthusiasts as they skate, cycle, and scramble through all types of terrain. The company used imitation learning to develop a prototype autopilot model that avoids obstacles even while tracking targets at high speed.

How it works (and sometimes doesn’t): Six fisheye cameras give the drone a 360-degree view. Separate models map the surroundings, lock onto the target, predict the target’s path, and plan the drone’s trajectory. But the rule-based autopilot software has trouble picking out details like small tree branches and telephone wires from a distance. At high speeds, the drone sometimes has to dodge at the last moment lest it wind up like a speeder-riding stormtrooper in Return of the Jedi.

The next step: The researchers aim to build an end-to-end neural network capable of flying faster while avoiding obstacles more effectively than the current autopilot.

- They began by training a standard imitation learning algorithm on a large corpus of real-world data generated by the current system. But the algorithm didn’t generalize well to obstacles like tree branches. The researchers believe that’s because the open sky often provides a variety of obstacle-free flight paths, so even a good path often yields little useful learning.

- So they switched the learning signal from the distance between the learner’s and the autopilot’s actions to the autopilot’s reward function. They also raised the penalty for deviating from its decisions when flying through crowded environments. This forced the model to learn that trees in the distance would mean dangerous branches up close.

- The weighted penalty system trained the drone to follow a target effectively through lightly wooded terrain based on only three hours of training data.
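The weighting idea in the steps above might look something like the following sketch. It is not Skydio's code: `weighted_penalty`, the per-sample `obstacle_densities` score (0 for open sky, 1 for dense woods), and the `crowd_weight` value are hypothetical, and the per-sample deviation costs are taken as given because the autopilot's reward function isn't described here.

```python
def weighted_penalty(deviation_costs, obstacle_densities, crowd_weight=4.0):
    """Average per-sample costs, counting crowded scenes more heavily.

    deviation_costs: how much each learner decision deviated from the
        autopilot's decision (assumed to be computed elsewhere).
    obstacle_densities: hypothetical crowding score in [0, 1] per sample.
    crowd_weight: hypothetical knob; 0 gives a plain, unweighted mean.
    """
    if len(deviation_costs) != len(obstacle_densities):
        raise ValueError("need one obstacle density per deviation cost")
    weights = [1.0 + crowd_weight * density for density in obstacle_densities]
    weighted_sum = sum(w * c for w, c in zip(weights, deviation_costs))
    return weighted_sum / sum(weights)


# The same mistake costs more among trees than under open sky.
densities = [0.0, 1.0]  # sample 0: open sky, sample 1: crowded
mistake_in_open_sky = weighted_penalty([1.0, 0.0], densities)
mistake_in_crowd = weighted_penalty([0.0, 1.0], densities)
print(mistake_in_crowd > mistake_in_open_sky)  # True
```

Because most flight time passes in open sky, an unweighted average would let those easy samples drown out the rare moments when a branch is close. Upweighting crowded scenes is one simple way to counter that imbalance.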

Why it matters: The new software is still in development, and Skydio has not announced a release date. But the faster its drones can fly through crowded terrain without mishaps, the more its customers will be able to pull off gnarly stunts without losing their robot sidekick to a tree branch, and the more cool videos we’ll get to watch on YouTube.

We’re thinking: Now, if only Skydio could help the Empire’s stormtroopers improve their aim with blasters.

Deep Learning for Object Tracking

AI is good at tracking objects in two dimensions. A new model processes video from a camera with a depth sensor to predict how objects move through space.

What’s new: Led by Chen Wang, researchers from Stanford, Shanghai Jiao Tong University, and Nvidia built a system that tracks objects fast enough for a robot to react in real time: 6D-Pose Anchor-based Category-level Keypoint-tracker (6-PACK). Why 6D? Because three-dimensional objects in motion have six degrees of freedom: three for linear motion and three for rotation. You can see the system in action in this video.

Key insight: Rather than tracking absolute location, 6-PACK tracks an object’s change in position from video frame to video frame. Knowing its position in one frame makes it easier to find in the next.

How it works: The network’s training data is labeled with a six-dimensional vector that represents changes in an object’s location and orientation between frames. From that information, it learns to extract keypoints such as edges and corners, calculate changes in their positions, and extrapolate a new position. Objects in the training data are labeled with a category such as bowl or mug.

- The researchers identify an object’s center in the first frame.

- The model uses that information to generate a cloud of points representing the object.

- Based on the center and point cloud, the network generates a user-defined number of keypoints, essentially a 3D bounding box.

- In each successive frame, the model uses the previous keypoint locations to estimate the center roughly. An attention layer learns to find the center more precisely. Then the network updates the point cloud, and from there, the keypoints.
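To make the frame-to-frame idea concrete, here is a small sketch of how per-frame pose changes can be chained into a running 6D pose (three numbers' worth of rotation plus three of translation). It covers only the bookkeeping, not the network. The helper names and the convention that each change is applied on top of the previous pose are assumptions for illustration.

```python
import math


def rot_z(degrees):
    """3x3 rotation matrix for a turn about the z axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def mat_mul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def apply_delta(pose, delta):
    """Update a (rotation, translation) pose with one inter-frame change."""
    rotation, translation = pose
    d_rotation, d_translation = delta
    moved = mat_vec(d_rotation, translation)
    return (mat_mul(d_rotation, rotation),
            [m + d for m, d in zip(moved, d_translation)])


def track(initial_pose, deltas):
    """Return the pose at every frame, starting from the initial pose."""
    poses = [initial_pose]
    for delta in deltas:
        poses.append(apply_delta(poses[-1], delta))
    return poses


# Two frames, each turning 45 degrees about z and rising 0.1 m.
start = (rot_z(0), [1.0, 0.0, 0.0])
step = (rot_z(45), [0.0, 0.0, 0.1])
final_rotation, final_translation = track(start, [step, step])[-1]
print([round(x, 3) for x in final_translation])
```

Each small change is easier to estimate than an absolute pose, but errors can accumulate as the changes are chained, which is one reason a tracker re-anchors on the object in every frame.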

Results: Tested on a dataset of real-world videos, 6-PACK predicted object position and rotation within 5 centimeters and 5 degrees in 33.3 percent of cases, versus the previous state of the art of 17 percent.
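For readers curious how a "within 5 centimeters and 5 degrees" score can be computed, here is a rough sketch. It assumes poses are given as a rotation matrix plus a translation in meters, and that "within" means at most the threshold. The function names are made up for this example, and a real benchmark may treat symmetric objects such as bowls differently.

```python
import math


def rot_z(degrees):
    """3x3 rotation matrix for a turn about the z axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rotation_error_degrees(r_pred, r_true):
    """Angle of the rotation that separates two rotation matrices."""
    # trace(r_true^T r_pred) equals the sum of elementwise products.
    trace = sum(r_pred[i][j] * r_true[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def translation_error_cm(t_pred, t_true):
    """Euclidean distance between two positions given in meters."""
    return 100.0 * math.sqrt(sum((p - t) ** 2 for p, t in zip(t_pred, t_true)))


def within_5cm_5deg(pred, true):
    """True if both the position and rotation errors are small enough."""
    return (translation_error_cm(pred[1], true[1]) <= 5.0
            and rotation_error_degrees(pred[0], true[0]) <= 5.0)


def accuracy_percent(preds, truths):
    """Share of predictions that pass the 5 cm, 5 degree test."""
    hits = sum(within_5cm_5deg(p, t) for p, t in zip(preds, truths))
    return 100.0 * hits / len(preds)


truth = (rot_z(0), [0.0, 0.0, 0.0])
close = (rot_z(3), [0.0, 0.0, 0.03])   # 3 degrees and 3 cm off: passes
rotated = (rot_z(10), [0.0, 0.0, 0.01])  # 10 degrees off: fails
print(accuracy_percent([close, rotated], [truth, truth]))  # 50.0
```

A prediction must clear both thresholds at once, so a tracker that nails position but drifts in rotation gets no credit for that frame.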

Why it matters: The ability to track objects as they move and rotate is essential to progress in robotics, both to manipulate things and to navigate around them.

We’re thinking: Object tracking algorithms and visual keypoints have a long history stretching beyond the 1960-vintage Kalman filter. Deep learning has come to dominate object recognition, and it’s good to see progress in tasks like tracking and optical flow.

Poultry in Motion

A top meat packer is counting its chickens with AI.

What’s new: Tyson Foods is using computer vision to track drumsticks, breasts, and thighs as they move through its processing plants, the Wall Street Journal reports.

How it works: Workers in slaughterhouses typically count the packages of poultry parts bound for market, then use hand signals to communicate the totals to another worker who enters the numbers into a computer. Tyson is replacing them with cameras that feed neural networks. The company has installed the system in three U.S. factories and plans to roll it out in its four other supermarket packaging factories by the end of the year.

- The camera system identifies cuts of meat along with inventory codes, while a scale weighs them.

- Workers double-check some of the system’s findings.

- Tyson says the system is 20 percent more accurate than the human-only method.

Behind the news: AI and robotics are coming home to roost in the poultry industry and beyond.

- The Tibot Spoutnic (shown above) is a Roomba-like robot that roams commercial chicken pens so the birds lay eggs in their nests rather than on the floor.

- Cargill is developing machine learning algorithms that monitor poultry farms for clucks indicating the birds are distressed or ill.

- A precision deboning bot from Georgia Tech uses computer vision to slice up chickens more efficiently than human butchers.

- AI is helping to manage other food animals as well: There’s face recognition for cows, activity trackers for pigs, and movement tracking to optimize feeding schedules for fish farms.

Why it matters: Food companies are looking to AI to drive down costs amid uncertain market conditions. Tyson’s profits took a hit last year. A recent industry analysis warns that the vagaries of feed prices, geopolitics, and avian flu make this year precarious as well.

We’re thinking: Consumers around the world are eating poultry. Any company hoping to meet demand had better not chicken out from the latest technology.