Dear friends,

My team at Landing AI just announced a new tool for quickly building computer vision models, using a technique we call Visual Prompting. It’s a lot of fun! I invite you to try it.

Visual Prompting takes ideas from text prompting — which has revolutionized natural language processing — and applies them to computer vision.

To build a text sentiment classifier using the traditional machine learning workflow, you have to collect and label a training set, train a model, and deploy it before you start getting predictions. This process can take days or weeks.

In contrast, in the prompt-based machine learning workflow, you can write a text prompt and, by calling a large language model API, start making predictions in seconds or minutes.

- Traditional workflow: Collect and label -> Train -> Predict

- Prompt-based workflow: Prompt -> Predict

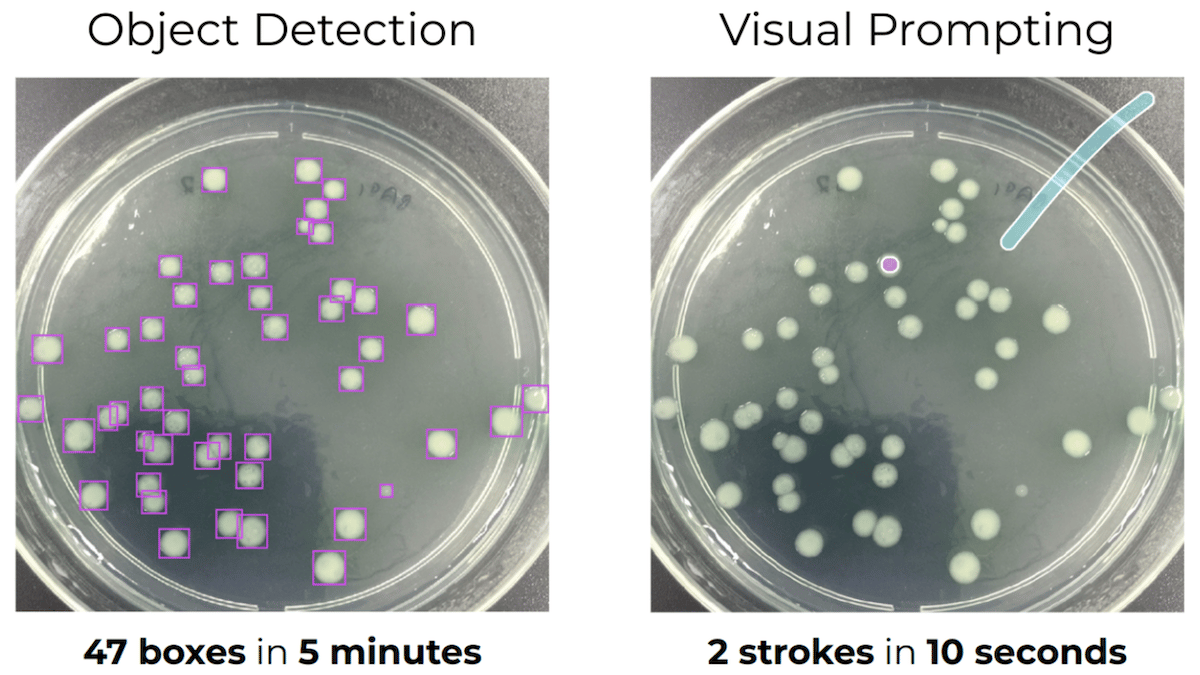
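To make the Prompt -> Predict workflow concrete, here is a minimal Python sketch of a prompt-based sentiment classifier. The prompt wording and the `build_prompt` and `classify` helpers are illustrative assumptions. The `llm` argument is any function that takes a prompt string and returns the model's reply; `fake_llm` is a hypothetical stand-in for a real large language model API call. Notice that there is no step for collecting data, labeling, or training.

```python
from typing import Callable

LABELS = ("positive", "negative")


def build_prompt(text: str) -> str:
    """Zero-shot prompt; the exact wording is an illustrative assumption."""
    return (
        "Classify the sentiment of the following review as positive or negative.\n"
        f"Review: {text}\n"
        "Sentiment:"
    )


def classify(text: str, llm: Callable[[str], str]) -> str:
    """Prompt -> Predict: send the prompt to a language model and parse its reply."""
    reply = llm(build_prompt(text)).strip().lower()
    for label in LABELS:
        if reply.startswith(label):
            return label
    return "unknown"  # the model replied with something we can't parse


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call, used only for this demo."""
    return " Positive" if "love" in prompt else "negative."


print(classify("I love this phone!", fake_llm))  # positive
print(classify("The battery died in an hour.", fake_llm))  # negative
```

Swapping `fake_llm` for a wrapper around a real model API changes nothing else in the code. That is the point of the workflow: the prompt, not a trained model, specifies the task.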

To explain how these ideas apply to computer vision, consider the task of recognizing cell colonies (which look like white blobs) in a petri dish, as shown in the image below. In the traditional machine learning workflow, using object detection, you would have to label all the cell colonies, train a model, and deploy it. This works, but it’s slow and tedious.

In contrast, with Visual Prompting, you can create a “visual prompt” by pointing out (by painting over) one or two cell colonies in the image, similarly pointing out the background region, and get a working model. It takes only a few seconds to (i) create the visual prompt and (ii) get a result. If you’re not satisfied with the initial model, you can edit the prompt (perhaps by labeling a few more cell colonies), check the results, and keep iterating until you’re satisfied with the model’s performance.

The resulting interaction feels like you’re having a conversation with the system. You’re guiding it by incrementally providing additional data in real time.
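The prompt -> predict -> edit loop can be illustrated with a toy Python sketch. This is not how Landing AI's tool works, since that tool relies on large pretrained vision transformers. Here we swap in a nearest-centroid classifier over raw pixel intensities, which would be far too weak for real images. The helpers `fit_centroids` and `predict_mask` and the 4x4 image are hypothetical. The visual prompt is modeled as a dictionary that maps painted pixel coordinates to labels.

```python
from statistics import mean


def fit_centroids(image, prompt):
    """Average the intensity of the painted pixels for each label in the prompt."""
    by_label = {}
    for (row, col), label in prompt.items():
        by_label.setdefault(label, []).append(image[row][col])
    return {label: mean(values) for label, values in by_label.items()}


def predict_mask(image, centroids):
    """Give every pixel the label whose centroid intensity is closest to it."""
    return [
        [min(centroids, key=lambda label: abs(px - centroids[label])) for px in row]
        for row in image
    ]


# Toy 4x4 grayscale "petri dish": bright pixels are colonies, dark ones background.
image = [
    [0.10, 0.20, 0.90, 0.80],
    [0.10, 0.10, 0.85, 0.20],
    [0.90, 0.15, 0.10, 0.10],
    [0.95, 0.20, 0.10, 0.30],
]

# The "visual prompt": paint one colony pixel and one background pixel.
prompt = {(0, 2): "colony", (0, 0): "background"}
mask = predict_mask(image, fit_centroids(image, prompt))

# Not satisfied? Edit the prompt by painting another pixel, then predict again.
prompt[(3, 3)] = "background"
mask = predict_mask(image, fit_centroids(image, prompt))
```

Each edit to `prompt` is cheap to apply and immediately produces a new mask, which is what makes the interaction feel conversational.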

Since 2017, when the paper that introduced transformers was published, rapid innovation in text processing has transformed natural language models. The paper that introduced vision transformers arrived in 2020, and similarly it led to rapid innovation in vision. Large pretrained models based on vision transformers have reached a point where, given a simple visual prompt that only partially (but unambiguously) specifies a task, they can generalize well to new images.

We’re not the only ones exploring this theme. Exciting variations on Visual Prompting include Meta’s Segment Anything (SAM), which performs image segmentation, and approaches such as Generalist Painter, SegGPT, and prompting via inpainting.

You can watch a livestream of my presentation on Visual Prompting or read Landing AI’s blog post on this topic.

Text prompting reached an inflection point in 2020, when GPT-3 made it easy for developers to write a prompt and build a natural language processing model. I don’t know if computer vision has reached its GPT-3 moment, but we’re getting close. I’m excited by the research that’s moving us toward that moment, and I think Visual Prompting will be one key to getting us there.

Keep learning!

Andrew

News

Data Does Not Want to Be Free

Developers of language models will have to pay for access to troves of text data that they previously got for free.

What’s new: The discussion platform Reddit and question-and-answer site Stack Overflow announced plans to protect their data from being used to train large language models.

How it works: Both sites offer APIs that enable developers to scrape data, like posts and conversations, en masse. Soon they'll charge for access.

- Reddit updated its rules to bar anyone from using its data to train AI models without the company’s permission. CEO Steve Huffman told The New York Times he planned to charge for access with an exception for developers of applications that benefit Reddit users.

- Stack Overflow’s CEO Prashanth Chandrasekar said that using the site’s data to train machine learning models violates the company’s terms of use, which state that developers must clearly credit both the site and users who created the data. The company plans to impose a paywall, with pricing and other details to be determined.

What they’re saying: “Community platforms that fuel LLMs absolutely should be compensated for their contributions so that companies like us can reinvest back into our communities to continue to make them thrive,” Chandrasekar told Wired.

Behind the news: In February, Twitter started charging up to $42,000 monthly for use of its API. That and subsequent API closures are part of a gathering backlash against the AI community’s longstanding practice of training models on data scraped from the web. This use is at issue in ongoing lawsuits. Last week a collective of major news publishers stated that training AI on text licensed from them violates their intellectual property rights.

Why it matters: Although data has always come at a cost, the price of some corpora is on the rise. Discussion sites like Reddit are important repositories of conversation, and text from Stack Overflow has been instrumental in helping to train language models to write computer code. The legal status of existing datasets and models is undetermined, and future access to data depends on legal and commercial agreements that have yet to be negotiated.

We’re thinking: It’s understandable that companies watching the generative AI explosion want a slice of the pie and worry that users might leave them for a chatbot trained on data scraped from their own sites. Still, we suspect that charging for data will put smaller groups with fewer resources at a disadvantage, further concentrating power among a handful of wealthy companies.

Conversational Search, Google Style

Google’s response to Microsoft’s GPT-4-enhanced Bing became a little clearer.

What’s new: Anonymous insiders leaked details of Project Magi, the search giant’s near-term effort to enhance its search engine with automated conversation, The New York Times reported. They described upcoming features, but not the models behind them.

How it works: Nearly 160 engineers are working on the project.

- The updated search engine will serve ads along with conversational responses, which include generating computer code. For example, if a user searches for shoes, the search engine will deliver ads as well as organic links. If a user asks for a Python program, it will generate code followed by an ad.

- Searchalong, a chatbot for Google’s Chrome browser, will respond to queries by searching the web.

- Employees are testing the features internally ahead of a limited public release next month. They’ll be available to one million U.S. users initially and reach 30 million by the end of the year.

- Longer-term plans, which are not considered part of Project Magi, include a new search engine powered by the Bard chatbot.

Beyond search: The company is developing AI-powered features for other parts of its business as well. These include an image generation tool called GIFI for Google Images and a chatbot called Tivoli Tutor for learning languages.

Behind the news: Google has been scrambling to integrate AI features. The company recently combined Brain and DeepMind into a single unit to accelerate AI research and development. In March, rumors emerged that Samsung, which pays Google substantial licensing revenue to use its search engine in mobile devices, was considering a switch to Bing. The previous month, Bard made factual errors during a public demo, which contributed to an 8 percent drop in Google’s share price. These moves followed a December 2022 “code red” response to Microsoft’s plans to upgrade Bing with conversational technology from OpenAI.

Why it matters: When it comes to finding information, conversational AI is a powerful addition to, and possibly a replacement for, web search. Google, as the market leader, can’t afford to wait and see. The ideas Google and its competitors implement in coming months will set the mold for conversational user interfaces in search and beyond.

We’re thinking: Should chatbots be integrated with search or designed as separate products? Microsoft and Google are taking different approaches. Microsoft’s conversational model is deeply integrated with Bing search, while Google's Bard currently stands alone. Given the differences between chat and search, there’s a case to be made for keeping chatbots distinct from search engines.

A MESSAGE FROM DEEPLEARNING.AI

Are you ready to turn your passion into practice? The new AI for Good Specialization will empower you to use machine learning and data science for positive social and environmental impact! Join the waitlist to be the first to enroll.

Everybody Must Get Cloned

Tech-savvy music fans who are hungry for new recordings aren’t waiting for their favorite artists to make them.

What’s new: Social media networks exploded last week with AI-driven facsimiles of chart-topping musicians. A hip-hop song with AI-generated vocals in the styles of Drake and The Weeknd racked up tens of millions of listens before it was taken down. Soundalikes of Britpop stars Oasis, rapper Eminem, and Sixties stalwarts The Beach Boys also captured attention.

How it works: These productions feature songs composed and performed in the old-fashioned way overlaid with celebrity-soundalike vocals generated by voice-cloning models. Some musicians revealed their methods.

- The first step is to acquire between a few minutes and several hours’ worth of audio featuring the singer’s voice in isolation. A demixing model can be used to extract vocal tracks from commercial productions. Popular choices include Demucs3, Splitter, and the web service lalal.ai.

- This dataset is used to train a voice cloning model to replicate the singer’s tone color, or timbre. Popular models include Soft Voice Cloning VITS, Respeecher, and Murf.ai.

- Then it’s time to record a new vocal performance.

- Given the new vocal performance, the voice cloning model generates a vocal track by mapping the timbre of the voice it was trained on onto the performance’s pitch and phrasing.

- The last step is to mix the generated vocal with backing instruments. This generally involves a digital audio workstation such as the free Audacity, Ableton Live, or Logic Pro.
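At its simplest, mixing is a weighted, sample-by-sample sum of time-aligned tracks. The Python sketch below illustrates that idea under a few assumptions. It treats tracks as mono lists of floating-point samples in the range [-1.0, 1.0], and the `mix` function and its gain defaults are hypothetical. A real digital audio workstation adds much more, such as equalization, compression, panning, and effects.

```python
def mix(vocal, backing, vocal_gain=1.0, backing_gain=0.8):
    """Mix two time-aligned mono tracks into one, sample by sample."""
    if len(vocal) != len(backing):
        raise ValueError("tracks must be aligned and the same length")
    mixed = []
    for v, b in zip(vocal, backing):
        sample = vocal_gain * v + backing_gain * b
        # Hard-clip to the valid range so loud passages don't overflow.
        mixed.append(max(-1.0, min(1.0, sample)))
    return mixed


print(mix([0.5, -0.2, 0.9], [0.5, 0.25, 0.5]))
```

Hard clipping is the crudest way to handle overflow (the third sample here gets clipped). In practice you would lower the gains so the sum stays in range.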

Behind the news: The trend toward AI emulations of established artists has been building for some time. In 2021, Lost Tapes of the 27 Club used an unspecified AI method to produce music in the style of artists who died young including Jimi Hendrix, Kurt Cobain, and Amy Winehouse. The previous year, OpenAI demonstrated Jukebox, a system that generated recordings in the style of many popular artists.

Yes, but: The record industry is moving to defend its business against such audio fakery (or tributes, depending on how you want to view them). Universal Music Group, which controls about a third of the global music market, recently pushed streaming services to block AI developers from scraping musical data or posting songs in the styles of established artists.

Why it matters: Every new generation of technology brings new tools to challenge the record industry’s control over music distribution. The 1970s brought audio cassettes and the ability to cheaply copy music, the 1980s brought sampling, and the 1990s and 2000s brought remixes and mashups. Today AI is posing new challenges. Not everyone in the music industry is against these AI copycats: The electronic artist Grimes said she would share royalties with anyone who emulates her voice, and Oasis’ former lead singer apparently enjoyed the AI-powered imitation.

We’re thinking: Musicians who embrace AI will open new creative pathways, but we have faith that traditional musicianship will endure. After all, photography didn’t kill painting. Just as photography pushed painters toward abstraction, AI may spur conventional musicians in exciting new directions.

Image Generators Copy Training Data

We know that image generators create wonderful original works, but do they sometimes replicate their training data? Recent work found that replication does occur.

What's new: Gowthami Somepalli and colleagues at University of Maryland devised a method that spots instances of image generators copying from their training sets, from entire images to isolated objects, with minor variations.

Key insight: A common way to detect similarity between images is to produce embeddings of them and compute the dot product between embeddings. High dot product values indicate similar images. However, while this method detects large-scale similarities, it can fail to detect local ones. To detect a small area shared by two images, one strategy is to split apart their embeddings, compute the dot product between the pieces, and look for high values.
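The key insight can be illustrated with a minimal Python sketch. The vectors below are tiny hand-made stand-ins for real image embeddings and are not normalized. The helper names `dot`, `split`, and `chunked_score` are hypothetical. The sketch shows only that a strong match in one chunk can be hidden in the full dot product when the other chunks disagree.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def split(vec, n_chunks):
    """Split a vector into n_chunks evenly sized, contiguous pieces."""
    if len(vec) % n_chunks:
        raise ValueError("vector length must be divisible by n_chunks")
    size = len(vec) // n_chunks
    return [vec[i * size:(i + 1) * size] for i in range(n_chunks)]


def chunked_score(a, b, n_chunks):
    """Largest dot product between corresponding chunks of a and b."""
    return max(dot(ca, cb) for ca, cb in zip(split(a, n_chunks), split(b, n_chunks)))


# Two "embeddings" that match in their first chunk and disagree elsewhere.
a = [1, 1, 1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1]

print(dot(a, b))               # -2: the global score says "dissimilar"
print(chunked_score(a, b, 3))  # 2: the first chunk is a strong local match
```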

How it works: The authors (i) trained image generators, (ii) generated images, and (iii) produced embeddings of those images as well as the training sets. They (iv) broke the embeddings into chunks and (v) detected duplications by comparing embeddings of the generated images with those of the training images.

- First the authors looked for models whose embeddings were effective in detecting duplications. They tested 10 pretrained computer vision architectures on a group of five datasets for image retrieval — a task selected because the training sets include duplications — and five synthetic datasets that contain replications. The three models whose embeddings revealed duplications most effectively were Swin, DINO, and SSCD, all of which were pretrained on ImageNet.

- Next they generated images. They trained a diffusion model on images drawn from datasets of flowers and faces, using training subsets of varying sizes: smaller (100 to 300 examples), medium (roughly 1,000 to 3,000), and larger (around 8,200).

- Swin, DINO, and SSCD produced embeddings of the images in the training set and generated images. The authors split these embeddings into many smaller, evenly sized chunks. To calculate the similarity scores, they computed the dot product between corresponding pairs of chunks (that is, the nth chunk representing a training image and the nth chunk representing a generated image). The score was the maximum value of the dot products.

- To test their method under conditions closer to real-world use, the authors performed similar experiments on a pretrained Stable Diffusion model. They generated 9,000 images from 9,000 captions chosen at random from the Aesthetics subset of LAION. They produced embeddings of the generated images and of the LAION Aesthetics images, split these embeddings into chunks, and compared the chunks’ dot products.

Results: For each generated image, the authors found the 20 most similar images in the training set (that is, those whose fragmented embeddings yielded the highest dot products). Inspecting those images, they determined that the diffusion model sometimes copied elements from the training set. They plotted histograms of the similarity between images within a training set and the similarity between training images and generated images. The more the two histograms overlapped, the fewer replications they expected to find. Both histograms and visual inspection indicated that models trained on smaller datasets produced more replications. However, on tests with Stable Diffusion, 1.88 percent of generated images had a similarity score greater than 0.5. Above that threshold, the authors observed obvious replications, despite that model’s pretraining on a large dataset.
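The retrieval and thresholding steps can be sketched the same way. This Python sketch assumes embeddings are plain lists of floats. It scores each pair as the maximum dot product over corresponding chunks, as described above. The helpers `top_k_matches` and `replication_rate` are hypothetical, and the toy vectors stand in for real Swin, DINO, or SSCD embeddings.

```python
def chunked_score(a, b, n_chunks):
    """Max dot product over corresponding chunks (nth chunk of a vs. nth chunk of b)."""
    size = len(a) // n_chunks
    return max(
        sum(x * y for x, y in zip(a[i * size:(i + 1) * size], b[i * size:(i + 1) * size]))
        for i in range(n_chunks)
    )


def top_k_matches(generated, training, n_chunks, k):
    """Indices of the k training embeddings most similar to one generated embedding."""
    scores = [chunked_score(generated, t, n_chunks) for t in training]
    return sorted(range(len(training)), key=scores.__getitem__, reverse=True)[:k]


def replication_rate(generated_set, training, n_chunks, threshold):
    """Fraction of generated embeddings whose best training match exceeds threshold."""
    flagged = sum(
        1
        for g in generated_set
        if max(chunked_score(g, t, n_chunks) for t in training) > threshold
    )
    return flagged / len(generated_set)


training = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.2, 0.1, 0.1, 0.2]]
generated_set = [[0.9, 0.1, 0.0, 0.0], [0.3, 0.3, 0.3, 0.3]]

print(top_k_matches(generated_set[0], training, n_chunks=2, k=2))  # [0, 2]
print(replication_rate(generated_set, training, n_chunks=2, threshold=0.5))  # 0.5
```

In the article's setting, `k` would be 20 and `threshold` would be 0.5. For scale, 1.88 percent of the 9,000 Stable Diffusion images works out to roughly 169 images above that threshold.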

Why it matters: Does training an image generator on artworks without permission from the copyright holder violate the copyright? If the image generator literally copies the work, then the answer would seem to be “yes.” Such issues are being tested in court. This work moves the discussion forward by proposing a more sensitive measure of similarity between training and generated images.

We're thinking: Picasso allegedly said that good artists borrow while great artists steal. . . .

Data Points

Elon Musk founded X.AI, presumably an AI company

X.AI Corp was incorporated in Nevada last month and Musk is its only listed director. No further details are available. (The Wall Street Journal)

AI-powered technique fixes Python bugs

A developer built Wolverine, a program based on GPT-4 that automatically finds and fixes bugs in real time. The code is available on GitHub. (Ars Technica)

Startup Humane showcased upcoming wearable AI assistant

A leaked clip from a TED talk by Imran Chaudhri, cofounder of AI startup Humane, revealed a demo of a wearable device that performs the functions of a smartphone, a voice assistant, and possibly other equipment. It handles tasks such as real-time speech translation and audio recaps. (Inverse)

Google merged its two main AI research units

The merged organization, called Google DeepMind, combines Google Brain and DeepMind teams. The reorg is expected to accelerate progress on the tech giant’s AI projects. (The Wall Street Journal)

Magazine published AI-generated “interview” with Michael Schumacher

German magazine Die Aktuelle promoted an exclusive interview with the former Formula 1 driver, then revealed that the conversation was produced by an AI chatbot. (ESPN)

Snapchat upgraded its AI chatbot

The chatbot, called My AI, is now able to generate images, recommend lenses, and suggest nearby places to visit. (Reuters)

Stability AI launched an open source language model

The company behind Stable Diffusion released StableLM, a language model intended to compete with ChatGPT. The company shared few details about the model. The alpha release comes in 3-billion- and 7-billion-parameter sizes. (Bloomberg and Stability.AI)

Microsoft is designing an AI chip

The chip, called Athena, has been in development since 2019. It’s expected to reduce costs associated with chips from external suppliers. (Silicon Angle)

Independent researchers released an alternative to ChatGPT

Open Assistant is an open source alternative to commercial chatbots. Organized by the German nonprofit LAION, the development effort aims to democratize access to large language models. Try it here. (Open Assistant)