Dear friends,

Last week, Facebook’s parent company Meta released a demo of Galactica, a large language model trained on 48 million scientific articles. Two days later, amid controversy regarding the model’s potential to generate false or misleading articles, the company withdrew it.

Is Galactica dangerous? How should researchers, as well as the broader AI community, approach such developments?

Michael Black, director of the Max Planck Institute for Intelligent Systems, raised concern about Galactica’s potential for harm by generating seemingly authoritative scientific papers that are factually bonkers. Meta chief AI scientist Yann LeCun vigorously defended the model. He pointed out that, despite worries that people might misuse large language models (LLMs), it largely hasn’t happened.

At the risk of offending both sides, let me share my take.

- I support the Galactica researchers. Their scientific work on large language models is technically interesting and impressive. Their model does well on tasks such as mathematical reasoning and answering multiple-choice questions.

- When a technology shows potential to cause significant harm, it’s important to carefully weigh the likely benefits against the likely harm. One problem with the way Galactica was released is that we don’t yet have a robust framework for understanding the balance of benefit versus harm for this model, and different people have very different opinions. Reading through the paper, I see potential for exciting use cases. I also see risk of large-scale fakery that could cause harm. While I support the technical work, I would prefer that the demo had been released only after a more thorough assessment.

- Prior to a careful analysis of benefit versus harm, I would not recommend “move fast and break things” as a recipe for releasing any product with potential for significant harm. I would love to see more extensive work — perhaps through limited-access trials — that validates the product’s utility to third parties, explores and develops ways to ameliorate harm, and documents this thinking clearly.

- That said, I would also love to see less vitriol toward researchers who are trying to do their best. People will differ on the best path forward, and all of us sometimes will be right and sometimes will be wrong. I believe the Meta researchers are trying to do their best. Whether we agree or disagree with their approach, I hope we’ll treat them with respect.

- Part of the disagreement likely stemmed from widespread distrust of Meta, where a focus on maximizing user engagement has contributed to social polarization and spread of disinformation. If a lesser-known or more-trusted company had released Galactica, I imagine that it would have had more leeway. For instance, Stability AI released its Stable Diffusion text-to-image model with few safeguards. The company faced little criticism, and so far the model has spurred great creativity and little harm. I don’t think this is necessarily an unfair way to approach companies. A company’s track record does matter. Considering the comparatively large resources big companies can use to drive widespread awareness and adoption of new products, it’s reasonable to hold them to a higher standard.

- The authors withdrew the model shortly after the controversy arose. Kudos to them for acting in good faith and responding quickly to the community’s concerns.

When it comes to building language models that generate more factually accurate output, the technical path forward is not yet clear. LLMs are trained to maximize the likelihood of text in their training set. This leads them to generate text that sounds plausible, but an LLM that makes up facts can also perform well on this training objective.
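A toy example makes the point concrete. The sketch below is a hypothetical illustration, a count-based bigram model rather than a real LLM, trained on a made-up two-sentence corpus. Maximum-likelihood training gives a false recombination of the training text exactly the same probability as a true sentence.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of two true statements.
corpus = ["paris is in france", "rome is in italy"]

def train_bigram(sentences):
    """Maximum-likelihood bigram model: P(next | prev) = count(prev, next) / count(prev)."""
    counts = defaultdict(Counter)
    for s in sentences:
        tokens = ["<s>"] + s.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return {prev: {nxt: c / sum(nexts.values()) for nxt, c in nexts.items()}
            for prev, nexts in counts.items()}

def sentence_prob(model, sentence):
    """Probability the model assigns to a whole sentence (0.0 if any bigram is unseen)."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        p *= model.get(prev, {}).get(nxt, 0.0)
    return p

model = train_bigram(corpus)
print(sentence_prob(model, "paris is in france"))  # 0.25 (true)
print(sentence_prob(model, "paris is in italy"))   # 0.25 (false, yet equally likely)
```

Nothing in the objective distinguishes France from Italy here; only the statistics of the text matter. Real LLMs are vastly more capable, but they are trained on the same kind of likelihood objective.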

Some engineers (including Galactica’s team) have proposed that LLMs could be an alternative to search engines. For example, instead of using search to find out the distance to the Moon, why not pose the question as a prompt to a language model and let it answer? Unfortunately, the maximum-likelihood objective is not well aligned with the goal of providing factually accurate information. To make LLMs better at conveying facts, research remains to be done on alternative training objectives or, more likely, model architectures that optimize for factual accuracy rather than likelihood.

Whether a tool like Galactica will be more helpful or harmful to society is not yet clear to me. There will be bumps in the rollout of any powerful technology. The AI community has produced racist algorithms, toxic chatbots, and other problematic systems, and each was a chance to learn from the incident and get better. Let’s continue to work together as a community, get through the bumps with respect and support for one another, and keep building software that helps people.

Keep learning!

Andrew

News

Creatives Fight Back

Artists are rebelling against AI-driven imitation.

What’s new: DeviantArt, an online community and marketplace where artists display and sell digital art, launched DreamUp, a text-to-image generator that aims to help artists thwart attempts to imitate their styles or works.

How it works: DreamUp is a vanilla implementation of the open source Stable Diffusion text-to-image generator.

- Artists can fill out a form that adds their name, aliases, and named creations to a list of blocked prompt phrases.

- DreamUp labels all output images as AI-generated. Users who upload the system’s output to DeviantArt are required to credit artists whose work influenced it. DeviantArt users can report images that they believe imitate an artist’s style. In unclear cases, DeviantArt will ask the artist in question to judge.

- DeviantArt offers five free prompts a month. Members, who pay up to $14.95 for a monthly subscription, get 300 prompts a month or pay up to $0.20 per prompt.
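DeviantArt hasn’t described how DreamUp matches prompts against the blocklist. A minimal sketch of one simple approach, case-insensitive substring matching, follows; the blocked names and titles are hypothetical.

```python
import re

# Hypothetical entries an artist might submit: a name, an alias, and a named work.
BLOCKED_PHRASES = {"jane example", "j. example", "the glass orchard"}

def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace so trivial variations still match."""
    return re.sub(r"\s+", " ", text.lower()).strip()

def is_blocked(prompt: str, blocked=BLOCKED_PHRASES) -> bool:
    """Return True if any blocked phrase appears anywhere in the prompt."""
    p = normalize(prompt)
    return any(normalize(phrase) in p for phrase in blocked)

print(is_blocked("a castle in the style of Jane   Example"))  # True
print(is_blocked("a castle at sunset"))                       # False
```

Substring matching is crude: it can over-block a longer phrase that happens to contain a blocked name, and it is easy to evade with misspellings, so a blocklist alone is unlikely to be a complete defense.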

Opting out: Stable Diffusion was trained on images scraped from the web including works from DeviantArt. Upon its release, some artists objected to the model’s ability to replicate their style via prompts like, “in the style of ____.”

- DeviantArt opened fresh wounds upon releasing DreamUp by offering members the opportunity to add HTML tags and HTTP headers specifying that their work is not to be included in future training datasets, but only if they opted in.

- Members objected to having to opt in to mark their works as off limits to AI developers. DeviantArt responded by adding the tags to all uploaded images by default.

- It’s not clear what consequences would follow if an AI developer were to train a learning algorithm on such tagged images.
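Honoring such tags is voluntary on the scraper’s side. A minimal sketch of how a well-behaved scraper could check for them, assuming the opt-out takes the form of the directives `noai` and `noimageai` in a `<meta name="robots">` tag or an `X-Robots-Tag` HTTP header (our assumption about the exact format):

```python
from html.parser import HTMLParser

# Assumption: these directive values signal "do not use for AI training".
OPT_OUT_DIRECTIVES = {"noai", "noimageai"}

def parse_directives(value):
    """Split a comma-separated directive string into a set of lowercase tokens."""
    return {d.strip().lower() for d in (value or "").split(",") if d.strip()}

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.directives |= parse_directives(attrs.get("content"))

def opted_out(html, headers):
    """True if the page's robots meta tag or X-Robots-Tag header carries an opt-out directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # HTTP header names are case-insensitive
            directives |= parse_directives(value)
    return bool(directives & OPT_OUT_DIRECTIVES)

page = '<html><head><meta name="robots" content="noai, noimageai"></head></html>'
print(opted_out(page, {}))                                # True
print(opted_out("<p>art</p>", {"X-Robots-Tag": "noai"}))  # True
print(opted_out("<p>art</p>", {}))                        # False
```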

Behind the news: AI’s increasing ability to mimic the styles of individual artists has become a flashpoint between engineers and artists. When acclaimed artist Kim Jung Gi died in early October, within one day a former game developer released a model trained to produce works in his style. While the developer justified the work “as an homage,” responses included not only criticism and insults but also threats of violence. Such comments, one commenter noted, were part of a recent rise in “extremely violent rhetoric directed at the AI art community.”

Why it matters: Generative AI is attracting attention and funding, but the ethics of training and using such systems are still coming into focus. For instance, lawyers are preparing to argue that GitHub’s Copilot code-generation system, which was trained on open-source code, violates open-source licenses by failing to credit coders for their work. The outcome may resolve some uncertainty about how to credit a generative model’s output, but it seems unlikely to address issues of permission and compensation.

We’re thinking: Artists who have devoted years to developing a distinctive style are justifiably alarmed to see machines crank out imitations of their work. Some kind of protection against copycats is only fair. For the time being, though, the limits of fair use in training and using AI models remain an open question.

Built to Scale

A new computing cluster delivers more bang per chip.

What’s new: Cerebras, one of several startups vying to supply the market for specialized AI chips, unveiled Andromeda, a supercomputer based on its processors. Unlike conventional clusters, which incur data bottlenecks as processors are added, the system’s processing speed rises linearly with additional processors.

How it works: Andromeda comprises 16 Cerebras CS-2 systems, each built around a Wafer Scale Engine chip. Each chip holds 850,000 processing cores (more than 100 times the number found on an Nvidia A100) on a single square of silicon that measures 21.5 centimeters on a side.

- The cluster can execute more than 1 exaflop (a quintillion floating-point operations per second), which is comparable to the world’s fastest supercomputer, Oak Ridge National Laboratory’s Frontier.

- A memory extension called MemoryX stores model weights off-system and streams them to the processors as needed.

- Up to 16 users can access Andromeda simultaneously, and they can specify how many of the system’s 16 processors they wish to use.

- Several companies are using Andromeda for research, including rival chip designer AMD and natural language processing startup Jasper AI.

Speed tests: Scientists at Argonne National Laboratory used the system to train GenSLM language models in several sizes. Increasing the number of processors from one to four boosted throughput nearly linearly while training models of 123 million parameters and 1.3 billion parameters. Going from one to four chips also cut the smaller model’s training time from 4.1 to 2.4 hours and the larger model’s training time from 15.6 to 10.4 hours.
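The reported training times can be turned into speedup and parallel-efficiency figures with simple arithmetic (speedup = time on one chip ÷ time on four chips; efficiency = speedup ÷ 4):

```python
# Training times in hours reported above: (one chip, four chips).
reported = {
    "123M-parameter model": (4.1, 2.4),
    "1.3B-parameter model": (15.6, 10.4),
}

def scaling(t_one, t_n, n):
    """Return (speedup, parallel efficiency) for going from 1 to n processors."""
    speedup = t_one / t_n
    return speedup, speedup / n

for name, (t1, t4) in reported.items():
    speedup, efficiency = scaling(t1, t4, 4)
    print(f"{name}: {speedup:.2f}x speedup, {efficiency:.0%} of linear scaling")
```

The end-to-end speedups come to roughly 1.7x and 1.5x, well short of the 4x that perfectly linear scaling would give, so the “nearly linear” claim presumably refers to throughput rather than total training time, which can include work that doesn’t speed up with more chips.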

Behind the news: As interest rates rise, AI chip startups are facing headwinds in raising enough capital to support their often huge expenses.

- Texas-based Mythic, which focused on analog chips for AI applications, ran out of money earlier this month.

- Graphcore, based in the UK, lost $1 billion in value in October after Microsoft canceled a lucrative deal.

- Also in October, Israeli chip designer Habana Labs, which Intel acquired in 2019, laid off 10 percent of its workforce.

Why it matters: Neural networks have breached the 1-trillion-parameter mark, and models one or two orders of magnitude larger may be close at hand. More efficient compute clusters could train those models more quickly and consume less energy doing it.

We’re thinking: For most current machine learning models, the usual GPUs should be fine. Cerebras specializes in models and compute loads too large for a handful of GPUs in a single server — an interesting business as model sizes balloon.

A MESSAGE FROM DEEPLEARNING.AI

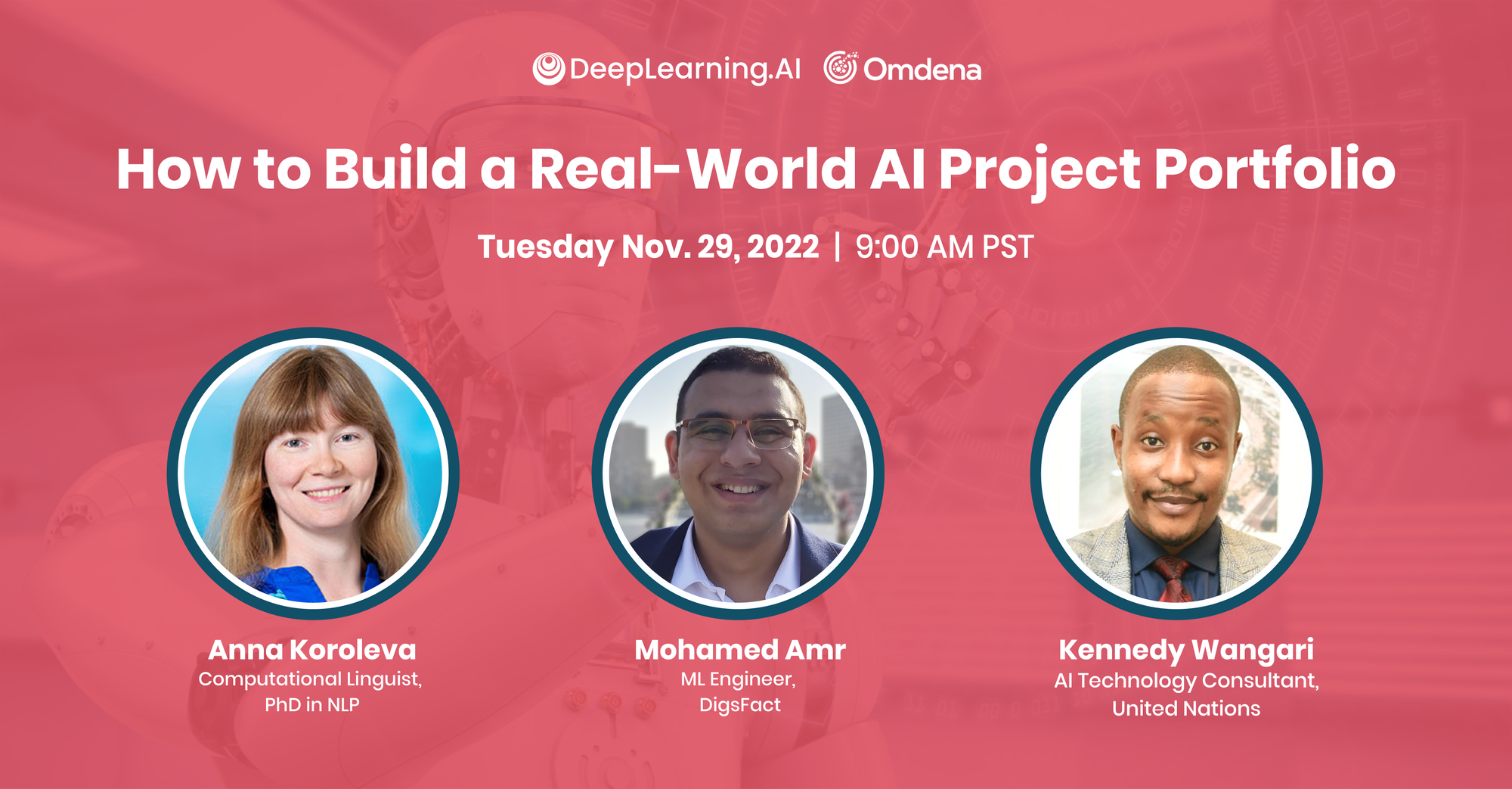

When should you start building an AI project portfolio? What kinds of projects should it include? Get answers from AI practitioners during “How to Build a Real-World AI Project Portfolio” on November 29, 2022, at 9 a.m. Pacific Standard Time. Register now!

Champion Model Is No Go

A new algorithm defeated a championship-winning Go model using moves that even a middling human player could counter.

What’s new: Researchers at MIT, UC Berkeley, and the Fund for Alignment Research trained a model to defeat KataGo, an open source Go-playing system that has beaten top human players.

How it works: The authors’ system tricks KataGo into deciding prematurely that it has won, causing it to end a game when the authors’ model is in a winning position.

- The authors trained a convolutional neural network to play Go using a modified version of a reinforcement learning method commonly used to train game-playing models. In the usual approach, the model plays itself and learns from all moves. In the authors’ version, the model played against a fixed KataGo model and learned only from its own moves, learning to exploit holes in KataGo’s strategy rather than becoming a conventionally savvy player.

- The authors’ model forecasted its next moves using its own model, and it forecasted KataGo’s likely responses to those moves using KataGo’s model. It combined the forecasts to determine its next action. (KataGo can be configured to perform similar forecasting, but the authors didn’t use this capability while training their model.)

- During training, once the model had won 50 percent of games, the authors increased the difficulty by pitting it against a version of KataGo that had been trained longer.
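The key move in the authors’ lookahead is predicting the opponent’s replies with the opponent’s own model rather than assuming it plays perfectly. The toy sketch below illustrates that idea under our own simplifications: it swaps Go for a take-away game (players alternately remove 1 to 3 stones, and whoever takes the last stone wins) and swaps Monte Carlo tree search for an exhaustive depth-limited search. The victim policies are hypothetical.

```python
from typing import Callable, List

# A victim policy maps a pile size to the number of stones it will take.
VictimPolicy = Callable[[int], int]

def legal_moves(pile: int) -> List[int]:
    return [k for k in (1, 2, 3) if k <= pile]

def adversary_value(pile: int, adversary_to_move: bool,
                    victim: VictimPolicy, depth: int) -> float:
    """Value of a position for the adversary: 1.0 win, 0.0 loss, 0.5 unknown."""
    if pile == 0:
        # Whoever moved last took the final stone and won.
        return 0.0 if adversary_to_move else 1.0
    if depth == 0:
        return 0.5  # search horizon reached; no verdict
    if adversary_to_move:
        # Adversary's own nodes: pick the best move for itself.
        return max(adversary_value(pile - k, False, victim, depth - 1)
                   for k in legal_moves(pile))
    # Victim's nodes: predict the reply with the victim's own policy,
    # instead of assuming a worst-case (minimax) opponent.
    return adversary_value(pile - victim(pile), True, victim, depth - 1)

def choose_move(pile: int, victim: VictimPolicy, depth: int = 20) -> int:
    return max(legal_moves(pile),
               key=lambda k: adversary_value(pile - k, False, victim, depth - 1))

# Hypothetical victims: one with an exploitable habit, one that plays perfectly.
always_take_one: VictimPolicy = lambda pile: 1
perfect: VictimPolicy = lambda pile: pile % 4 or 1

print(adversary_value(4, True, perfect, 20))          # 0.0
print(adversary_value(4, True, always_take_one, 20))  # 1.0
```

Against a perfect opponent, four stones with the adversary to move is a lost position; once the search assumes the victim always takes one stone, the same position becomes a forced win. In miniature, this is how modeling the victim’s actual responses can surface lines of play that look hopeless against a perfect opponent.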

Results: The model’s winning strategy involved taking control of a corner of the board and adding a few easy-to-capture pieces outside that area.

- This strategy enabled a version of the model that ran 600 search visits per move (each visit explores one simulated line of play) to win more than 99 percent of games against a KataGo that used no search (which ranks among the top 100 European players).

- A version that ran 4,096 search visits per move won 54 percent of games against a KataGo that ran 64 search visits per move (which ranks among the top 20 players worldwide).

- The model lost to a naive human player who hadn’t played Go prior to undertaking the research project.

- However, the naive player wasn’t able to defeat KataGo using the model’s strategy. This suggests that the strategy was less critical to the model’s victory than exploiting specific flaws in KataGo.

Why it matters: This work is a helpful reminder that neural networks are brittle, particularly to adversarial attacks that take advantage of a specific system’s idiosyncrasies. Even in the limited context of a game board, a model that achieves superhuman performance can be defeated by a simple — but unusual — strategy.

We’re thinking: AI practitioners perform exploratory data analysis and address potential attacks, but vulnerabilities always remain. Approaches like the one in this paper offer a way to find them.

When Trees Outdo Neural Networks

While neural networks perform well on image, text, and audio datasets, they lag behind decision trees and their variants on tabular data. New research looked into why.

What’s new: Léo Grinsztajn, Edouard Oyallon, and Gaël Varoquaux at Inria Saclay Centre and Sorbonne University trained a variety of neural networks and tree models on tabular datasets. Performance on their tabular data learning benchmark revealed dataset characteristics that favor each class of models.

Key insight: Previous work found that no single neural network architecture performed best on a variety of tabular datasets, but a tree-based approach performed better than any neural network on most of them. Training and testing different models on many permutations of the data can reveal principles to guide the choice of architecture for any given dataset.

How it works: The authors compiled datasets, trained a variety of models (using a variety of hyperparameters), and evaluated their performance. Then they applied transformations to the data, retrained the models, and tested them again to see how the transformations affected model performance.

- The authors collected 45 tabular datasets covering both classification problems, such as predicting whether electricity prices will rise or fall, and regression problems, such as estimating housing prices. Each dataset comprised more than 3,000 real-world examples and resisted simple modeling (that is, logistic or linear regression models trained on them performed at least 5 percent worse than a ResNet or gradient boosting trees).

- The authors trained tree-based models (random forests, gradient boosting machines, XGBoost, and various ensembles) and deep-learning-based models (vanilla neural network, ResNet, and two Transformer-based models). They trained each model 400 times, searching randomly through a predefined hyperparameter space. They evaluated classification models by test-set accuracy and regression models by R² (the coefficient of determination), which measures the proportion of variance in the ground-truth targets that a model’s predictions explain.

- In one transformation of the data, they used a random forest model to rank the importance of a dataset’s features and trained models on various proportions of informative versus uninformative features. In another, they smoothed labels like 0 or 1 into labels like .2 or .8.
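The evaluation loop described above, random hyperparameter search scored by R² = 1 − SS_res/SS_tot, can be sketched in a few lines. This is a minimal illustration under our own assumptions: a hypothetical one-dimensional k-nearest-neighbor regressor, a one-parameter search space, and toy data stand in for the paper’s models, search spaces, and datasets.

```python
import random

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot (1.0 means a perfect fit)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def knn_predict(train_x, train_y, x, k):
    """Hypothetical 1-D k-nearest-neighbor regressor: average the k closest targets."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k

def random_search(score_fn, space, n_trials=400, seed=0):
    """Score n_trials randomly sampled configurations; return (best_score, best_config)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        config = {name: sample(rng) for name, sample in space.items()}
        score = score_fn(config)
        if best is None or score > best[0]:
            best = (score, config)
    return best

# Toy data (hypothetical): y = x^2, even x for training, odd x for validation.
train_x = list(range(0, 40, 2))
train_y = [x * x for x in train_x]
val_x = list(range(1, 40, 2))
val_y = [x * x for x in val_x]

def validation_r2(config):
    preds = [knn_predict(train_x, train_y, x, config["k"]) for x in val_x]
    return r2_score(val_y, preds)

space = {"k": lambda rng: rng.randint(1, 10)}  # hypothetical search space
best_score, best_config = random_search(validation_r2, space, n_trials=400)
print(best_config, round(best_score, 4))
```

Giving every model the same fixed random-search budget is one way to keep tuning effort comparable across model families.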

Results: Averaged across all tasks, the best tree models performed 20 percent to 30 percent better than the best deep learning models. ResNets fell even farther behind trees and transformers as the number of uninformative features rose. In another experiment, training on smoothed labels degraded the performance of trees more than that of neural networks, which suggests that tree-based methods are better at learning irregular mappings from features to labels.
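The text describes the label transformation only as turning labels like 0 or 1 into values like .2 or .8. One way to produce that effect, and our assumption about the mechanism rather than a confirmed detail, is Gaussian kernel smoothing: each label is replaced by a distance-weighted average of all labels, which makes the mapping from features to labels smoother and more regular.

```python
import math

def gaussian_smooth_labels(xs, ys, length_scale):
    """Replace each label with a Gaussian-kernel-weighted average of all labels
    (Nadaraya-Watson smoothing). Larger length_scale gives smoother targets."""
    smoothed = []
    for xi in xs:
        weights = [math.exp(-((xi - xj) ** 2) / (2 * length_scale ** 2)) for xj in xs]
        total = sum(weights)
        smoothed.append(sum(w * y for w, y in zip(weights, ys)) / total)
    return smoothed

xs = [0.0, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 1]
print([round(v, 2) for v in gaussian_smooth_labels(xs, ys, length_scale=1.0)])
# [0.08, 0.32, 0.68, 0.92]
```

For real tabular data with many features, the distance would be computed over feature vectors (for example, Euclidean distance on standardized features); the one-dimensional version keeps the sketch short.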

Why it matters: Deep learning isn’t the best approach to all datasets and problems. If you have tabular data, give trees a try!

We’re thinking: The authors trained their models on datasets of 10,000 or 50,000 training examples. Smaller or larger datasets may have yielded different results.