Dear friends,

We just launched a Data-Centric AI Resource Hub to help you improve the performance of AI systems by systematically engineering the underlying data. It offers new articles by Nvidia director of machine learning research Anima Anandkumar, Stanford computer science professor Michael Bernstein, and Google Brain director of engineering D. Sculley. It also includes talks from the NeurIPS Data-Centric AI Workshop that was held in December. We’ll be adding more helpful articles and videos in coming months.

Working effectively with human labelers is a key part of Data-Centric AI. My friend Michael Bernstein is an expert in human-computer interface (HCI), a discipline that offers many insights for empowering labelers. His article explains some of the most important ones.

For example, given a task in computer vision, natural language processing, or speech recognition, it’s common to ask several crowdsourced labelers to annotate the same example and take the mean or majority-vote label. Many clever ideas have been proposed to improve the labeling process, such as testing labeler accuracy, developing novel voting mechanisms, and routing examples to labelers in sophisticated ways.

|

Surprisingly, Michael has found that it's often better to invest in hiring and training a few annotators than to focus on improving the process. Alternatively, the best process may be one that enables you to build a small team of skilled labelers.

Working with a smaller, committed team also makes it easier to discover and fix ambiguities in your labeling instructions. Michael writes, “When something goes wrong, your reactions should be, ‘What did I do wrong in communicating my intent?,’ not, ‘Why weren’t they paying attention?’”

Every machine learning engineer and data scientist can take advantage of Data-Centric AI techniques. And, because the data-centric approach changes the workflow of AI development, software engineers and product managers can also benefit. So please visit the Data-Centric AI Resource Hub, and tell your friends and colleagues about it, too.

Keep learning!

Andrew

News

|

Fast and Daring Wins the Race

Armchair speed demons have a new nemesis.

What’s new: Peter Wurman and a team at Sony developed Gran Turismo Sophy (GT Sophy), a reinforcement learning model that defeated human champions of Gran Turismo Sport, a PlayStation game that simulates auto races right down to tire friction and air resistance.

Key insight: It’s okay to bump another car while racing (as in the video above), but there’s a thin and subjective line between innocuous impacts and those that would give the offender an advantage. In official Gran Turismo Sport competitions — as in real-world races — a human referee makes these calls and penalizes errant drivers. A reinforcement learning algorithm can model such judgments by assigning a cost to each collision, but it must be tuned to avoid an adverse effect on performance: Too high a penalty and drivers become timid, too low and they become dangerous. Penalizing common situations in which a driver typically would be judged at fault, such as rear-ending, side-swiping, and colliding on a curve, should help a neural network learn to drive boldly without ramming its opponents to gain an advantage.

How it works: Given information about the car and its environment, a vanilla neural network decided how to steer and accelerate. The authors trained the network on three virtual tracks and in custom scenarios, such as the slingshot pass, that pitted the model against itself, previous iterations of itself, and the in-game AI.

- Ten times a second, a vanilla neural network decided how much to accelerate or brake and how much to turn left or right depending on several variables: the car’s velocity, acceleration, orientation, weight on each tire, position, the data points that described the environment ahead, the positions of surrounding cars, whether it was colliding with a wall or another car, and whether it was off-course.

- During training, a reinforcement learning algorithm rewarded the model for traveling and for gaining ground on opponents. It applied a penalty for skidding, touching a wall, allowing an opponent to gain ground, going off-course, and colliding with an opponent. It further penalized the typical at-fault scenarios.

- A separate vanilla neural network, given the information about the car and environment, learned to predict the future reward for taking a given action.

- The first network learned to take actions that maximized the predicted future reward.

Results: In time trials, GT Sophy achieved faster lap times than three of the world’s top Gran Turismo Sport drivers. In addition, a team of four GT Sophys faced off against four of the best human drivers in two sets of three head-to-head races held months apart. Points were awarded based on the cars’ final positions: 10 points for first place, 8 for second, 6 for third, and from 5 to 1 point for the remaining positions. The human team won the first set 86 to 70. Then the developers increased the model size and changed some rewards and features, among other tweaks, and the GT Sophy team won the second set 104 to 52.

Why it matters: Unlike board games like Chess and Go in which learning algorithms have beaten human champions, winning a car race requires making complex decisions at high speed while tracing a fine line between nudging and disabling opponents. That said, there’s still a significant gap between doing well in even an exceptionally realistic video game and driving a real car.

We’re thinking: Autonomous driving requires perception, planning, and control. We have little doubt that the latest algorithms can outperform most human drivers in control, but a substantial gap remains in perception and planning.

|

Remix Master

Music generated by learning algorithms got a major push with Apple’s acquisition of a startup that makes automated mash-ups.

What’s new: Apple purchased AI Music, a London startup whose software generates new music from existing recordings, Bloomberg reported.

How it works: Founded in 2016, AI Music reshapes prerecorded music according to user input. Among its projects prior to the acquisition:

- The company developed a platform that analyzes data about users’ listening preferences and adjusts background music in advertisements accordingly, for instance by altering its style.

- It partnered with social network Hornet to generate custom soundtracks for user videos based on a video's content, its existing soundtrack, and a user's choice of style.

- An app called Ossia allowed users to mix one song’s vocals with another’s instrumental backing and offered pre-generated remixes in various moods and styles.

- The company’s CEO previously said that its technology could modify songs in real time according to variables such as a user’s walking pace or the time of day.

Behind the news: AI Music is one of many industrial-scale efforts to generate music in real time, complementing impressive research in the field like MuseNet. (You can read an interview with MuseNet creator Christine Payne here).

- Boomy is an app that can generate a song in a selected style in 30 seconds. It selects chords and melodies automatically. Users can tinker with the result and upload the results to Spotify.

- SAM is a neural network trained on popular songs that can generate both music and lyrics. After generating multiple songs from user input, it compares its creations to existing works to select the least-similar one.

- Aiva composes classical music. Its developers trained it in music theory using reinforcement learning.

Why it matters: Decades ago, Apple’s iTunes service revolutionized digital music distribution. Today, Apple Music has about half as many subscribers as Spotify, the leading distributor of streaming music. Its acquisition of AI Music suggests that it sees generated music as a strategic asset.

We’re thinking: AI systems don’t yet generate great original music, and copyright law for algorithmically generated music is still evolving. That said, a streaming platform that grinds out music for which it owns the copyright could reap ample rewards.

A MESSAGE FROM DEEPLEARNING.AI

|

Learn how to generate images using GANs! The Generative Adversarial Networks (GANs) Specialization covers foundational concepts and advanced techniques in an easy-to-understand approach. Enroll today

|

Robots Don’t Want Human Jobs

Research challenges long-held assumptions about how automation will affect employment.

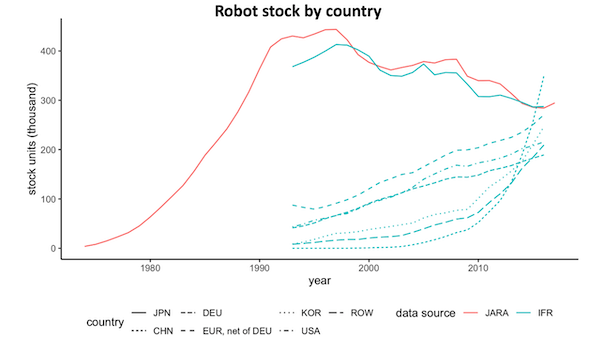

What’s new: Studies in various countries found that automation is associated with more jobs, fewer working hours, and higher productivity, The Economist reported.

What they found: Automation boosted employment in several countries.

- In a 2022 study, researchers at Aalto University and Massachusetts Institute of Technology used natural language processing to analyze 24 years of Finish government and corporate documents. Companies that invested in tools like robots and computer-controlled lathes, mills, and routers saw an average 23 percent rise in employment with no decline in workers’ education levels.

- In 2020, Japan’s Research Institute of Economy, Trade, and Industry studied 30 years of data on robot costs (a proxy for use of robots) and employment levels across numerous industries in Japan since 1978. A 1 percent rise in the use of robots in a given region correlated with a 0.28 percent increase in employment.

- Also in 2020, researchers in France and the UK found that rising automation, reflected by purchases of industrial machinery and consumption of electricity for manufacturing, led to a rise in skilled workers. A 1 percent rise in automation led to an average 0.28 percent increase in employment and a 0.23 percent increase in wages.

Yes, but: While these studies suggest that automation has positive impacts on the workforce, they were conducted in highly developed economies. The situation may vary in other parts of the world, and other studies link robots to lower wages.

Behind the news: Unemployment rates in most of the 38 market-based countries that make up the Organisation for Economic Co-Operation and Development have mostly returned to pre-pandemic levels. This trend runs counter to the fear expressed by some economists amid the first wave of pandemic-driven lockdowns that the shift to remote work would prompt employers to lay off employees and automate their jobs. Japan and South Korea, whose unemployment rates are among the lowest among developed countries — 2.8 percent and 3.1 percent, respectively — are also the world’s most automated economies.

Why it matters: Fear that automatons will take jobs from humans fuels distrust in AI. Research that counters this notion could help improve public confidence in the technology.

We’re thinking: Even if automation fosters job growth on a statistical basis, it clearly threatens specific jobs in specific industries. In such cases, a just society would provide retraining and upskilling programs so that everyone who wants to work can find gainful employment.

|

To Flow or Not to Flow

Networked software is often built using a service-oriented architecture, but networked machine learning applications may be easier to manage using a different programming style.

What's new: Andrei Paleyes, Christian Cabrera, and Neil D. Lawrence at University of Cambridge compared the work required to build a business-oriented machine learning program using a service oriented architecture (SOA) and flow-based programming (FBP).

Key insight: SOA divides a program into services — bundles of functions and memory for, say, navigation, payment processing, and collecting customer ratings in a ride-sharing app — connected to a central hub that passes messages among them. In this arrangement, machine learning applications that draw on large databases generate a high volume of messages, which can require a lot of computation and time spent debugging. FBP, by contrast, conceives a program as a network of functions, or nodes, that exchange data directly with one another. This approach cuts the amount of communication required and makes it easier to track data paths, making it easier to build efficient machine learning programs.

How it works: Over three phases of development, the authors used SOA and FBT to implement taxi-booking applications that took advantage of machine learning. Then they measured the impact of each programming approach on code size, ease of revision, and code complexity.

- In Phase 1, the authors built separate modules that assigned drivers to incoming ride requests, kept track of rides, updated information such as passenger pickup and drop-off times, and measured passenger wait times. SOA called for rider and driver services, while FBP required nodes to handle the interactions among each data stream, such as allocating a ride or calculating the wait time.

- In Phase 2, they added the ability to collect simulated ride requests, driver locations, and rider wait times. Using SOA, they built a new service and modified each previous service to collect the data. Using FBP, they added a node to capture these inputs and outputs.

- In Phase 3, they added a machine learning model trained to estimate passenger wait times using the data collected in Phase 2. The changes required in both approaches were similar. Using FBP, they added a node; using SOA, they added a service.

Results: Both approaches showed distinct benefits. FBP produced a better cognitive complexity score (a measurement of how difficult a code is to understand, where higher is more difficult) in all phases of development. For instance, in Phase 3, FPB scored 1.4 while SOA scored 2.0. On the other hand, the SOA code was easier to revise and less complex in all phases of development. (The authors point out that SOA may have scored higher because it’s more widely used and many libraries exist to reduce code size and complexity. With similar libraries, FBT might catch up.)

Why it matters: FBP provided a better developer experience during data collection, according to the authors’ subjective evaluation. This would allow developers to spend more time optimizing data capture and quality. In addition, reducing the expertise required for data collection could enable machine learning engineers to play a bigger role in that process and improve a model’s performance from the data up.

We’re thinking: Given the ambiguous results, going with the flow might mean sticking with the more familiar SOA approach.