Dear friends,

Last week, I attended the World Economic Forum, an annual meeting of leaders in government, business, and culture held in Davos, Switzerland. I spoke in a few sessions, including a lively discussion with Aidan Gomez, Daphne Koller, Yann LeCun, Kai-Fu Lee, and moderator Nicholas Thompson about present and possible future developments in generative AI. You can watch it here.

The conference's themes included AI, climate change, economic growth, and global security. But to me, the whole event felt like an AI conference! (This is not just my bias. When I asked a few non-AI attendees whether they felt similarly, about three-quarters of them agreed with me.) I had many conversations along two major themes:

Business implementation of AI. Many businesses, and to a lesser extent governments, are looking at using AI and trying to develop best practices for doing so. In some of my presentations, I shared my top two tips:

- Almost all knowledge workers can become more productive right away by using a large language model (LLM) like ChatGPT or Bard as a brainstorming partner, copyeditor, tool to answer basic questions, and so on. But many people still need to be trained to use these models safely and effectively. I also encouraged CEOs to learn to use these tools themselves, so they can lead from the top.

- Beyond using an LLM’s web interface, businesses can call LLMs through APIs, which opens many opportunities to build new AI applications. I shared a task-based analysis framework and described how an analysis like this can lead to buy-versus-build decisions for pursuing the opportunities it identifies, with build being either an in-house project or a spin-out.

AI regulation. With many governments represented at Davos, many discussions about AI regulation also took place. I was delighted that the conversation has become much more sensible than it was 6 months ago, when the narrative was driven by misleading analogies between AI and nuclear weapons and lobbyists had significant momentum pushing proposals that threatened open-source software. However, the fight against stifling regulations isn't over yet! We must continue to protect open-source software and innovation. In detail:

- I am happy to report that, in many hours of conversation about AI and regulations, I heard only one person bring up AI leading to human extinction, and the conversation quickly turned to other topics. I'm cautiously optimistic that this particular fear — of an outcome that is overwhelmingly unlikely — is losing traction and fading away.

- However, big companies, especially ones that would rather not have to compete with open source, are still pushing for stifling, anti-competitive AI regulations in the name of safety. For example, some are still using the argument, “don't we want to know if your open-source LLMs are safe?” to promote potentially onerous testing, reporting, and perhaps even licensing requirements on open-source software. While we would, of course, prefer safe models (just as we would prefer secure software and truthful speech), overly burdensome “protections” could still destroy much innovation without materially reducing harm.

- Fortunately, many regulators are now aware of the need to protect basic research and development. The battle is still on to make sure we can continue to freely distribute the fruits of R&D, including open-sourcing software. But I'm encouraged by the progress we've made in the last few months.

I also went to some climate sessions to listen to speakers. Unfortunately, I came away from them feeling more pessimistic about what governments and corporations are doing on decarbonization and climate change. I will say more about this in future letters, but:

- Although some experts still talk about 1.5 degrees Celsius of warming as an optimistic scenario and 2 degrees as a pessimistic scenario, my own view after reviewing the science is that 2 degrees is a very optimistic scenario, and 4 degrees is a more realistic pessimistic scenario.

- Unfortunately, this overoptimism is causing us to underinvest in resilience and adaptation (to help us better weather the coming changes) and to put less effort into exploring potentially game-changing technologies like geoengineering.

Davos is a cold city where temperatures are often below freezing. In one memorable moment at the conference, I had lost my gloves and my hands were freezing. A stranger whom I had met only minutes earlier kindly gave me an extra pair. This generous act reminded me that, even as we think about the global impacts of AI and climate change, simple human kindness touches people's hearts and reminds us that the ultimate purpose of our work is to help people.

Keep learning!

Andrew

P.S. Check out our new short course on “Automated Testing for LLMOps,” taught by CircleCI CTO Rob Zuber! This course teaches how you can adapt key ideas from continuous integration (CI), a pillar of efficient software engineering, to building applications based on large language models (LLMs). Tweaking an LLM-based app can have unexpected side effects, and having automated testing as part of your approach to LLMOps (LLM Operations) helps avoid these problems. CI is especially important for AI applications given the iterative nature of AI development, which often involves many incremental changes. Please sign up here.

News

Early Detection for Pancreatic Cancer

A neural network detected early signs of pancreatic cancer more effectively than doctors who used the usual risk-assessment criteria.

What’s new: Researchers at MIT and oncologists at Beth Israel Deaconess Medical Center in Boston built a model that analyzed existing medical records to predict the risk that an individual will develop the most common form of pancreatic cancer. The model outperformed the genetic criteria commonly used to select patients for screening.

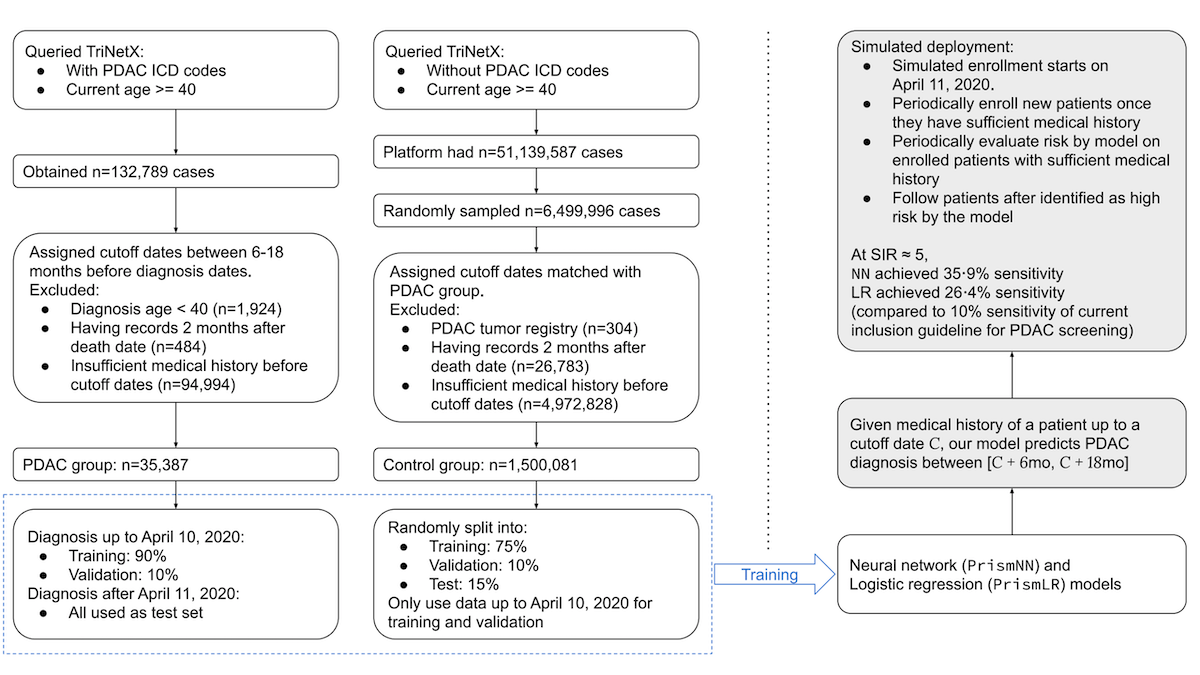

How it works: The authors trained PrismNN, a vanilla neural network, to predict a patient’s risk of receiving a diagnosis of pancreatic ductal adenocarcinoma (PDAC) in the next 6 to 18 months.

- The authors assembled a dataset of roughly 26,250 patients who had developed PDAC and 1.25 million control patients from a proprietary database of anonymized health records from U.S. health care organizations provided by TriNetX (one of the study’s funders). All patients were 40 years or older.

- For each patient, the dataset marked 87 features including age, history of conditions like diabetes and hypertension, presence of pancreatic cysts, and current medications.

- The authors trained the model on their dataset to predict the probability of PDAC in the next 6 to 18 months. At inference, they classified patients as high-risk if the probability exceeded a certain threshold.
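The thresholding step can be sketched in a few lines, together with the two metrics used to evaluate it: the share of patients who went on to develop PDAC that the model flagged (sensitivity), and the share of patients who didn't that it flagged anyway (false-positive rate). This is a minimal illustration, not the authors' code. The article doesn't say which threshold PrismNN used, so the threshold, the toy probabilities, and the helper names `classify_high_risk` and `sensitivity_and_fpr` are all hypothetical.

```python
from typing import Sequence


def classify_high_risk(probs: Sequence[float], threshold: float) -> list[bool]:
    """Flag a patient as high-risk when the predicted probability exceeds the threshold."""
    return [p > threshold for p in probs]


def sensitivity_and_fpr(flags: Sequence[bool], labels: Sequence[int]) -> tuple[float, float]:
    """Return (sensitivity, false-positive rate) for high-risk flags vs. 0/1 outcomes.

    sensitivity: share of patients who developed PDAC (label 1) that were flagged
    false-positive rate: share of patients who did not (label 0) that were flagged anyway
    """
    tp = sum(1 for f, y in zip(flags, labels) if f and y == 1)
    fn = sum(1 for f, y in zip(flags, labels) if not f and y == 1)
    fp = sum(1 for f, y in zip(flags, labels) if f and y == 0)
    tn = sum(1 for f, y in zip(flags, labels) if not f and y == 0)
    return tp / (tp + fn), fp / (fp + tn)


# Hypothetical model outputs and outcomes (1 = diagnosed with PDAC within the window).
probs = [0.92, 0.40, 0.15, 0.70, 0.05, 0.30, 0.02, 0.60]
labels = [1, 1, 0, 1, 0, 0, 0, 0]

flags = classify_high_risk(probs, threshold=0.5)  # hypothetical threshold
sens, fpr = sensitivity_and_fpr(flags, labels)
print(f"sensitivity={sens:.2f}, false-positive rate={fpr:.2f}")
# prints "sensitivity=0.67, false-positive rate=0.20"
```

Raising the threshold flags fewer patients, which lowers the false-positive rate at the cost of sensitivity; lowering it does the reverse. The study reports a single operating point on that tradeoff.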

Results: PrismNN identified as high-risk 35.9 percent of patients who went on to develop PDAC, with a false-positive rate of 4.7 percent. In comparison, the genetic criteria typically used to identify patients for pancreatic cancer screening flag 10 percent of patients who go on to develop PDAC. The model performed similarly across age, race, gender, and location, although some groups (particularly Asian and Native American patients) were underrepresented in its training data.
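To put these rates in perspective, here is a rough, illustrative calculation. It assumes, hypothetically, that the reported rates apply to a group with the same makeup as the study's dataset (about 26,250 patients who developed PDAC and 1.25 million who didn't). The article doesn't report results in this form, and if PDAC is rarer in the population being screened than in this dataset, as it likely is in the general population, precision would be lower still.

```latex
\begin{aligned}
\text{TP} &\approx 0.359 \times 26{,}250 \approx 9{,}424 \\
\text{FP} &\approx 0.047 \times 1{,}250{,}000 = 58{,}750 \\
\text{precision} &= \frac{\text{TP}}{\text{TP} + \text{FP}} \approx \frac{9{,}424}{9{,}424 + 58{,}750} \approx 0.138 \\
\text{sensitivity ratio vs. genetic criteria} &= \frac{35.9}{10} \approx 3.6
\end{aligned}
```

Under these assumptions, roughly 1 in 7 patients flagged as high-risk would go on to develop PDAC, while the model would catch about 3.6 times as many eventual cases as the genetic criteria.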

Behind the news: AI shows promise in detecting various forms of cancer. In a randomized, controlled trial last year, a neural network recognized breast tumors in mammograms at a rate comparable to human radiologists. In 2022, an algorithm successfully identified tumors in lymph node biopsies.

Why it matters: Cancer of the pancreas is one of the deadliest. Only 11 percent of patients survive for 5 years after diagnosis. Most cases aren’t diagnosed until the disease has reached an advanced stage. Models that can spot early cases could boost the survival rate significantly.

We’re thinking: The fact that this study required no additional testing is remarkable and means the authors’ method could be deployed cheaply. However, the results were based on patients who had already been diagnosed with cancer. It remains for other teams to replicate them with patients who have not received a diagnosis, perhaps followed by a randomized, controlled clinical trial.

AI Creates Jobs, Study Suggests

Europeans are keeping their jobs even as AI does an increasing amount of work.

What’s new: Researchers at the European Central Bank found that employment in occupations affected by AI rose over nearly a decade.

How it works: The authors started with jobs that two earlier studies identified as affected by AI over the past decade. As a control group, they used jobs affected by software generally (“recording, storing, and producing information, and executing programs, logic, and rules”), as defined in one of those studies. They measured changes in employment and wages in both groups based on survey data from workers in 16 European countries between 2011 and 2019.

Results: The researchers found that exposure to AI was associated with greater employment for some workers and had little effect on wages.

- Employment of high-education workers rose in jobs affected by AI. This result argues against the hypothesis that AI displaces high-skilled occupations.

- Employment also rose among younger workers in jobs affected by AI.

- Employment and wages among low-education workers and older workers fell in jobs affected by software. This effect was far less pronounced in jobs affected by AI.

- Wages barely changed in jobs affected by AI. Wages fell slightly by one of the three metrics they considered.

Behind the news: Other studies suggest that automation in general and AI technology in particular may benefit the workforce as a whole.

- The United States Bureau of Labor Statistics found that, between 2008 and 2018, U.S. employment in the 11 occupations most exposed to AI (such as translators, personal financial advisers, and fast-food workers) grew by 13.6 percent.

- Economic research in France, the UK, and Japan suggests that industrial automation correlates with increased employment and higher wages.

Yes, but: It may be too soon to get a clear view of AI’s impact on employment, the authors point out. The data that underlies every study to date ends in 2019, predating ChatGPT and the present wave of generative AI. Furthermore, the impact of AI in European countries varies with their individual economic conditions (for instance, Greece tends to lose more jobs than Germany).

Why it matters: Many employees fear that AI — and generative AI in particular — will take their jobs. Around the world, the public is nervous about the technology’s potential impact on employment. Follow-up studies using more recent data could turn these fears into more realistic — and more productive — appraisals.

We’re thinking: AI is likely to take some jobs. We feel deeply for workers whose livelihoods are affected, and society has a responsibility to create a safety net to help them. To date, at least, the impact has been less than many observers feared. One reason may be that jobs are made up of many tasks, and AI automates tasks rather than jobs. In many jobs, AI can automate a subset of the work while the jobs continue to be filled by humans, who may earn a higher wage if AI helps them be more productive.

A MESSAGE FROM DEEPLEARNING.AI

Automated testing of applications based on large language models can save significant development time and cost. In this course, you’ll learn to build a continuous-integration pipeline to evaluate LLM-based apps at every change and fix bugs early for efficient, cost-effective development. Enroll for free

Sovereign AI

Governments want access to AI chips and software built in their own countries, and they are shelling out billions of dollars to make it happen.

What’s new: Nations across the world are supporting homegrown AI processing and development, The Economist reported.

How it works: Governments want AI they can rely upon for state use. The U.S. and China each promised to invest around $40 billion in the field in 2023. Another 6 countries — France, Germany, India, Saudi Arabia, the UAE, and the UK — pledged a combined $40 billion. Different governments are emphasizing different capabilities.

- The U.S., home to tech powers like Amazon, Google, Microsoft, and OpenAI, has left the software sector largely to its own devices. However, the federal government has subsidized the semiconductor industry with a five-year commitment to spend $50 billion on new factories and devoted much smaller amounts to research.

- China also seeks to bolster its semiconductor industry, especially in the face of U.S. export restrictions on AI chips. The government spent $300 billion between 2021 and 2022 trying to build a domestic chip manufacturing industry. In addition, the state cracked down on some tech areas (such as video games) to redirect economic resources toward higher-priority areas, established data exchanges where businesses can make data available for AI development, and created public-private partnerships that support development of advanced technology.

- Saudi Arabia and the UAE are buying up GPUs and investing in universities like Abu Dhabi’s Mohamed bin Zayed University of Artificial Intelligence and Thuwal’s King Abdullah University of Science and Technology to attract global engineering talent. The UAE plans to make national datasets in sectors like health and education available to local startups such as AI71.

- France, Germany, India, and the UK are supporting their own AI startups. France provides public data for AI development. India is courting cloud-computing providers to build data centers in the country and considering a $1.2 billion investment in GPUs.

Behind the news: Even as governments move toward AI independence, many are attempting to influence international politics and trade to bolster their positions.

- As EU lawmakers negotiated the final details of the AI Act, France, Germany, and Italy managed to relax the Act’s restrictions on foundation models. These countries worry that strong restrictions would hamper domestic developers such as France’s Mistral and Germany’s Aleph Alpha and stifle innovation and open source more broadly.

- In September 2022, the U.S. government blocked exports of advanced GPUs and chip-making equipment to most Chinese customers. The sanctions threaten even non-U.S. companies that try to circumvent the restrictions. Consequently, in December, the UAE-based AI developer G42 cut ties with Chinese equipment suppliers. Earlier, the U.S. had extended the restrictions to some Middle Eastern countries including the UAE and Saudi Arabia.

Why it matters: AI has emerged as an important arena for international competition, reshaping global society and economics, generating economic growth, and affecting national security. For engineers, this rivalry means that governments are vying to attract talent and investment, but they’re also less inclined to share technology across borders.

We’re thinking: We understand governments’ desires to ensure access to reliable AI, but focusing on sovereignty above all is misguided. In a networked world, developments can’t be contained to one country. Cooperation ensures that development proceeds at a rapid pace and benefits everyone.

Learning the Language of Geometry

Machine learning algorithms often struggle with geometry. A language model learned to prove relatively difficult theorems.

What's new: Trieu Trinh, Yuhuai Wu, Quoc Le, and colleagues at Google and New York University proposed AlphaGeometry, a system that can prove geometry theorems almost as well as the most accomplished high school students. The authors focused on non-combinatorial Euclidean plane geometry.

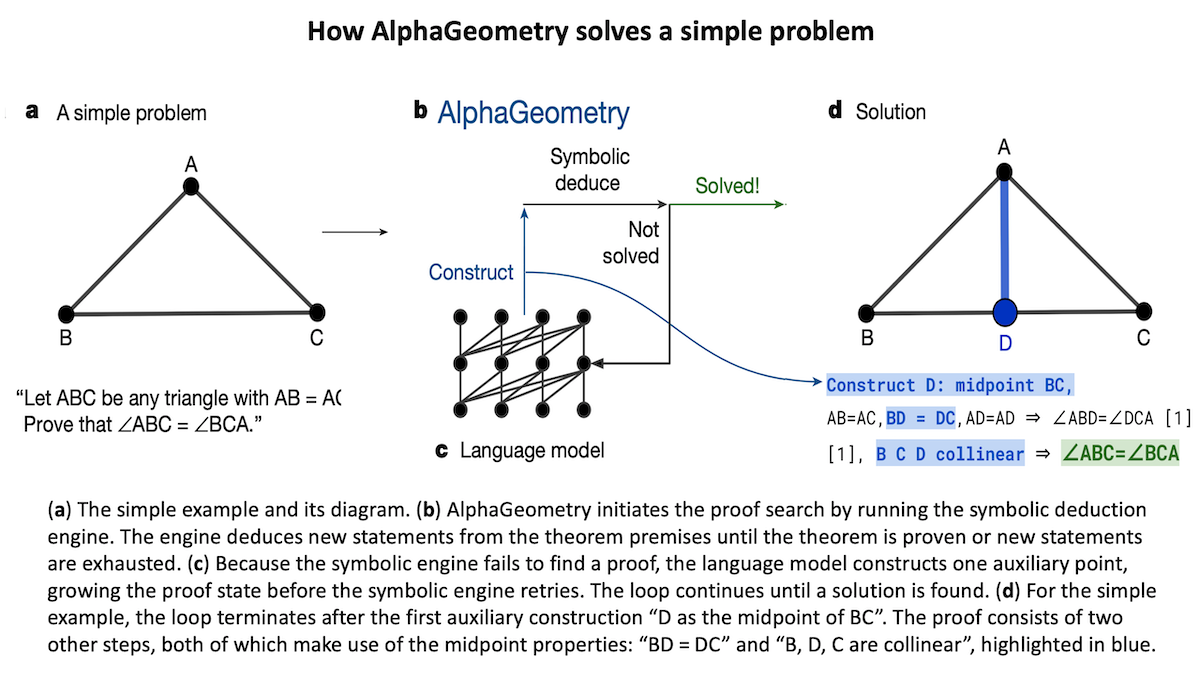

How it works: AlphaGeometry has two components. (i) Given a geometrical premise and an unproven proposition, an off-the-shelf geometric proof finder derived statements that followed from the premise. The authors modified the proof finder to deduce proofs from not only geometric concepts but also algebraic concepts such as ratios, angles, and distances. (ii) A transformer learned to read and write proofs in the proof finder’s specialized language.

- The authors generated a synthetic dataset of 100 million geometric premises, propositions, and their proofs. For instance, given the premise “Let ABC be any triangle with AB = AC” (an isosceles triangle) and the proposition “∠ABC = ∠BCA,” the proof involves constructing a line from A to the midpoint of BC. The authors translated these problems into the proof finder’s language. They pretrained the transformer to generate the proof given a premise and proposition.

- The authors modified 9 million proofs in the dataset to remove references to some lines, shapes, or points from premises. Instead, they introduced these elements in statements of the related proofs. They fine-tuned the transformer, given a modified premise, the proposition, and the proof up to that point, to generate the added elements.

- At inference, given a premise and proposition, the proof finder added statements. If it failed to produce the proposition, the system fed the statements so far to the transformer, which predicted a point, shape, or line that might be helpful in deducing the next statement. Then it gave the premise, proposition, and proof so far — including the new element — to the proof finder. The system repeated the process until the proof finder produced the proposition.
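The inference loop above can be sketched as a toy program. This is an illustration under heavy simplifying assumptions, not the authors' implementation: facts are plain strings, a handful of hand-written if-then rules stand in for the proof finder (which in reality handles geometric and algebraic deduction), and a hypothetical `toy_propose` stub stands in for the trained transformer. The names `deduce_closure`, `prove`, and `toy_propose` are illustrative. The example reuses the isosceles-triangle case, where the helpful construction is the midpoint M of BC.

```python
from typing import Callable, Optional

# A toy rule: if every premise fact is known, the conclusion fact can be added.
Rule = tuple[frozenset[str], str]


def deduce_closure(facts: set[str], rules: list[Rule]) -> set[str]:
    """Stand-in for the symbolic proof finder: apply rules until nothing new follows."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def prove(premise: set[str], goal: str, rules: list[Rule],
          propose: Callable[[set[str]], Optional[str]],
          max_rounds: int = 10) -> Optional[set[str]]:
    """Alternate deduction with proposed auxiliary constructions until the goal appears."""
    statements = set(premise)
    for _ in range(max_rounds):
        statements = deduce_closure(statements, rules)
        if goal in statements:
            return statements  # the proof finder reached the proposition
        construction = propose(statements)  # plays the transformer's role
        if construction is None or construction in statements:
            return None  # no new element to try, so give up
        statements.add(construction)
    return None


# Simplified isosceles-triangle example (not a faithful geometry axiom system).
rules: list[Rule] = [
    (frozenset({"M is midpoint of BC"}), "BM = CM"),
    (frozenset({"AB = AC", "BM = CM"}), "triangle ABM congruent to triangle ACM"),
    (frozenset({"triangle ABM congruent to triangle ACM"}), "angle ABC = angle BCA"),
]


def toy_propose(statements: set[str]) -> Optional[str]:
    """Hypothetical stand-in for the language model: suggest the midpoint M of BC."""
    return None if "M is midpoint of BC" in statements else "M is midpoint of BC"


result = prove({"AB = AC"}, "angle ABC = angle BCA", rules, toy_propose)
print(result is not None)  # prints True
```

In this sketch, deduction runs until nothing new follows before the proposer is consulted, mirroring the order described above (proof finder first, transformer only when it stalls). Deduction alone cannot reach the goal from "AB = AC"; the proposed midpoint unlocks the chain. A practical system might explore several candidate constructions rather than accept a single suggestion.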

Results: The authors tested AlphaGeometry on 30 geometry problems posed since 2000 at the International Mathematical Olympiad, an annual competition for high school students. AlphaGeometry solved 25 of them. Comparing that score to human performance isn’t so straightforward because human competitors can receive partial credit. Human gold medalists since 2000 solved 25.9 of these problems on average, silver medalists 22.9, and bronze medalists 19.3. The previous state-of-the-art approach solved 10 problems, and the modified proof finder on its own solved 14. In one instance, the system identified an unused premise and found a more generalized proof than required, effectively solving many similar problems at once.

Why it matters: Existing AI systems can juggle symbols and follow simple rules of deduction, but they struggle with steps that human mathematicians represent visually by, say, drawing a diagram. It’s possible to make up this deficit by (i) alternating between a large language model (LLM) and a proof finder, (ii) combining geometric and algebraic reasoning, and (iii) training the LLM on a large dataset. The result is a breakthrough for geometric problem solving.

We're thinking: In 1993, the teenaged Andrew Ng represented Singapore in the International Mathematical Olympiad, where he won a silver medal. AI’s recent progress in solving hard problems is a sine of the times!

Data Points

This week's latest updates include an affordable reinforcement-learning-powered robot, exciting AI features in Samsung’s new smartphone series, an improved model for code completion from Stability AI, and much more. Catch up with the help of Data Points, a spin-off of The Batch.