Dear friends,

As we enter the new year, let’s view 2023 not as a single year, but as the first of many in which we will accomplish our long-term goals. Some results take a long time to achieve, and even though we may take actions that bring those results closer, we can do so more effectively if we envision a path rather than simply going from milestone to milestone.

When I was younger, I hardly connected short-term actions concretely to long-term outcomes. I would focus on the next homework assignment, project, or research paper with a vague 10-year goal, lacking a clear path to get there. With experience, I got better at seeing how these efforts could lead to goals that can be achieved only over years.

For instance, 10 years ago, I built my first machine learning course one week at a time (often filming at 2 a.m.). Building the updated Machine Learning Specialization this year, I was able to plan the full course better (and while some filming was still done at 2 a.m., there was less!). In previous businesses, I tended to build a product and only then think about how to take it to customers. These days, I’m more likely to see the big picture even when starting out.

Feedback from friends and mentors can help you shape your vision. A big step in my growth was learning to trust advice from certain experts and mentors — even when I didn’t follow their reasoning — and work hard to understand it. For example, my friends who are experts in global geopolitics sometimes advise me to invest more heavily in particular countries. I would not have come to this conclusion by myself, because I don’t know those countries well. But I’ve learned to explain my long-term plan, solicit their feedback, and listen carefully when they point me in a different direction.

Right now, one of my top goals is to democratize the creation of AI. Having a lot more people able to build custom AI systems will lift up many people. While the path to accomplishing this is long and hard, I can see the steps to get there, and the critiques of friends and mentors have shaped my thinking significantly.

As 2023 approaches, how far into the future can you make plans? Do you want to achieve expertise in a topic, advance your career, or solve a technical problem? By forming a hypothesis of the path — even an untested one — and soliciting feedback to test and refine it, I hope you can shape a vision that inspires and drives you forward.

Dream big for 2023 and beyond!

Happy new year,

Andrew

Get Ready for 2023!

Spring came early in 2022, as what some observers had feared was an impending AI Winter melted into a garden of innovations with potential uses in fields as diverse as art, genomics, and chip design. Dark clouds lingered; generative models continued to produce problematic output, and international tensions flared as the U.S. took steps to block China’s access to AI chips. Yet optimism was palpable in social media, conference proceedings, and venture investment, and the next 12 months promise an abundance of AI progress. In this special issue of The Batch, leaders in the field share their hopes for the coming year.

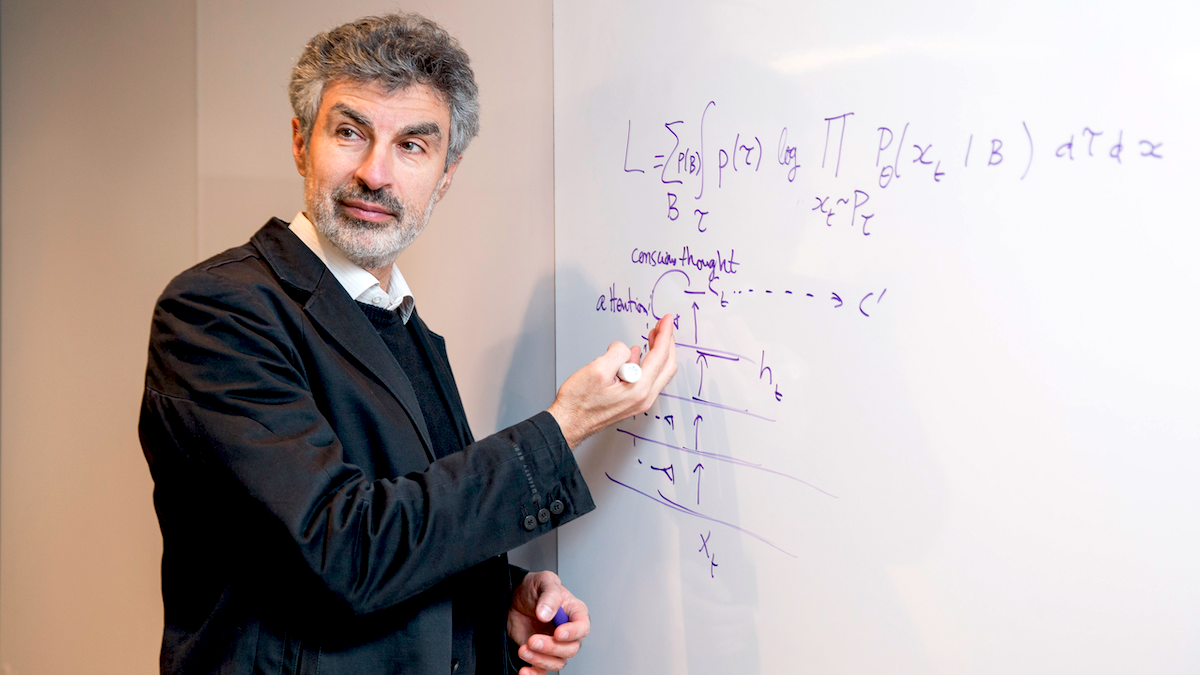

Yoshua Bengio: Models That Reason

Recent advances in deep learning largely have come by brute force: taking the latest architectures and scaling up compute power, data, and engineering. Do we have the architectures we need, and all that remains is to develop better hardware and datasets so we can keep scaling up? Or are we still missing something?

I believe we’re missing something, and I hope for progress toward finding it in the coming year.

I’ve been studying, in collaboration with neuroscientists and cognitive neuroscientists, the performance gap between state-of-the-art systems and humans. The differences lead me to believe that simply scaling up is not going to fill the gap. Instead, building into our models a human-like ability to discover and reason with high-level concepts and relationships between them can make the difference.

Consider the number of examples necessary to learn a new task, known as sample complexity. It takes a huge amount of gameplay to train a deep learning model to play a new video game, while a human can learn this very quickly. Related issues fall under the rubric of reasoning. A computer needs to consider numerous possibilities to plan an efficient route from here to there, while a human doesn’t.

Humans can select the right pieces of knowledge and paste them together to form a relevant explanation, answer, or plan. Moreover, given a set of variables, humans are pretty good at deciding which is a cause of which. Current AI techniques don’t come close to this human ability to generate reasoning paths. Often, they’re highly confident that their decision is right, even when it’s wrong. Such issues can be amusing in a text generator, but they can be life-threatening in a self-driving car or medical diagnosis system.

Current systems behave in these ways partly because they’ve been designed that way. For instance, text generators are trained simply to predict the next word rather than to build an internal data structure that accounts for the concepts they manipulate and how they are related to each other. But I think we can design systems that track the meanings at play and reason over them while keeping the numerous advantages of current deep learning methodologies. In doing so, we can address a variety of challenges from excessive sample complexity to overconfident incorrectness.

I’m excited by generative flow networks, or GFlowNets, an approach to training deep nets that my group started about a year ago. This idea is inspired by the way humans reason through a sequence of steps, adding a new piece of relevant information at each step. It’s like reinforcement learning, because the model sequentially learns a policy to solve a problem. It’s also like generative modeling, because it can sample solutions in a way that corresponds to making a probabilistic inference.

If you think of an interpretation of an image, your thought can be converted to a sentence, but it’s not the sentence itself. Rather, it contains semantic and relational information about the concepts in that sentence. Generally, we represent such semantic content as a graph, in which each node is a concept or variable. GFlowNets generate such graphs one node or edge at a time, choosing which concept should be added and connected to which others in what kind of relation.
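As a rough illustration of building a graph one node or edge at a time, here is a minimal sketch. It is not GFlowNet training: the uniform random policy stands in for a learned one, and the concept list, relation names, and function names are all hypothetical choices made for this example.

```python
import random

# Hypothetical vocabulary, invented for this sketch.
CONCEPTS = ["dog", "ball", "grass"]
RELATIONS = ["chases", "on"]


def candidate_actions(nodes, edges):
    """List every legal next step: stop, add a new concept, or relate two existing ones."""
    actions = [("stop",)]
    actions += [("add_node", c) for c in CONCEPTS if c not in nodes]
    actions += [
        ("add_edge", a, rel, b)
        for a in nodes
        for b in nodes
        if a != b
        for rel in RELATIONS
        if (a, rel, b) not in edges
    ]
    return actions


def sample_graph(rng, max_steps=8):
    """Build a graph step by step; a trained policy would replace rng.choice."""
    nodes, edges, trajectory = [], [], []
    for _ in range(max_steps):
        action = rng.choice(candidate_actions(nodes, edges))  # placeholder policy
        trajectory.append(action)
        if action[0] == "stop":
            break
        if action[0] == "add_node":
            nodes.append(action[1])
        else:
            edges.append(action[1:])  # stored as (source, relation, target)
    return nodes, edges, trajectory


nodes, edges, trajectory = sample_graph(random.Random(0))
```

In a learned version, the policy would assign probabilities to the candidate actions so that complete graphs are sampled in proportion to how well they explain the input; the sketch only shows the sequential construction.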

I don’t think this is the only possibility, and I look forward to seeing a multiplicity of approaches. Through a diversity of exploration, we’ll increase our chance to find the ingredients we’re missing to bridge the gap between current AI and human-level AI.

Yoshua Bengio is a professor of computer science at Université de Montréal and scientific director of Mila - Quebec AI Institute. He received the 2018 A.M. Turing Award, along with Geoffrey Hinton and Yann LeCun, for his contribution to breakthroughs in deep learning.

Alon Halevy: Your Personal Data Timeline

The important question of how companies and organizations use our data has received a lot of attention in the technology and policy communities. An equally important question that deserves more focus in 2023 is how we, as individuals, can take advantage of the data we generate to improve our health, vitality, and productivity.

We create a variety of data throughout our days. Photos capture our experiences, phones record our workouts and locations, Internet services log the content we consume and our purchases. We also record our want-to lists: desired travel and dining destinations, books and movies we plan to enjoy, and social activities we want to pursue. Soon smart glasses will record our experiences in even more detail. However, this data is siloed in dozens of applications. Consequently, we often struggle to retrieve important facts from our past and build upon them to create satisfying experiences on a daily basis.

But what if all this information were fused in a personal timeline designed to help us stay on track toward our goals, hopes, and dreams? The idea is not new. Vannevar Bush envisioned it in 1945, calling it a memex. In the 1990s, Gordon Bell and his colleagues at Microsoft Research built MyLifeBits, a prototype of this vision. The prospects and pitfalls of such a system have been depicted in film and literature.

Privacy is obviously a key concern in terms of keeping all our data in a single repository and protecting it against intrusion or government overreach. Privacy means that your data is available only to you, but if you want to share parts of it, you should be able to do it on the fly by uttering a command such as, “Share my favorite cafes in Tokyo with Jane.” No single company has all our data or the trust to store all our data. Therefore, building technology that enables personal timelines should be a community effort that includes protocols for the exchange of data, encrypted storage, and secure processing.

Building personal timelines will also force the AI community to pay attention to two technical challenges that have broader application.

The first challenge is answering questions over personal timelines. We’ve made significant progress on question answering over text and multimodal data. However, in many cases, question answering requires that we reason explicitly about sets of answers and aggregates computed over them. This is the bread and butter of database systems. For example, answering “what cafes did I visit in Tokyo?” or “how many times did I run a half marathon in under two hours?” requires that we retrieve sets as intermediate answers, which is not currently done in natural language processing. Borrowing more inspiration from databases, we also need to be able to explain the provenance of our answers and decide when they are complete and correct.
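To make the database-style reasoning concrete, here is a minimal sketch of the two example questions answered over a tiny in-memory timeline. The `Event` schema, the sample records, and the function names are all assumptions invented for illustration; a real system would first have to translate the natural-language question into such a query.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Event:
    day: date
    kind: str                              # e.g. "cafe_visit" or "race"
    name: str
    city: str
    duration: Optional[timedelta] = None   # only set for timed activities
    source: str = "unknown"                # app the record came from (provenance)


# Made-up records standing in for data fused from several apps.
TIMELINE = [
    Event(date(2022, 3, 4), "cafe_visit", "Cafe A", "Tokyo", source="photos"),
    Event(date(2022, 3, 5), "cafe_visit", "Cafe B", "Tokyo", source="maps"),
    Event(date(2022, 3, 9), "cafe_visit", "Cafe A", "Tokyo", source="maps"),
    Event(date(2022, 5, 1), "cafe_visit", "Cafe C", "Paris", source="photos"),
    Event(date(2021, 10, 10), "race", "half marathon", "Boston",
          timedelta(hours=1, minutes=58), "watch"),
    Event(date(2022, 4, 17), "race", "half marathon", "Tokyo",
          timedelta(hours=2, minutes=5), "watch"),
    Event(date(2022, 11, 6), "race", "half marathon", "Boston",
          timedelta(hours=1, minutes=52), "watch"),
]


def cafes_visited(timeline, city):
    """Set-valued answer: each distinct cafe mapped to the sources that support it."""
    answer = {}
    for event in timeline:
        if event.kind == "cafe_visit" and event.city == city:
            answer.setdefault(event.name, set()).add(event.source)
    return answer


def count_fast_half_marathons(timeline, limit=timedelta(hours=2)):
    """Aggregate answer: number of half marathons finished in under `limit`."""
    return sum(
        1
        for event in timeline
        if event.kind == "race"
        and event.name == "half marathon"
        and event.duration is not None
        and event.duration < limit
    )


tokyo_cafes = cafes_visited(TIMELINE, "Tokyo")
fast_races = count_fast_half_marathons(TIMELINE)
```

Keeping the supporting sources next to each answer is one simple way to expose provenance; deciding whether the set is complete would need additional knowledge about which apps were recording at the time.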

The second challenge is to develop techniques that use our timelines, responsibly, for improved personal well-being. Taking inspiration from the field of positive psychology, we can all flourish by creating positive experiences for ourselves and adopting better habits. An AI agent that has access to our previous experiences and goals can give us timely reminders and suggestions of things to do or avoid.

Ultimately, what we choose to do is up to us, but I believe that an AI with a holistic view of our day-to-day activities, better memory, and superior planning capabilities would benefit everyone.

Alon Halevy is a director at the Reality Labs Research branch of Meta. His hopes for 2023 represent his personal opinion and not that of Meta.

Douwe Kiela: Less Hype, More Caution

This year we really started to see AI go mainstream. Systems like Stable Diffusion and ChatGPT captured the public imagination to an extent we haven’t seen before in our field. These are exciting times, and it feels like we are on the cusp of something great: a shift in capabilities that could be as impactful as — without exaggeration — the industrial revolution.

But amidst that excitement, we should be extra wary of hype and extra careful to ensure that we proceed responsibly.

Consider large language models. Whether or not such systems really “have meaning,” lay people will anthropomorphize them anyway, given their ability to perform arguably the most quintessentially human thing: to produce language. It is essential that we educate the public on the capabilities and limitations of these and other AI systems, especially because the public largely thinks of computers as good old-fashioned symbol-processors — for example, that they are good at math and bad at art, while currently the reverse is true.

Modern AI has important and far-reaching shortcomings. Systems are too easily misused or abused for nefarious purposes, intentionally or inadvertently. Not only do they hallucinate information, they do so with seemingly very high confidence and without the ability to attribute or credit sources. They lack a rich-enough understanding of our complex multimodal human world and do not possess enough of what philosophers call “folk psychology,” the capacity to explain and predict the behavior and mental states of other people. They are arguably unsustainably resource-intensive, and we poorly understand the relationship between the training data going in and the model coming out. Lastly, despite the unreasonable effectiveness of scaling — for instance, certain capabilities appear to emerge only when models reach a certain size — there are also signs that with that scale comes even greater potential for highly problematic biases and even less-fair systems.

My hope for 2023 is that we’ll see work on improving all of these issues. Research on multimodality, grounding, and interaction can lead to systems that understand us better because they understand our world and our behavior better. Work on alignment, attribution, and uncertainty may lead to safer systems less prone to hallucination and with more accurate reward models. Data-centric AI will hopefully show the way to steeper scaling laws, and more efficient ways to turn data into more robust and fair models.

Finally, we should focus much more seriously on AI’s ongoing evaluation crisis. We need better and more holistic measurements — of data and models — to ensure that we can characterize our progress and limitations, and understand, in terms of ecological validity (for instance, real-world use cases), what we really want out of these systems.

Douwe Kiela is an adjunct professor in symbolic systems at Stanford University. Previously, he was the head of research at Hugging Face and a research scientist at Facebook AI Research.

A MESSAGE FROM DEEPLEARNING.AI

In 2022 our amazing Pie & AI ambassadors hosted over 100 events in 66 cities around the globe! Here is a heartfelt thank you to all of them, from everyone at DeepLearning.AI. Read some of their experiences here.

Been Kim: A Scientific Approach to Interpretability

It’s an exciting time for AI, with fascinating advances in generated media and many other applications, some even in science and medicine. Some folks may dream about what more AI can create and how much bigger models we may engineer. While those directions are exciting, I argue that we need to pursue much less flashy work: going back to the basics and studying AI models as targets of scientific inquiry.

Why and how? The field of interpretability aims to create tools to generate “explanations” for the output of complex models. This field has emerged naturally out of a need to build machines that we can have a dialog with: What is your decision? Why did you decide that? For example, a tool takes an image and a classification model, and generates explanations in the form of weighted pixels. The higher a pixel’s weight, the more important it is. For instance, the more its value affects the output, the more important it may be — but the definition of importance differs depending on the tool.
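One simple way to produce such weighted pixels is occlusion: blank out one pixel at a time and record how much the model's output changes. The sketch below assumes this particular definition of importance, which is only one of many, and uses a hypothetical toy model and image rather than any real interpretability tool.

```python
def toy_model(image):
    """Hypothetical classifier score that depends only on the top-left 2x2 patch."""
    return sum(image[r][c] for r in range(2) for c in range(2))


def occlusion_weights(model, image, baseline=0.0):
    """Weight each pixel by how much the output changes when that pixel is blanked."""
    base_score = model(image)
    weights = []
    for r, row in enumerate(image):
        weight_row = []
        for c in range(len(row)):
            occluded = [list(pixels) for pixels in image]  # copy, leave input intact
            occluded[r][c] = baseline
            weight_row.append(abs(base_score - model(occluded)))
        weights.append(weight_row)
    return weights


IMAGE = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
WEIGHTS = occlusion_weights(toy_model, IMAGE)
# Pixels outside the top-left patch get weight 0 because the toy model ignores them.
```

A gradient-based tool would assign different weights to the same image, which is why the definition of importance has to be stated alongside any explanation.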

While there have been some successes, many tools have turned out to behave in ways we did not expect. For example, it has been shown that explanations of an untrained model are quantitatively and qualitatively indistinguishable from those of a trained model. (Then what does the explanation explain?) Explanations often change with small changes in the input despite resulting in the same output. In addition, there isn’t much causal relationship between a model’s output (what we are trying to explain) and the tool’s explanation. Other work shows that good explanations of a model’s output don’t necessarily have a positive influence on how people use the model.

What does this mismatch between expectation and outcome mean, and what should we do about it? It suggests that we need to examine how we build these tools.

Currently we take an engineering-centric approach: trial and error. We build tools based on intuition (for instance, explanations would be more intuitive for humans if we generate a weight per chunk of pixels instead of per individual pixel). While the engineering-centric approach is useful, we also need fundamental principles (what can be called science) to build better tools.

In developing drugs, for instance, trial and error is essential (say, testing a new medicine through rigorous clinical trials before deploying it), but it goes hand in hand with sciences like biology and genetics. While science has many gaps in understanding how the human body works, it provides fundamental principles for creating the tool (in this case, drugs). In other words, pursuing both science and engineering simultaneously, such that each can inform the other, has proven to be a successful way to work with complex beings (humans).

The field of machine learning needs to study our complex aliens (models) as other disciplines study humans. How would such study of these aliens help interpretability? Here’s an example. A team at the University of Tübingen found that neural networks see texture (say, an elephant’s skin) more than shape (an elephant’s outline). Even if we see an elephant’s contour in the explanation of an image, perhaps in the form of collectively highlighted pixels, the study informs us that the model may not be seeing the shape but rather the texture. This is called inductive bias: a tendency of a particular class of models due to either its architecture or the way we optimize it. Revealing such tendencies can help us understand this alien, just as revealing a human’s tendency (bias) can be used to understand human behavior (such as unfair decisions).

In this way, the methods often used to understand humans can also help us understand AI models. These include observational studies (say, observing multi-agent systems from afar to infer emerging behaviors), controlled studies (for instance, intervening in a multi-agent system to elicit underlying behaviors), and surgery (such as examining the internals of the superhuman chess player AlphaZero). For AI models, thanks to the way their internals are built (they are made of math!), we have one more tool: theoretical analysis. Work in this direction has already yielded exciting theoretical results on the behaviors of models, optimizers, and loss functions. Some take advantage of classical tools in statistics, physics, dynamical systems, or signal processing. Many tools from different fields are yet to be explored in the study of AI.

Pursuing science doesn’t mean we should stop engineering. The two go hand in hand: Science will enable us to build tools under principles and knowledge, while engineering enables science to become practical. Engineering can also inspire science: What works well in practice can provide hints to structures of models that we wish to formalize in science, just as the high performance of convolutional networks in 2012 inspired many theory papers that tried to analyze why convolutions help generalization.

I’m excited to enter 2023 and many other years to come as we advance our understanding of our aliens and invent ways to communicate with them. By enabling a dialogue, we will enable richer collaborations and better leverage the complementary skill sets of humans and machines.

Been Kim is a research scientist at Google Brain. Her work on helping humans to communicate with complex machine learning models won the UNESCO Netexplo award.

Reza Zadeh: Active Learning Takes Off

As we enter the new year, there is a growing hope that the recent explosion of generative AI will bring significant progress in active learning. This technique, which enables machine learning systems to generate their own training examples and request that they be labeled, contrasts with most other forms of machine learning, in which an algorithm is given a fixed set of examples and usually learns from those alone.

Active learning can enable machine learning systems to:

- Adapt to changing conditions

- Learn from fewer labels

- Keep humans in the loop for the most valuable/difficult examples

- Achieve higher performance

The idea of active learning has been in the community for decades, but it has never really taken off. Previously, it was very hard for a learning algorithm to generate images or sentences that were simultaneously realistic enough for a human to evaluate and useful to advance a learning algorithm.

But with recent advances in generative AI for images and text, active learning is primed for a major breakthrough. Now, when a learning algorithm is unsure of the correct label for some part of its encoding space, it can actively generate data from that section to get input from a human.
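The query loop can be sketched on a deliberately tiny problem: learning a single decision threshold on the interval from 0 to 1. The learner generates its own example at the point where it is least certain, asks for a label, and narrows its uncertainty. The `oracle` function is a hypothetical stand-in for the human labeler, and the sketch assumes the endpoints are already known to be negative and positive; generating realistic images or sentences is the hard part that this toy problem sidesteps.

```python
def oracle(x, true_threshold=0.37):
    """Stand-in for a human labeler (hypothetical): 1 if x is at or above the hidden threshold."""
    return int(x >= true_threshold)


def active_learn_threshold(label_fn, low=0.0, high=1.0, budget=10):
    """Learn a 1-D decision threshold by generating queries in the uncertain region.

    Assumes points at or below `low` are negative and points at or above
    `high` are positive; everything in between is still unknown.
    """
    queries = []
    for _ in range(budget):
        x = (low + high) / 2       # the point the learner is least sure about
        y = label_fn(x)            # ask the labeler
        queries.append((x, y))
        if y == 1:
            high = x               # boundary lies at or below x
        else:
            low = x                # boundary lies above x
    return (low + high) / 2, queries


estimate, queries = active_learn_threshold(oracle)
# Ten labels narrow the uncertain interval to a width of 1/1024.
```

Labeling randomly chosen points would need far more labels to reach a similar precision, which illustrates the "learn from fewer labels" benefit in the simplest possible setting.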

Active learning has the potential to revolutionize the way we approach machine learning, as it allows systems to continuously improve and adapt over time. Rather than relying on a fixed set of labeled data, an active learning system can seek out new information and examples that will help it better understand the problem it is trying to solve. This can lead to more accurate and effective machine learning models, and it could reduce the need for large amounts of labeled data.

I have a great deal of hope and excitement that active learning will build upon the recent advances in generative AI. As we enter the new year, we are likely to see more machine learning systems that implement active learning techniques, and it is possible that 2023 could be the year that active learning truly takes off.

Reza Zadeh is founder and CEO at Matroid, a computer vision company, an adjunct professor at Stanford, and an early member of Databricks. Twitter: @Reza_Zadeh.