Dear friends,

I’m grateful to the AI community for the friendships it has brought me and the benefits it has brought to billions of people. But members of the AI community don’t always honor one another. In the spirit of Thanksgiving, which we in the U.S. celebrate this week, I’d like to talk about how we can treat each other with greater civility.

While AI has done much good, it has also created adverse effects. Machine learning systems have perpetuated harmful stereotypes, generated results that treat some minority groups unfairly, aided the spread of disinformation, and enabled some governments to oppress their citizens. It’s up to us to find, call out, and solve these problems.

But there’s a difference between airing problems so we can work toward a solution and attacking fellow AI developers for their perceived sins. We’re sometimes too quick to attack each other on social media when we have disagreements. Misdirected criticisms can go viral before a correction can catch up.

I’ve seen many events that people may have misconstrued:

- A workshop had a slate of invited speakers who were all of one gender and lacked diversity in other dimensions. The organizer must have been biased, right? Actually, the group was fairly diverse until several speakers unexpectedly canceled at the last minute, leaving a homogeneous slate.

- A vision algorithm favored a light-skinned person over a dark-skinned person. Clearly the algorithm was racist, and possibly the people who built it as well, right? But when its performance was examined on a larger set of data, this appeared to be an isolated example rather than a pervasive trend.

- Members of a majority group found a certain word derogatory toward a particular minority and had it removed from public communications. Anyone using it must be insensitive and ignorant, right? It turned out the minority group in question didn’t consider the word derogatory. Perhaps the critics were mistaken.

To be clear, the AI world has problems. I don’t want anyone to shy away from addressing them. When you come across a pressing issue, here are suggestions that might encourage productive conversation:

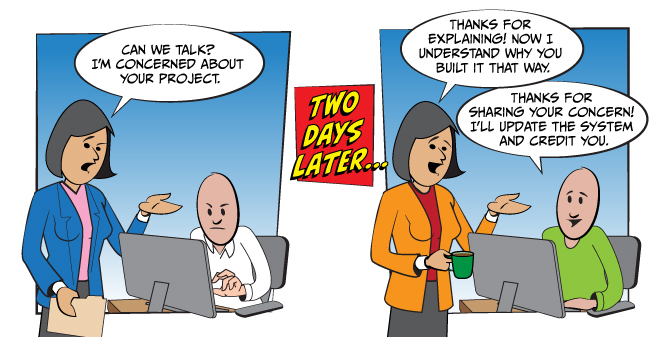

- Reach out privately. When you see someone doing something you consider problematic — perhaps even unethical — give them a chance to explain why, or make sure someone else has, before you fire off that explosive tweet. Perhaps they had an innocent, or even positive, reason for their actions that you weren’t aware of.

- Encourage transgressors to correct their mistakes. If you find that a scientist has made an error, try privately to persuade them to publish a correction or retraction. That can fix the problem while preserving their dignity. If you reach out and find them immovable or they refuse to engage, you can still call them out publicly and make sure the truth gets out.

- Don’t be cowed. If you find a real problem, and you’ve spoken with the people at the center of it and found that more needs to be said in public, go for it! If you’re not sure, consider asking colleagues to help you double-check your thinking, consider other perspectives, and gather allies who can help you push forward.

As we wrestle with important issues around values, ethics, diversity, and responsibility, let’s keep our arguments civil and support discussions that focus on solving problems rather than public shaming. In addition to being civil yourself, I ask you also to encourage others to be civil, and think twice before repeating or amplifying messages that aren’t. The AI community faces difficult challenges, and working together will make us more effective in wrestling with them.

Happy Thanksgiving and keep learning!

Andrew

News

When Officials Share Personal Data

The government of South Korea is supplying personal data to developers of face recognition algorithms.

What’s new: The South Korean Ministry of Justice has given the data profiles of more than 170 million international and domestic air travelers to unspecified tech companies, the news service Hankyoreh reported. The distribution of personal data without consent may violate the country’s privacy laws.

How it works: The government collects data on travelers at Incheon International Airport, the country’s largest airport. It gives facial portraits along with the subjects’ nationality, gender, and age to contractors building a system that would screen people passing through Incheon’s customs and immigration facility. The project began in 2019 and is scheduled for completion in 2022.

- Last year, South Korea passed along data describing 57.6 million Korean citizens and 120 million foreign nationals.

- Another system in development is intended to recognize unusual behavior based on videos of travelers in motion and images of atypical behavior.

- The Ministry of Justice argues that South Korea’s Personal Information Protection Act, which bans the collection, use, and disclosure of personal data without prior informed consent, doesn’t require consent if personal data is used for purposes related to the reason it was collected.

- A coalition of civic groups pledged to file a lawsuit on behalf of foreign and domestic individuals whose images were used.

Why it matters: Face recognition is an attractive tool for making travel safer and more efficient. But data is prone to leaking, and face recognition infrastructure can be pressed into service for other, more corruptible purposes. In the South Korean city of Bucheon, some 10,000 cameras originally installed in public places to fight crime are feeding a “smart epidemiological investigation system” that will track individuals who have tested positive for infectious diseases, scheduled to begin operation in January 2022, Hankyoreh reported. The city of Ansan is building a system that will alert police when it recognizes emotional expressions that might signal child abuse, scheduled to roll out nationwide in 2023. Given what is known about the efficacy of AI systems that recognize emotional expressions, never mind the identity of a face, such projects demand the highest scrutiny.

We’re thinking: Face recognition is a valuable tool in criminal justice, national security, and reunifying trafficked children with their families. Nonetheless, the public has legitimate concerns that such technology invites overreach by governments and commercial interests. In any case, disseminating personal data without consent — and possibly illegally — can only erode the public’s trust in AI systems.

Long-Haul Chatbot

State-of-the-art chatbots typically are trained on short dialogs. Consequently they often respond with off-point statements in extended conversations. To improve that performance, researchers developed a way to track context throughout a conversation.

What's new: Jing Xu, Arthur Szlam, and Jason Weston at Facebook released a chatbot that summarizes dialog on the fly and uses the summary to generate further repartee.

Key insight: Chatbots based on the transformer architecture typically generate replies by analyzing up to 1,024 of the most recent tokens (usually characters, words, or portions of words). Facebook previously used a separate transformer to determine which earlier statements were most relevant to a particular reply — but in long conversations, the relevant statements may encompass more than 1,024 tokens. Summarizing such information can give a model access to more context than is available to even large, open-domain chatbots like BlenderBot, Meena, and BART.

How it works: The authors built a dataset of over 5,000 conversations. They trained a system of three transformers respectively to summarize conversations as they occurred, select the five summaries most relevant to the latest back-and-forth turn, and generate a response.

- The authors recorded text chats between pairs of volunteers. Each conversation consisted of three or four sessions (up to 14 messages each) separated by pauses that lasted up to seven days.

- After each session, a volunteer summarized the session to serve as reference for subsequent sessions (which may involve different conversants). In addition, the volunteer either summarized each turn or marked it with a label indicating that no summary was needed.

- A BlenderBot, given a dialog from the start through each turn, learned to match the turn-by-turn summaries.

- A dense passage retriever, pretrained on question-answer pairs, ranked and selected the turn-by-turn summaries most relevant to the session so far according to nearest neighbor search.

- A separate BlenderBot received the top summaries and generated the next response.
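The retrieval step above can be sketched in a few lines. This is a toy illustration, not the authors' code: it swaps in bag-of-words vectors and cosine similarity for the learned dense passage retriever, and every function name here (`embed`, `cosine`, `top_k_summaries`, `build_generator_input`) is hypothetical. It shows only the general idea of ranking stored summaries against the latest turn and passing the top few to a generator.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a learned encoder: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_summaries(summaries, query, k=5):
    """Return the k stored summaries nearest to the query (nearest neighbor search)."""
    query_vec = embed(query)
    ranked = sorted(summaries, key=lambda s: cosine(embed(s), query_vec), reverse=True)
    return ranked[:k]


def build_generator_input(summaries, recent_turns, k=5):
    """Assemble the text a response generator would see: top summaries, then recent turns."""
    query = " ".join(recent_turns)
    selected = top_k_summaries(summaries, query, k)
    return "\n".join(selected + recent_turns)


# Hypothetical summaries accumulated over earlier sessions.
memory = [
    "speaker a has a dog named rex",
    "speaker b works as a nurse",
    "speaker a enjoys hiking on weekends",
]
turns = ["how is your dog doing"]
print(build_generator_input(memory, turns, k=1))
```

Because the generator sees short summaries rather than raw history, the amount of text it must read stays roughly constant however long the conversation runs.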

Results: Human evaluators compared the authors’ model to a garden-variety BlenderBot, which draws context from the most recent 128 tokens. They scored the authors’ model an average 3.65 out of 5 compared with the BlenderBot’s 3.47. They found 62.1 percent of its responses engaging versus 56.5 percent of the BlenderBot’s responses.

Why it matters: After much work on enabling chatbots to discuss a variety of topics, it’s good to see improvement in their ability to converse at length. Conversation is inherently dynamic, and if we want chatbots to keep up with us, we need them to ride a train of thought, hop off the line, switch to a new rail, and shift back to the first — all without losing track.

We’re thinking: If Facebook were to use this system to generate chatter on the social network, could we call its output Meta data? (Hat tip to Carol-Jean Wu!)

A MESSAGE FROM DEEPLEARNING.AI

Check out the Generative Adversarial Networks Specialization! Created by leading experts, this specialization will equip you with the foundational knowledge and hands-on training you need to build powerful GANs. Enroll now

Deep Learning for Deep Frying

A robot cook is frying to order in fast-food restaurants.

Hot off the grill: Flippy 2, a robotic fry station from California-based Miso Robotics, has been newly deployed in a Chicago White Castle location. It operates without a human in the loop to boost throughput, reduce contamination, and perform tasks traditionally allotted to low-paid workers.

Special sauce: The robot’s arm slides on an overhead rail. It grabs baskets of raw french fries, chicken wings, onion rings, or what have you, places them in boiling oil, and unloads the finished product — fried to automated perfection — into a chute that conveys cooked food into trays.

- The arm is equipped with thermal-imaging cameras and uses computer vision to locate and classify foods in the baskets.

- Miso can customize the system to recognize different foods and adjust cooking times and temperatures. The company adjusted it to prepare chicken wings for Buffalo Wild Wings.

- Flippy 2 units are available to rent for around $3,000 a month in a business approach known as robots as a service.

A chef’s tale: Flippy 2’s arm pivoted from grilling hamburgers to deep frying. In 2018, its bulkier predecessor’s first job was flipping patties at a Pasadena, California, branch of the CaliBurger chain (owned by CaliGroup, which also owns Miso Robotics). It was taken out of service the next day owing to a crush of novelty-seeking patrons and difficulty placing cooked burgers on a tray, which prompted retraining. Since then, Miso’s emphasis appears to have shifted to frying, and the machine went on to prepare chicken tenders and tater tots at Dodger Stadium, and later french fries and onion rings at White Castle.

Why it matters: Fast food’s high-output, repetitive tasks are well suited to automation. The work can be hot, grueling, and low-wage, leading to turnover of employees that approaches 100 percent annually. Fast-food restaurants in the U.S. are experiencing a wave of walkouts as workers seek higher wages and better working conditions. Robots might pick up the slack, for better or worse.

Food for thought: We’ve seen several robotics companies take off as labor shortages related to the pandemic have stoked demand in restaurants and logistics. While the machines will help feed hungry patrons, they’ll also make it harder for humans to get jobs. Companies, institutions, and governments need to establish programs to train displaced employees for jobs that humans are likely to retain.

U.S. AI Strategy In Gear

An independent commission charged with helping the United States prepare for an era of AI-enabled warfare disbanded last month. Many of its recommendations already are being implemented.

What’s new: Wired examined the legacy of the National Security Commission on Artificial Intelligence. Its recommendations have been enshrined in over 190 laws this year alone.

What they accomplished: The commission, whose 15 members were drawn from government, academia, and industry (including executives from Amazon, Google, and Oracle), was founded in 2018 and delivered its final report earlier this year. It took a broad view that emphasized nurturing AI talent and fostering international cooperation (under U.S. leadership). It also recommended integrating AI into regular military operations, using the technology to drive intelligence gathering and analysis, and developing fully autonomous weapons (for use only when authorized by commanders in accordance with international law).

- Lawmakers incorporated 19 of the commission’s recommendations into the 2021 National Defense Authorization Act, former commission spokesperson Tara Rigler told The Batch. These address military needs such as evaluating recruits’ proficiency in computational thinking, but also non-military priorities like funding for non-defense AI research and training human resource departments to cultivate AI talent.

- In January, the State Department formed a cyberspace bureau in response to the commission’s concerns, Rigler said. Its mission includes upgrading digital security, reducing the likelihood of AI-driven conflict, and ensuring that the U.S. would prevail if conflict were to arise. (Earlier this year, a government audit determined that the bureau had not explained how it would accomplish its goals without interfering with a different agency that has a similar mission.)

Yes, but: Critics argue the commission’s promotion of military AI could drive an arms race akin to the one that led the U.S. and Russia to stockpile tens of thousands of nuclear weapons between the 1940s and 1990s. They say that the group’s adversarial stance toward geopolitical competitors could further degrade global stability. Others warn that the resulting relationship between the military and private companies could incentivize conflict.

Why it matters: Technology is moving fast, and the U.S. has lagged other national efforts to keep pace. A defense-focused roadmap is an important step, yet it invites questions about the nation’s ambitions and values.

We’re thinking: The commission’s emphases on accountability, cultivating AI talent, collaborating with allies, and using AI to uphold democratic principles are laudable. At the same time, it raises difficult questions about how to uphold national security in the face of disruptive technologies. We oppose fully autonomous weapons and encourage every member of the AI community to work toward a peaceful, prosperous future that benefits people throughout the world.