Working AI: At the Office with VP of Applied Deep Learning Research Bryan Catanzaro

Title: VP of Applied Deep Learning Research, Nvidia

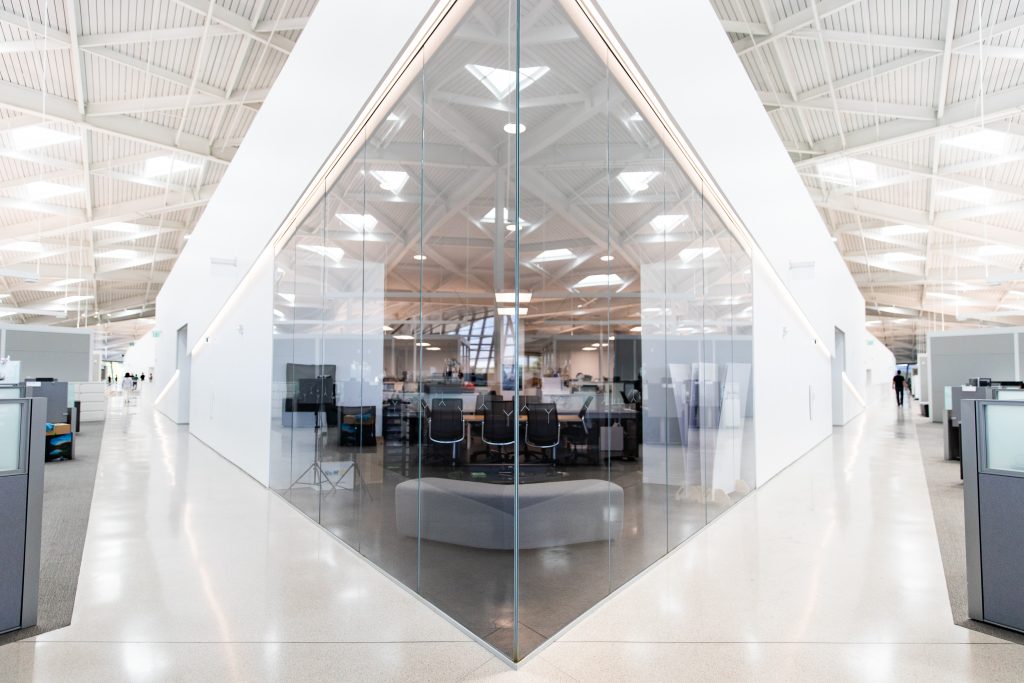

Location: Santa Clara, CA

Age: 39

Education: PhD, EECS, University of California, Berkeley

Years in industry: 8 years + 8 internships

Favorite AI researcher: Ilya Sutskever

When Bryan Catanzaro returned to Nvidia in 2016 to head the new Applied Deep Learning Research lab, he was the only member. Three years later, he’s leading a team of 40 researchers pushing the boundaries of deep learning. The lab creates prototype applications for the company, whose chips dramatically boost neural-network speed and efficiency. Bryan’s position allows him to pursue broad interests that are reflected in publications spanning computer vision, speech recognition, and language processing. Read on to learn about his journey from intern to VP.

How did you first get started in deep learning?

I started out as an intern at Intel in 2001, building circuits for future CPUs that were supposed to run at 10 GHz. Spoiler alert: we didn’t get there! That experience showed me that sequential processing was running into a wall. So when I started my PhD at Berkeley in 2005, I looked for applications that had large computational demands and high levels of algorithmic parallelism. That search brought me to machine learning.

Back then, neural networks were seen as a little old-fashioned and unlikely to solve any important problems. Many of us had to change our minds once the deep learning revolution started!

After graduating from Berkeley in 2011, I joined Nvidia Research. That’s how I ended up collaborating with Andrew Ng and his Stanford research group. We wrote a paper showing that efficient, HPC-oriented software running on three GPU-based servers could replace 1,000 CPU-based servers for training deep learning models. That work attracted a lot of attention at Nvidia! I had been working on a small library for neural network computation on the GPU. Nvidia decided to productize this research prototype, and it became our neural network library, cuDNN.

You went from intern to VP at Nvidia in only a few short jumps. How did you manage that?

I’ve always tried to choose projects based on my own internal beliefs about where technology is going and where I think I can make the biggest difference. Nvidia has also given me the chance to do big things. Nvidia is a visionary company. Perhaps the same instinct that led Nvidia to bet early on deep learning also allowed it to bet on me.

Also, soft skills have been critical to my work. An invention can’t change the world unless other people understand it, so success requires careful communication, patience, and understanding.

What are you and your team working on at Nvidia?

My team is called Applied Deep Learning Research, and we build prototype applications that show new ways for deep learning to solve problems at Nvidia. Our research is focused on four main application domains: computer graphics and vision; speech and audio; natural language processing; and chip design. You can read more about our projects on our website: https://nv-adlr.github.io

Take us through your typical workday.

My day starts with two hours blocked off for reading and planning. I usually spend the rest of the day working with people on the team, either one on one or in groups. We review the problems we’ve run into and our current progress, and then brainstorm next steps. I also read and write a lot of email and Slack messages.

What tech stack do you use and why?

We use Python and libraries like NumPy and matplotlib because they are convenient and efficient. We’re a PyTorch-focused team because we have found that PyTorch is the easiest framework in which to do our DL research. We like PyTorch’s simple programming model, which makes it easier to debug our code, as well as its simple implementation, which allows us to easily extend it with custom operators. We schedule jobs on Nvidia DGX-1 and DGX-2 servers using Slurm, which has good support for running multi-node jobs efficiently and fairly.

How is it different to work in ML at a hardware company versus a software company?

Every GPU we design at Nvidia is a multi-billion-dollar investment that takes several years to reach the market. This means the company has to make big bets as one team. That wouldn’t be possible without the “speed of light” culture that drives work at Nvidia: we focus on finding the limits of technology and then pushing as close to them as possible. Engineers at Nvidia are typically not impressed by speedup factors. If you say, “I made something five times faster!”, the response will usually be, “How much faster could it still go?”

Many people don’t know this, but Nvidia has more software engineers than hardware engineers. A GPU is a lot more than just a chip. It gets good performance through amazing compilers, libraries, frameworks, and applications. This is why most of the engineers at Nvidia write software.

Your published research spans image, speech, and language processing, as well as generative modeling. You’re involved in both software and hardware design. Is it an advantage to have such a broad view, or is it a disadvantage not to concentrate in a particular area?

I’ve always been interested in research at the boundaries of established fields, as that’s where I think some of the best opportunities lie. Machine learning as a technology has incredibly broad applications, so I find myself working on all sorts of different problems. It’s been fun for me to be involved in so many different things, but of course there are tradeoffs.

I’ve often felt like a professional outsider. I still remember publishing my first paper at the International Conference on Machine Learning in 2008: some people at the conference asked me why I was there at all! That didn’t feel great. Back then, very few people were working on ML systems. Now I work on ML applications at a systems-focused company. Working on many different kinds of applications also means that I’m constantly learning the details of how ML is applied in many different fields, from graphics to genomics to conversational user interfaces.

Do you have a favorite research project that you feel deserves more attention?

I’m still very proud of my linear-time algorithm for in-place matrix transposition, which has applications in processor design as well as deep learning frameworks. I can still remember the day I finished the proof for the algorithm; it was an incredible feeling. One of these days I’ll get back to rewriting the code. I believe there’s still quite a lot of performance to be had from this algorithm, and it would also be great to generalize it to the tensor transpositions we need for efficient deep learning.
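The interview doesn’t describe how the algorithm works. Purely to make the problem concrete, here is a minimal Python sketch of the classic cycle-following approach to in-place transposition, which is a different and simpler technique than the linear-time algorithm Bryan mentions. It assumes an m × n matrix stored row-major in a flat list: the element at flat index k (other than the first and last) belongs at index (k · m) mod (mn − 1) in the transpose, so the function walks each permutation cycle once. It uses O(1) extra memory but more than linear time. The function names are hypothetical.

```python
def _is_cycle_leader(start, m, last):
    """Return True if `start` is the smallest index on its permutation cycle."""
    k = (start * m) % last
    while k != start:
        if k < start:
            return False  # this cycle was already handled from a smaller index
        k = (k * m) % last
    return True


def transpose_in_place(a, m, n):
    """Transpose a row-major m x n matrix stored in flat list `a`, in place.

    Afterwards `a` holds the n x m transpose, also in row-major order.
    Classic cycle-following method (not a linear-time algorithm).
    """
    size = m * n
    last = size - 1  # indices 0 and size - 1 never move
    for start in range(1, last):
        if not _is_cycle_leader(start, m, last):
            continue
        # Carry each element forward to its destination around the cycle.
        value = a[start]
        k = start
        while True:
            k = (k * m) % last
            a[k], value = value, a[k]
            if k == start:
                break


if __name__ == "__main__":
    a = [1, 2, 3, 4, 5, 6]  # 2 x 3: [[1, 2, 3], [4, 5, 6]]
    transpose_in_place(a, 2, 3)
    print(a)  # [1, 4, 2, 5, 3, 6], i.e. 3 x 2: [[1, 4], [2, 5], [3, 6]]
```

The leader check is what keeps extra memory constant: instead of a visited bitmap, each candidate start re-walks its cycle to see whether a smaller index already owns it. That extra walking is exactly the kind of cost a faster algorithm would avoid.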

How do you keep learning?

I follow a bunch of amazing researchers on Twitter, which I find is a great way to hear quickly about interesting new projects. You can see my list here. I also love http://www.arxiv-sanity.com. I make space in my schedule to read papers and books. This week I’m reading the Capacitron and XLNet papers, and revisiting a classic book: Crucial Conversations.

What advice do you have for people trying to break into AI?

It’s not too hard to get started. There are lots of online classes, webpages, and open-source projects to try out. Deep learning as an algorithm works by taking lots of small incremental steps to move to a better place, despite not knowing exactly what direction to go. I think that philosophy works for most things in life: just get started and iterate quickly. You’ll find your way as long as you keep moving and keep adjusting.
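Bryan’s analogy maps onto gradient descent: at each step, the gradient only says which way is downhill locally, and the algorithm takes a small step in that direction. The toy sketch below assumes a one-parameter loss (w - 3)^2 and an arbitrarily chosen learning rate of 0.1. Real training uses stochastic gradients over millions of parameters, but the update rule has the same form. The function names are hypothetical.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Minimize a 1-D function by repeatedly stepping against its gradient."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)  # small step in the locally downhill direction
    return w


def toy_loss_grad(w):
    # Derivative of the toy loss (w - 3)**2, whose minimum is at w = 3.
    return 2.0 * (w - 3.0)


if __name__ == "__main__":
    w = gradient_descent(toy_loss_grad, w0=0.0)
    print(round(w, 4))  # prints 3.0
```

With these settings, each update shrinks the distance to the minimum by a constant factor (1 - 2 × 0.1 = 0.8), so the steps get smaller as w approaches 3.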

Bryan Catanzaro is the VP of Applied Deep Learning Research at Nvidia. You can find him on Twitter and Google Scholar.