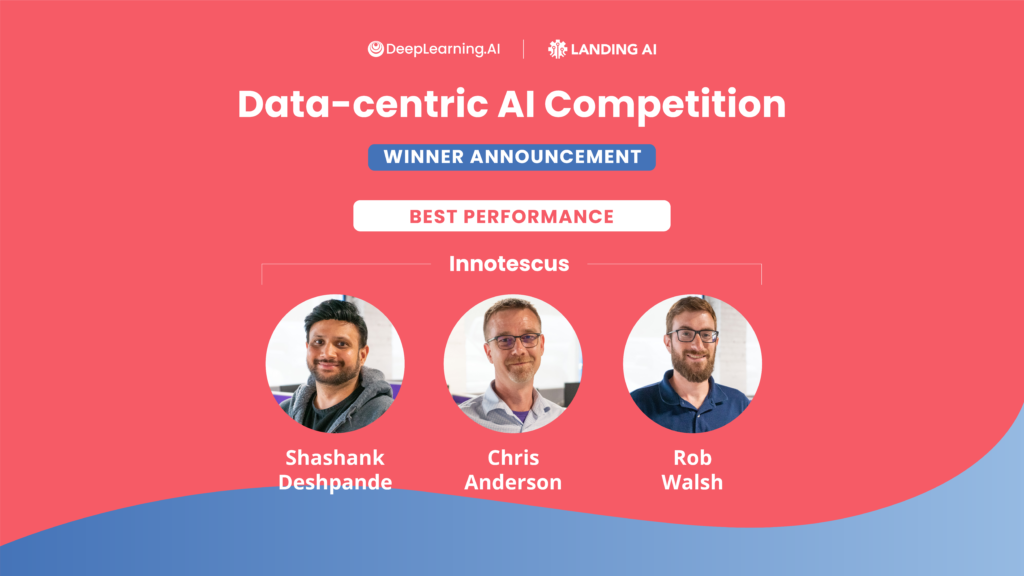

How We Won the First Data-Centric AI Competition: Innotescus

In this blog post, Innotescus, one of the winners of the Data-Centric AI Competition, describes the techniques and strategies that led to victory. Participants received a fixed model architecture and a dataset of 1,500 handwritten Roman numerals. Their task was to optimize model performance solely by improving the dataset and dividing it into training and validation sets. The dataset size was capped at 10,000 images. You can find more details about the competition here.

Your personal journey in AI

Shashank, Chris, and Rob first worked together at ChemImage Corporation, leveraging machine learning with dense hyperspectral imaging datasets to identify chemical signatures. In order to accurately detect concealed explosives, drugs, and different biological structures, the team was routinely required to build high-quality, application-specific training datasets. Building these training datasets was time-intensive—no available off-the-shelf tools could deliver what they needed. After a few years of successfully building high-quality datasets, the team recognized the widespread need for the tools they’d built themselves, and founded Innotescus. Innotescus is a group of scientists, engineers, and entrepreneurs with a vision for better AI. We understand and appreciate the importance of having detailed insights into data, and using those insights to create objectively high-quality data for high-impact machine learning models.

Why you decided to participate in the competition

We decided to enter the Data-Centric AI competition for a few reasons. First, the challenge aligns closely with the mission and vision that we’ve established at Innotescus. Our goal is to illuminate the black box of machine learning with deep insights into data, so being able to effectively curate a dataset is at the core of our focus. Second, this was a great opportunity to put the tools that we’ve built to the test and see how well we stack up. Finally, we feel strongly that the machine learning universe — and the world at large — would benefit immensely from explainable, approachable AI, and we want to lead the movement in that direction. The more we work to demystify the world of machine learning, the faster we can get to safer and more responsible technology that makes the world a better place.

The techniques you used for the Data-Centric AI competition

Our method can be split into two parts: data labeling and balancing data distributions.

Data Labeling

As Prof. Andrew Ng and others have highlighted, and as we have seen in our own experience, the first and arguably largest source of problems in creating a high-quality training dataset is data labeling errors and inconsistencies. Having a consistent set of rules for labeling and a strong consensus among annotators and field experts mitigates errors and greatly reduces subjectivity.

For this competition, we broke the dataset cleaning process into three parts:

1. Identify noisy images

This was a no-brainer. We removed noisy images from the training set. These images clearly don’t correspond to any particular class and would be detrimental to model performance.

Imagine being the annotator assigned to these…

2. Identify incorrect classes

We corrected mislabelled data points. Human annotators are prone to mistakes, and having a systematic QA or review process helps identify and eliminate those errors.

Mislabelled images in the Roman-Mnist dataset

3. Identify ambiguous data points

We defined consistent rules for ambiguous data points. For example, in the images shown below, we consider a data point as class 2 if we see a clear gap between the two vertical lines (top row), even when they are at an angle. If there is no identifiable gap, we consider the data point as class 5 (bottom row). Having pre-defined rules helped us resolve ambiguity more objectively.

Ambiguous images in the Roman-Mnist dataset. According to our labelling criteria, the top row is labelled as II, while the bottom row is labelled as V.

This three-step process cut down the dataset to a total of 2,228 images, a 22% reduction from the provided dataset. This alone resulted in 73.099% accuracy on the test set, an approximately 9% boost from the baseline performance.

Balancing Data Distributions

When we collect training data in the real world, we arguably capture a snapshot of data in time, invariably introducing hidden biases into our training data. Biased data often leads to poor learning. One solution is reducing ambiguous data points and ensuring balance along major dimensions of variance within the dataset.

1. Rebalancing Training and Testing Datasets

Real world data has a lot of variance built into it. This variance almost always causes unbalanced distributions, especially when observing a specific feature or metric. When augmented, these biases can get amplified. The result? Throwing more data at your model may drive you further away from your goal.

We observed this with two of our submissions in this competition. The two submissions contained the same data, just split differently between training and validation (80:20 and 88:12 respectively). We saw that the addition of 800 images to the training set actually reduced the accuracy on the test set by about 1.5%. After this, our approach shifted from “more data” to “more balanced data.”

2. Rebalancing Subclasses Using Embeddings

The first imbalance we observed was in the upper and lowercase distribution within each class. For example, our “cleaned” data contained 90 images of lowercase class 1 and 194 images of uppercase class 1. Staying true to our hypothesis, we needed equal representation (500 images) from each case in each class, which adds up to the 10,000-image limit set by the competition rules.
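The arithmetic behind that target can be sketched in a few lines. This is only an illustration: the two counts for class 1 and the 500-image target come from the text above, while the function name and the assumption of 10 classes (inferred from 500 images x 2 cases adding up to 10,000) are ours.

```python
# Target of 500 images per (class, case) subset is taken from the post.
TARGET_PER_SUBSET = 500
NUM_CLASSES = 10          # assumption: inferred from 500 * 2 * classes = 10,000
CASES = ("lower", "upper")


def augmentation_needed(counts, target=TARGET_PER_SUBSET):
    """Return how many extra (augmented) images each subset needs to reach target."""
    return {key: max(target - n, 0) for key, n in counts.items()}


# Cleaned counts quoted in the post for class 1 only.
cleaned_counts = {(1, "lower"): 90, (1, "upper"): 194}

needed = augmentation_needed(cleaned_counts)
total_budget = TARGET_PER_SUBSET * len(CASES) * NUM_CLASSES

print(needed)        # {(1, 'lower'): 410, (1, 'upper'): 306}
print(total_budget)  # 10000
```

For class 1, the lowercase subset would need 410 additional images and the uppercase subset 306 to reach parity.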

Using Innotescus’ integrated Dive chart visualizations, we can see that within each class, there is an imbalance between lower and upper case numerals.

We then further explored clusters within each uppercase and lowercase subset. We subdivided each case into 3 clusters using K-means clustering on the PCA-reduced ResNet-50 embeddings.

Once we had these clusters (shown below), we simply balanced each of the sub-clusters with augmented images resulting from translation, scale and rotation.

A UMAP visualization of clusters – separated by color – obtained on lowercase class
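A minimal sketch of the clustering step, using scikit-learn, might look like the following. Several things here are assumptions rather than details from our pipeline: random vectors stand in for the ResNet-50 embeddings, the number of PCA components (50) is arbitrary, and the per-cluster target of 167 is a hypothetical value chosen only to show how a deficit per cluster could be computed.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA


def cluster_embeddings(embeddings, n_components=50, n_clusters=3, seed=0):
    """Reduce embeddings with PCA, then assign each row to a K-means cluster."""
    # PCA cannot keep more components than samples or features.
    n_components = min(n_components, *embeddings.shape)
    reduced = PCA(n_components=n_components, random_state=seed).fit_transform(embeddings)
    kmeans = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    return kmeans.fit_predict(reduced)


def images_to_add(labels, target_per_cluster, n_clusters=3):
    """Number of augmented images each cluster needs to reach the target size."""
    sizes = np.bincount(labels, minlength=n_clusters)
    return np.maximum(target_per_cluster - sizes, 0)


rng = np.random.default_rng(0)
# Placeholder for the embeddings of one lowercase subset (90 images, 2048-d).
fake_embeddings = rng.normal(size=(90, 2048))

labels = cluster_embeddings(fake_embeddings)
deficits = images_to_add(labels, target_per_cluster=167)  # hypothetical target
print(np.bincount(labels, minlength=3), deficits)
```

Each deficit would then be filled with translated, scaled, or rotated copies of images drawn from the same cluster.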

3. Rebalancing Edge Cases with Hard Examples and Augmentation

Towards the end of the competition, we observed that there were certain examples in our validation set that we consistently misclassified. Our goal here was to help the model classify these examples with higher confidence.

We believe that these misclassifications are caused by an underrepresentation of “edge case” examples in our training set. Below is one such example; this is a III being misclassified as a IV.

The ResNet-50 output for a hard example. The model classifies the image as a IV with a raw prediction value of 2.793, but the prediction value for class III is only slightly lower at 2.225.

From the model prediction output, we observed that even though we misclassify this example, the values for class III and class IV are very close. We wanted to identify more examples on or near the “decision boundary” and add them to our training set, so we defined a difficulty score as described below:

$$\text{difficulty score} = \frac{P_{o_{\max}} - P_{o_{\text{2nd max}}}}{P_{o_{\max}}}$$

where $P_{o_{\max}}$ is the max predicted output and $P_{o_{\text{2nd max}}}$ is the next best predicted value. This describes the percentage difference between the first- and second-most likely predicted values. For the output shown in the example, the difficulty score is $(2.793 - 2.225) / 2.793 \approx 0.203$. We then added a constraint: if the difficulty score is less than 0.5, we consider that a “hard example.” This process gave us an additional 880 images that we added to our training set.

A sample of the hard examples found using the difficulty score
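The difficulty score is simple enough to sketch directly. In the snippet below, only the two values 2.793 (class IV) and 2.225 (class III) and the 0.5 threshold come from the text; the function names and the remaining output values are made up for illustration, and the code assumes the largest raw output is positive.

```python
import numpy as np


def difficulty_score(outputs):
    """(max - second max) / max over the raw per-class outputs of one image.

    Assumes the largest output is positive, as in the example from the post.
    """
    second, best = np.sort(np.asarray(outputs, dtype=float))[-2:]
    return (best - second) / best


def is_hard_example(outputs, threshold=0.5):
    """An image counts as 'hard' when its difficulty score is below the threshold."""
    return difficulty_score(outputs) < threshold


# Index 2 is class III (2.225) and index 3 is class IV (2.793);
# every other value is a made-up placeholder.
example = [0.1, -0.4, 2.225, 2.793, 0.3, -1.0, 0.0, -0.2, 0.5, -0.7]

print(round(difficulty_score(example), 3))  # 0.203
print(is_hard_example(example))             # True
```

A confident prediction such as `[5.0, 1.0, 0.0]` scores 0.8 and would not be flagged.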

Additionally, we cropped the images by a few pixels to reduce the white space around the Roman numerals and used different iterations of dilation and erosion. Some of these additional examples are shown above. However, the addition of these 880 “hard” images meant that we had to remove 880 existing images from our validation set. To do this, we studied the histogram distribution of the difficulty score for each class in the training dataset, and matched its distribution in the validation set. Matching the training and validation difficulty scores was the best way to avoid over- or underfitting to the training dataset, and ensure optimal model performance.
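One way to match difficulty-score distributions is to bin the training scores and sample the validation set to the same per-bin proportions. The sketch below shows that idea for a single class with NumPy. It is an illustration under our own assumptions, not the exact procedure we ran: the function name, the number of bins, and the synthetic scores are all hypothetical.

```python
import numpy as np


def match_histogram(train_scores, val_scores, n_keep, bins=10, seed=0):
    """Pick up to n_keep validation indices whose score histogram follows training.

    May return slightly fewer than n_keep indices because per-bin quotas are
    rounded down and a bin can run out of validation candidates.
    """
    rng = np.random.default_rng(seed)
    edges = np.histogram_bin_edges(np.concatenate([train_scores, val_scores]), bins=bins)
    train_hist, _ = np.histogram(train_scores, bins=edges)
    quota = np.floor(n_keep * train_hist / train_hist.sum()).astype(int)
    # Bin index of each validation score; the top edge falls into the last bin.
    val_bin = np.clip(np.digitize(val_scores, edges) - 1, 0, bins - 1)
    keep = []
    for b in range(bins):
        candidates = np.flatnonzero(val_bin == b)
        take = min(quota[b], len(candidates))
        if take > 0:
            keep.extend(rng.choice(candidates, size=take, replace=False).tolist())
    return np.array(sorted(keep), dtype=int)


rng = np.random.default_rng(1)
train = rng.uniform(0, 1, size=1000)  # synthetic difficulty scores
val = rng.uniform(0, 1, size=400)
kept = match_histogram(train, val, n_keep=200)
print(len(kept))
```

Validation images whose indices are not returned would be the ones to drop.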

Your advice for other learners

Getting started with machine learning has never been easier than today. We have great tutorials, courses, research papers and blogs available at our fingertips. We have powerful environments like Google Colab and frameworks like TensorFlow that allow us to design and experiment with amazing ML algorithms using state-of-the-art models with just a few lines of code. However, good training data still remains one of the biggest bottlenecks for creating a deployable solution. Our advice for other learners is to pay particular attention to your data: garbage in, garbage out.

We at Innotescus firmly believe that having a systematic approach to creating datasets not only improves the performance of our ML solutions, but also significantly helps reduce the time and resources required. We suggest breaking the process down into the following key questions:

- Know your problem – What are you trying to solve? Having a good understanding of the scope of the problem you are trying to solve greatly helps in defining what kind of data is required.

- Know your data – Are your annotations consistent? Define clear rules for annotation and be thorough. Always have a quality control check to catch any annotation errors. Beyond right and wrong, do you understand your data? Explore your data distribution, hidden biases, outliers, etc. so you can a) know the edge cases and limits of your solution, and b) identify holes in the dataset and correct them.

- Know your impact – How do your choices affect the results? Explore and experiment to understand how different dataset splits, augmentations, and data parameters affect your model performance. As we saw when rebalancing our training and testing datasets, more data isn’t always better!